Examples

NV center - DC Magnetometry

- class Magnetometry(particle_filter: ParticleFilter, phys_model: NVCenter, control_strategy: Callable, simpars: SimulationParameters, cov_weight_matrix=None, eta_flag: bool = False, extraction_flag: bool = False, cov_flag: bool = False)

Bases:

StatelessMetrologySimulates the estimation of a magnetic field with a mean square error loss. This class is suitable for a neural network agent, for a static strategy, and for other simple controls known in the literature, like the

strategy and the particle guess heuristic (PGH). It works both for static and for oscillating magnetic fields.

Constructor of the

Magnetometryclass.Parameters

- particle_filter:

ParticleFilter Particle filter responsible for the update of the Bayesian posterior on the parameters and on the state of the probe. It contains the methods for applying the Bayes rule and computing Bayesian estimators from the posterior.

- phys_model:

NVCenter Abstract description of the parameters encoding and of the measurement on the NV center.

- control_strategy: Callable

Callable object (normally a function or a lambda function) that computes the values of the controls for the next measurement from the Tensor input_strategy. This class expects a callable with the following header

controls = control_strategy(input_strategy)It is typically a wrapper for the neural network or a vector of static controls.

- simpars:

SimulationParameters Contains the flags and parameters that regulate the stopping condition of the measurement loop and modify the loss function used in the training.

- cov_weight_matrix: List, optional

Weight matrix that determines the relative contribution to the total error of the parameters in phys_model.params. It is list of float representing a positive semidefinite matrix. If this parameter is not passed then the default weight matrix is the identity, i.e.

.

- eta_flag: bool = False

This flag controls the addition of a normalization factor to the MSE loss.

If eta_flag is True, the MSE loss is divided by the normalization factor

(56)

where

are the bounds of the i-th parameter in phys_model.params and

are the diagonal entries of cov_weight_matrix.

is the total elapsed evolution time, which can be different for each estimation in the batch.

Achtung! This flag should be used only if the resource is the total estimation time.

- extraction_flag: bool = False

If extraction_flag=True a couple of particles are sampled from the posterior and added to the input_strategy Tensor. This is useful to simulate the PGH control for the evolution time, according to which the k-th control should be

(57)

with

, where

and

are respectively the first and the second particle extracted from the ensemble and

is the cartesian norm.

- cov_flag: bool = False

If cov_flag=True a flattened version of the covariance matrix of the particle filter ensemble is added to the input_strategy Tensor. This is useful to simulate the

control strategy and its variant that accounts for a finite transversal relaxation time. They prescribe respectively for the k-th control

(58)

and

(59)

being

the covariance matrix of the posterior computed with the

compute_covariance()method.

- generate_input(weights: Tensor, particles: Tensor, meas_step: Tensor, used_resources: Tensor, rangen: Generator)

Generates the input_strategy Tensor of the

generate_input()method. The returned Tensor is influenced by the parameters extract_flag and cov_flag of the constructor.

- loss_function(weights: Tensor, particles: Tensor, true_values: Tensor, used_resources: Tensor, meas_step: Tensor)

Mean square error on the parameters, as computed in

loss_function(). The behavior of this method is influence by the flag eta_flag passed to the constructor of the class.

- particle_filter:

- class NVCenter(batchsize: int, params: List[Parameter], prec: Literal['float64', 'float32'] = 'float64', res: Literal['meas', 'time'] = 'meas', control_phase: bool = False)

Bases:

StatelessPhysicalModelModel for the negatively charged NV center in diamond used for various quantum metrological task. A single measurement on the NV center consists in multiple Ramsey sequencies of the same controllable duration applied to the NV center, followed by photon counting of the photoluminescent photon and a majority voting to decide the binary outcome. The NV center is reinitialized after each photon counting. During the free evolution in the Ramsey sequence the NV center precesses freely in the external magnetic field, thereby encoding its value in its state. The two possible controls we have on the system are the duration of the free evolution

and the phase

applied before the photon counting. The resource of the estimation task can be either the total number of measurements or the total evolution time.

Achtung! The

model()method must still be implemented in this class. It should describe the probability of gettingin the measurement after the majority voting from the collected photons.

Constructor of the

NVCenterclass.Parameters

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- params: List[

Parameter] List of unknown parameters to estimate in the NV center experiment, with their respective bounds.

- prec{“float64”, “float32”}

Precision of the floating point operations in the simulation.

- res: {“meas”, “time”}

Resource type for the present metrological task, can be either the total evolution time, i.e. time, or the total number of measurements on the NV center, i.e. meas.

- control_phase: bool = False

If this flag is True, beside the free evolution time, also the phase applied before the photon counting is controlled by the agent.

- count_resources(resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, meas_step: Tensor)

The resources can be either the total number of measurements, or the total evolution time, according to the attribute res of the

NVCenterclass.

- perform_measurement(controls: Tensor, parameters: Tensor, meas_step: Tensor, rangen: Generator) Tuple[Tensor, Tensor]

Measurement on the NV center.

The NV center is measured after having evolved freely in the magnetic field for a time specified by the parameter control. The possible outcomess are

and

, selected stochastically according to the probabilities

, where

is the evolution time (the control) and

the parameters to estimate. This method returns the observed outcomes and their log-likelihood.

Achtung! The

model()method must return the probability

- class NVCenterDCMagnetometry(batchsize: int, params: List[Parameter], prec: Literal['float64', 'float32'] = 'float64', res: Literal['meas', 'time'] = 'meas', invT2: Optional[float] = None)

Bases:

NVCenterModel describing the estimation of a static magnetic field with an NV center used as magnetometer. The spin-spin relaxation time

can be either known, or be a parameter to estimate. The estimation will be formulated in terms of the precession frequency

of the NV center, which is proportional to the magnetic field

.

This physical model and the application of Reinforcement Learning to the estimation of a static magnetic fields have been also studied in the seminal work of Fiderer, Schuff and Braun 1.

Constructor of the

NVCenterDCMagnetometryclass.Parameters

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- params: List[

Parameter] List of unknown parameters to estimate in the NV center experiment, with their respective bounds.

- prec{“float64”, “float32”}

Precision of the floating point operations in the simulation.

- res: {“meas”, “time”}

Resource type for the present metrological task, can be either the total evolution time, i.e. time, or the total number of measurements on the NV center, i.e. meas.

- invT2: float, optional

If this parameter is specified only the precession frequency

is considered as an unknown parameter, while the inverse of the transverse relaxation time

is fixed to the value invT2. In this case the list params must contain a single parameter, i.e. omega. If no invT2 is specified, it is assumed that it is an unknown parameter that will be estimated along the frequency in the Bayesian procedure. In this case params must contain two objects, the second of them should be the inverse of the transversal relaxation time.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, meas_step: Tensor, num_systems: int = 1) Tensor

Model for the outcome of a measurement on a NV center subject to free precession in a static magnetic field. The probability of getting the outcome

is

(60)

The evolution time

is controlled by the trainable agent, and

is the unknown precession frequency, which is proportional to the magnetic field. The parameter

may or may not be an unknown in the estimation, according to the value of the attribute invT2 of the

NVCenterDCMagnetometryclass.

- main()

Runs the same simulations done by Fiderer, Schuff and Braun 1.

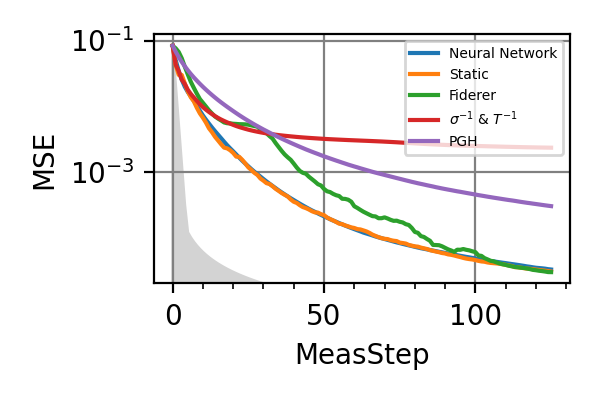

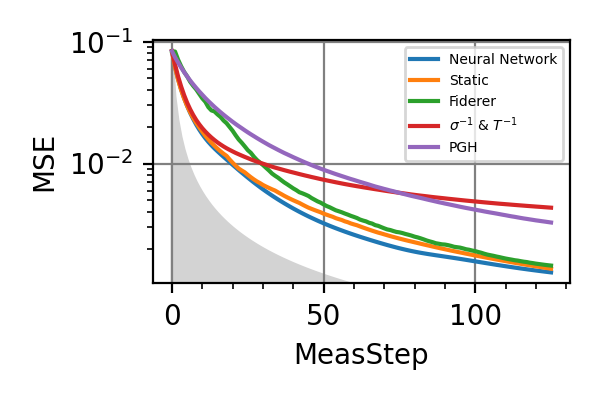

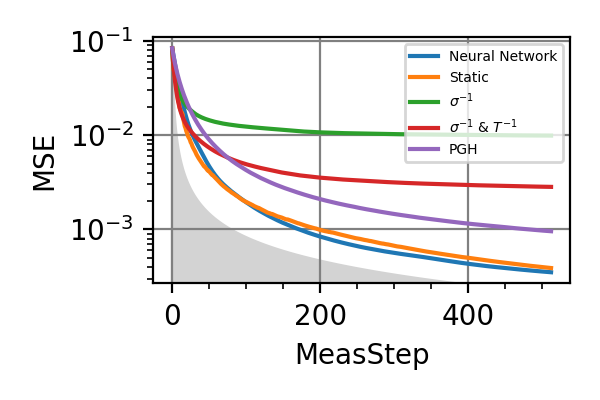

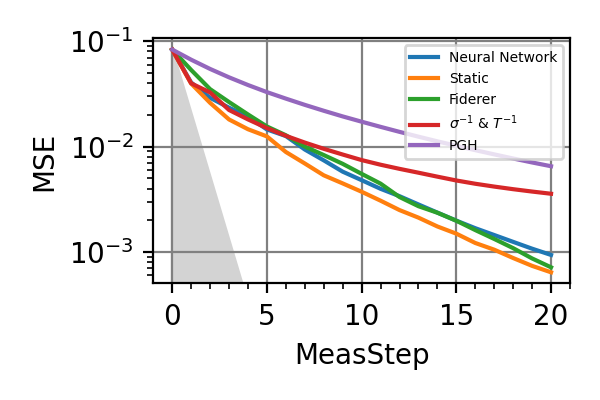

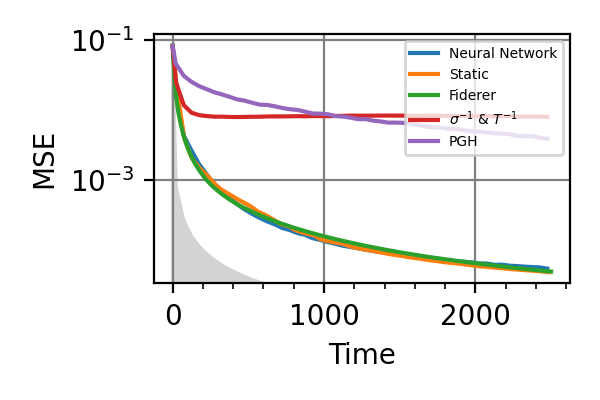

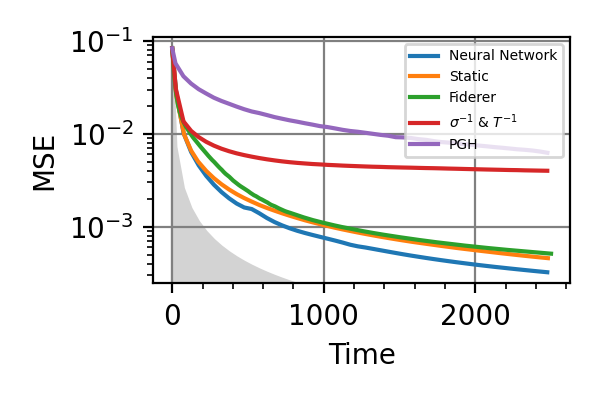

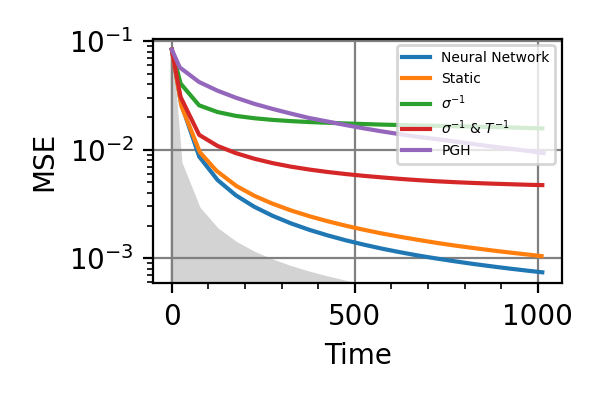

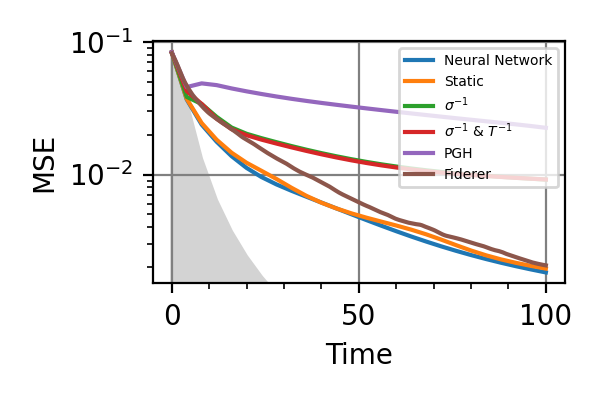

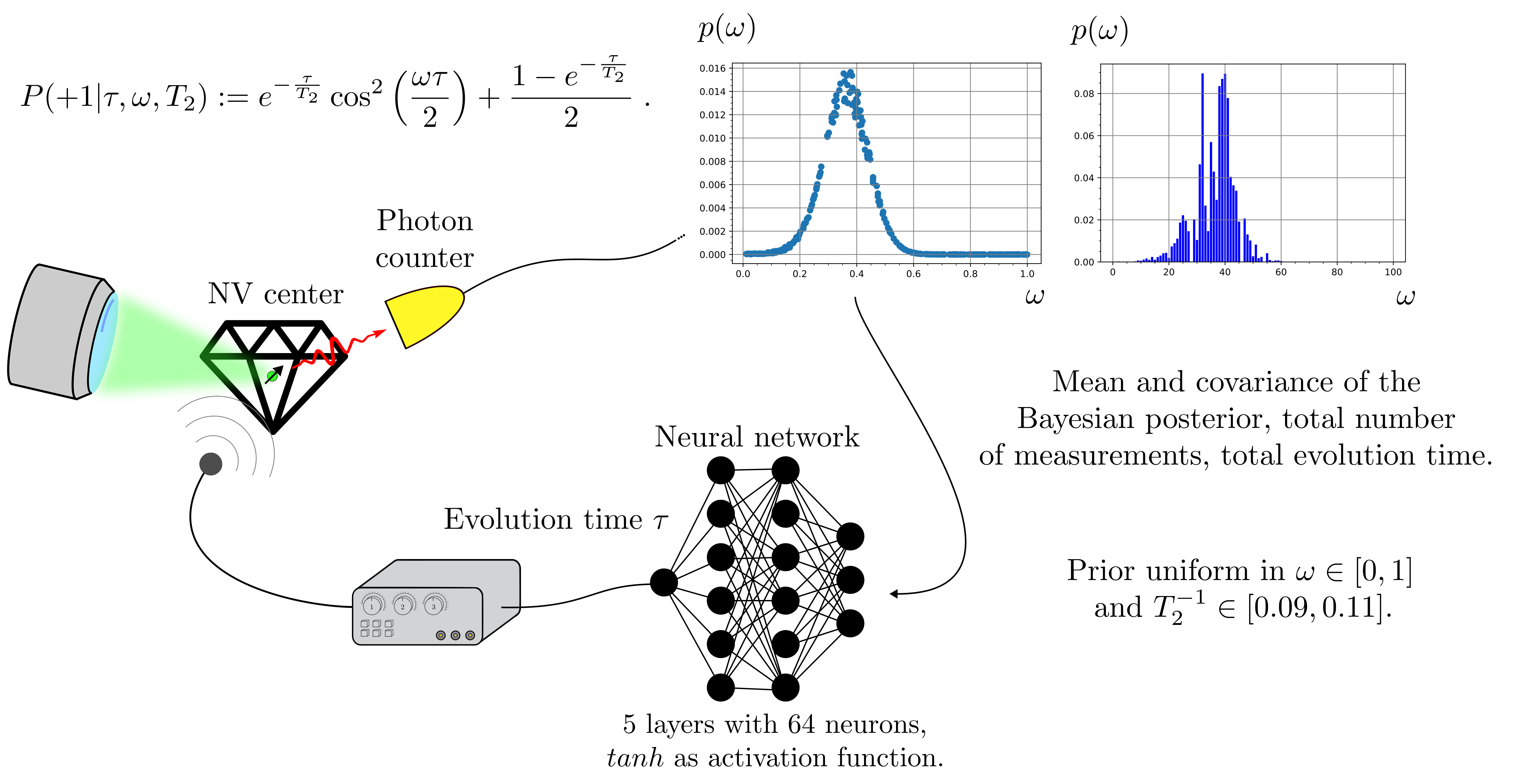

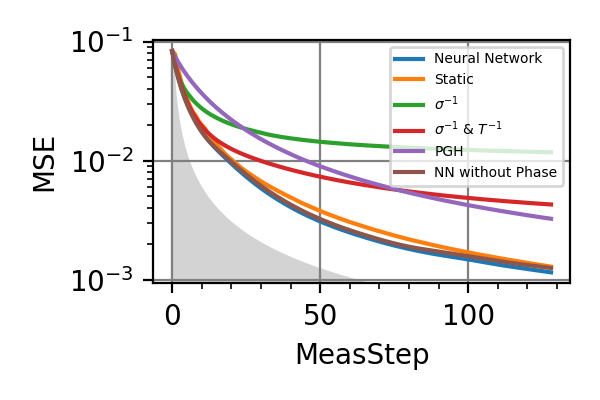

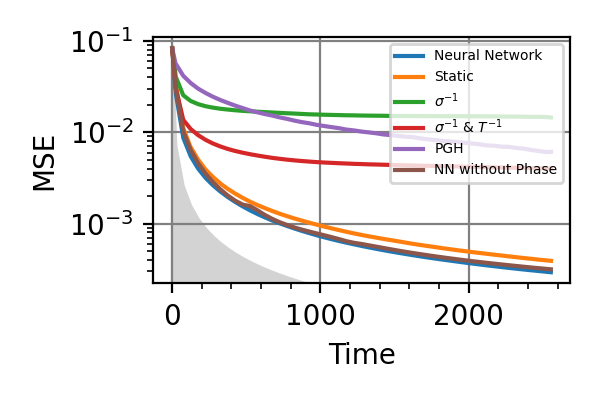

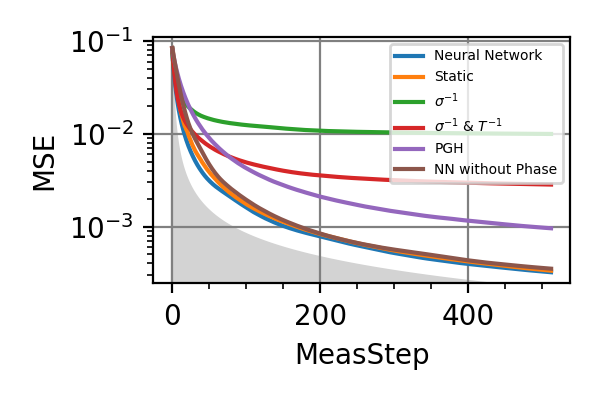

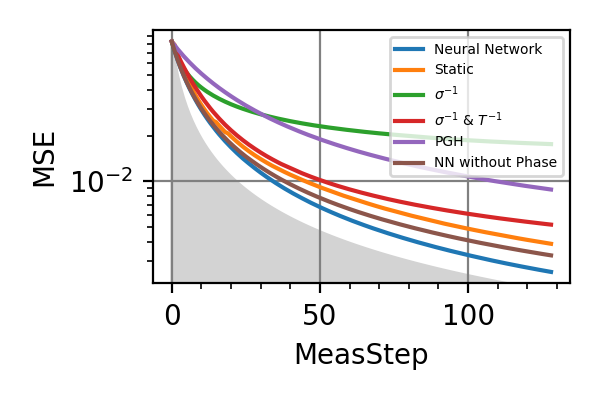

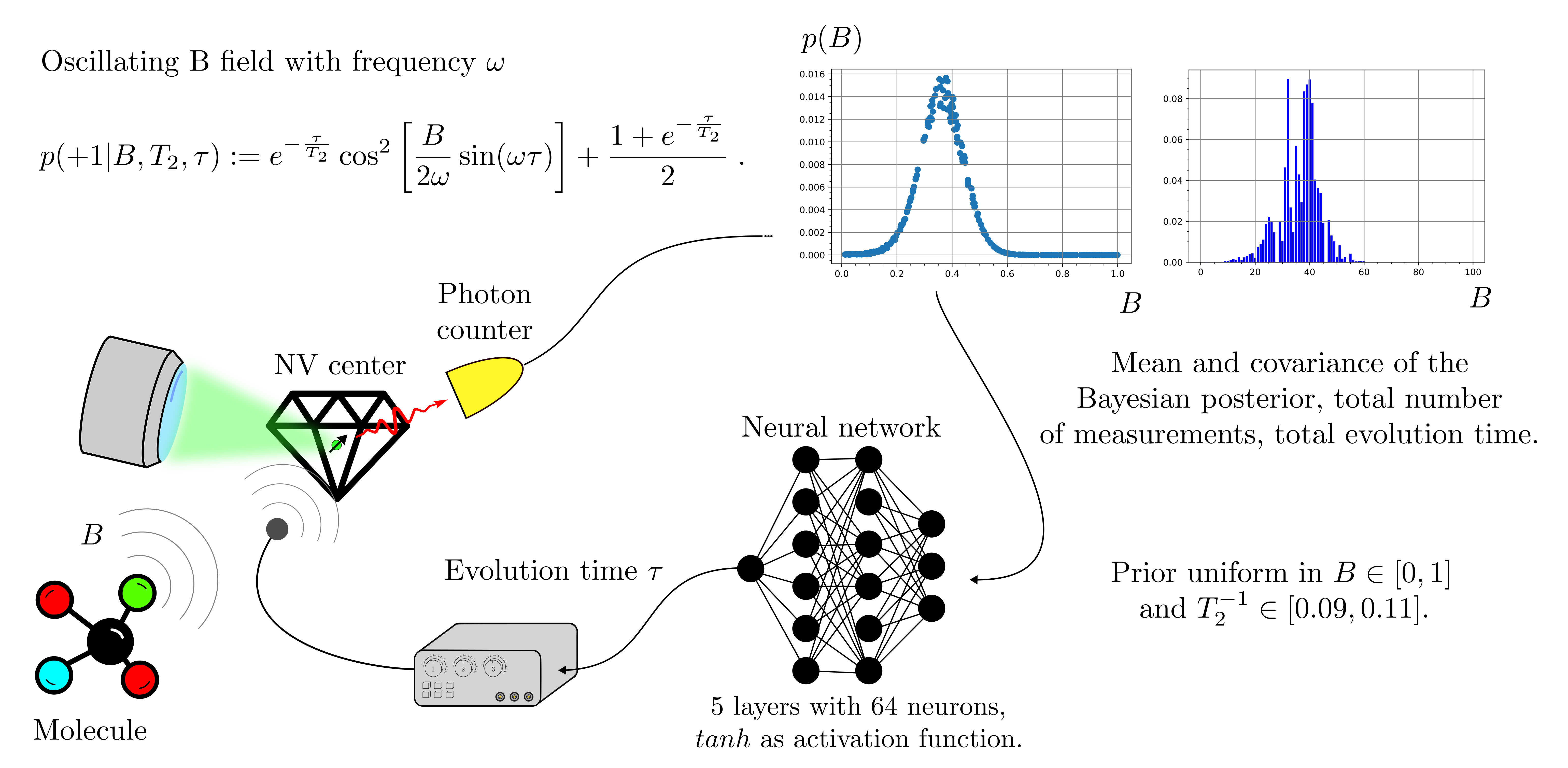

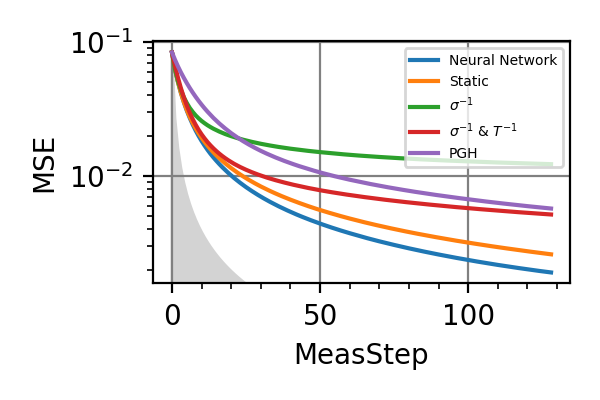

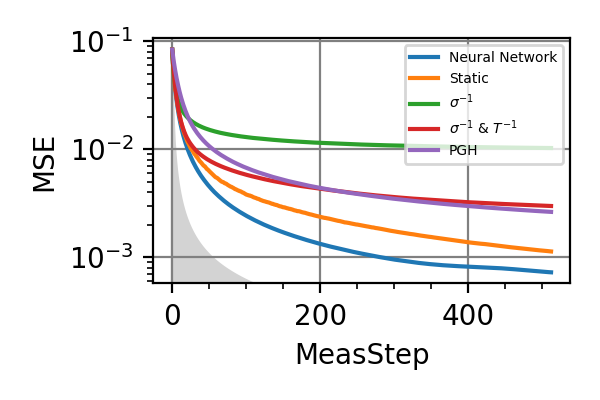

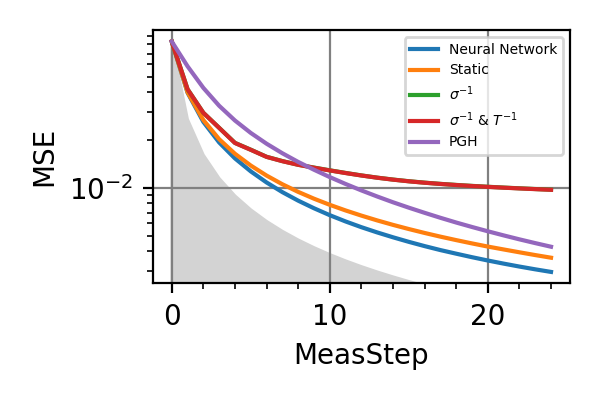

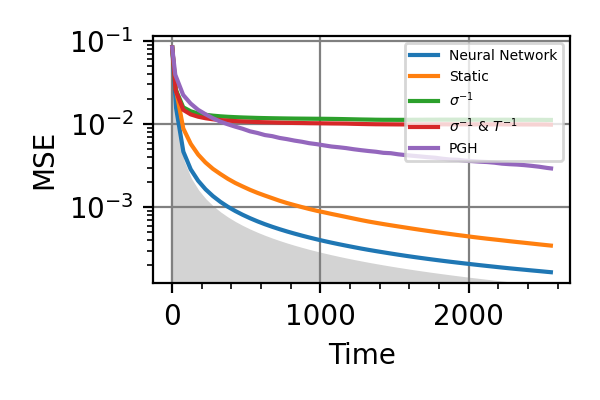

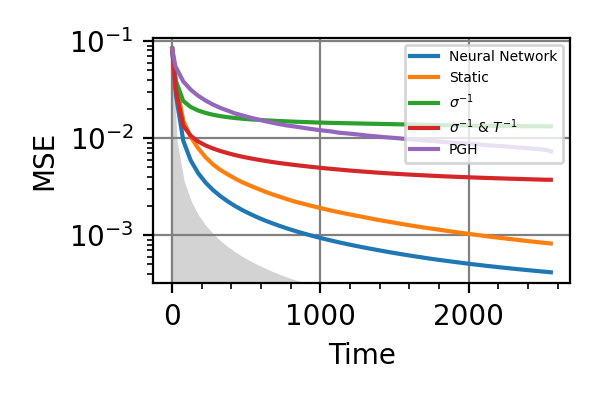

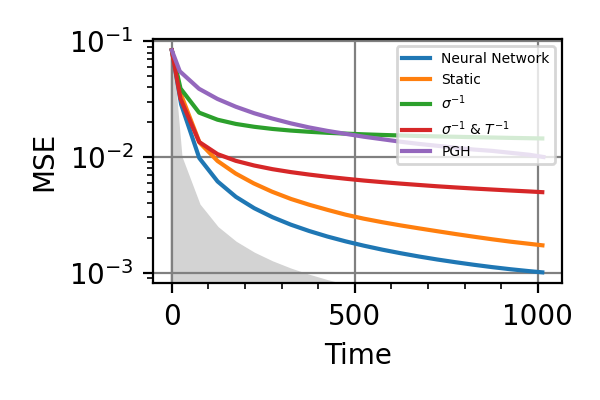

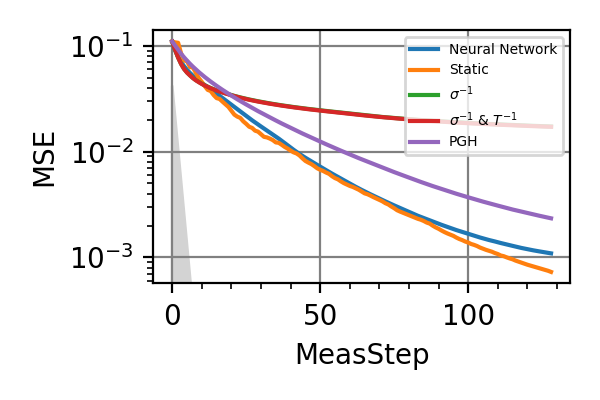

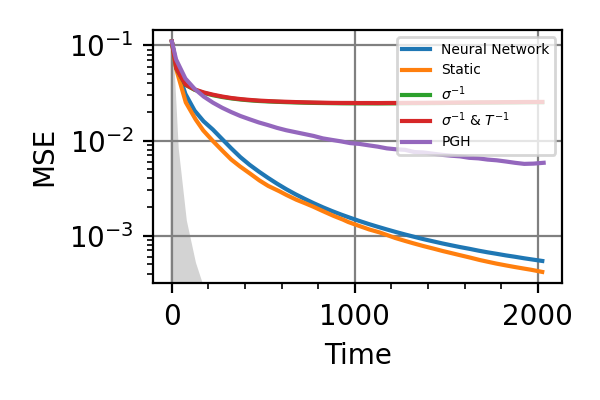

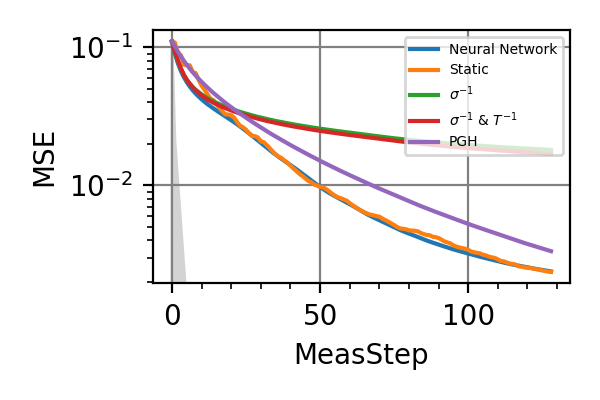

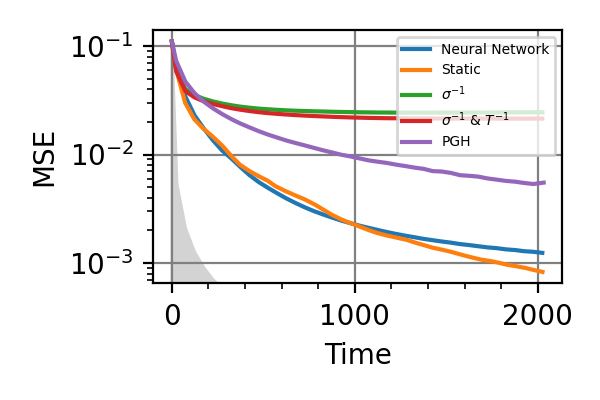

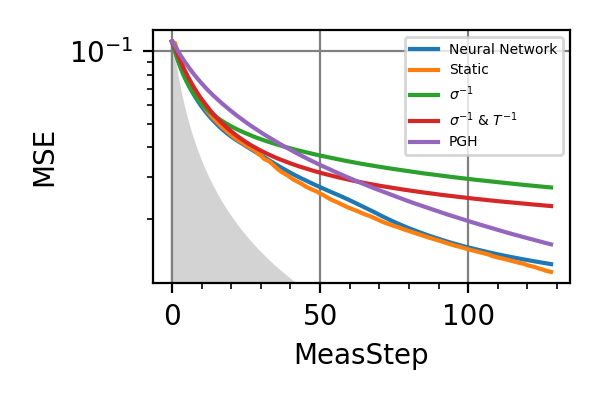

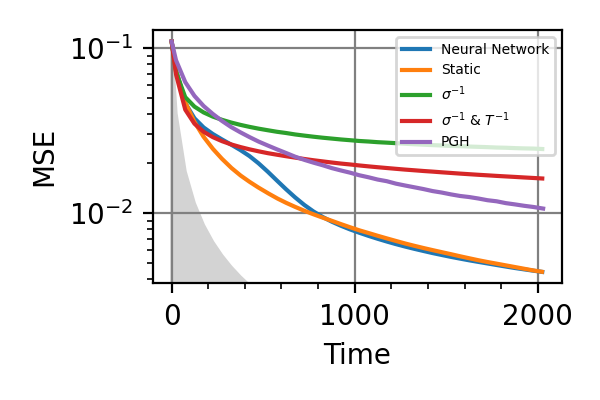

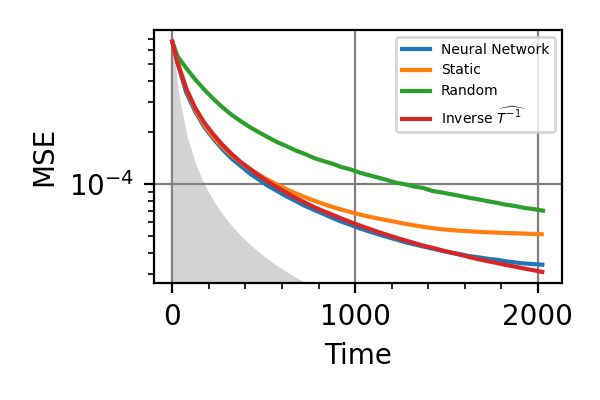

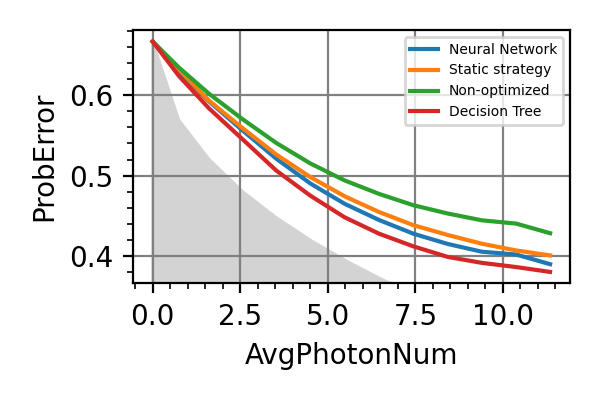

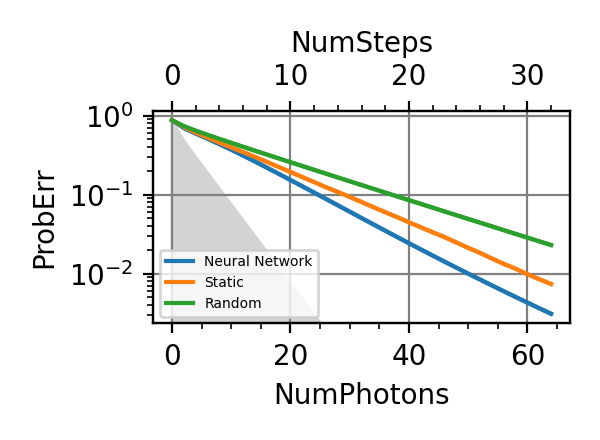

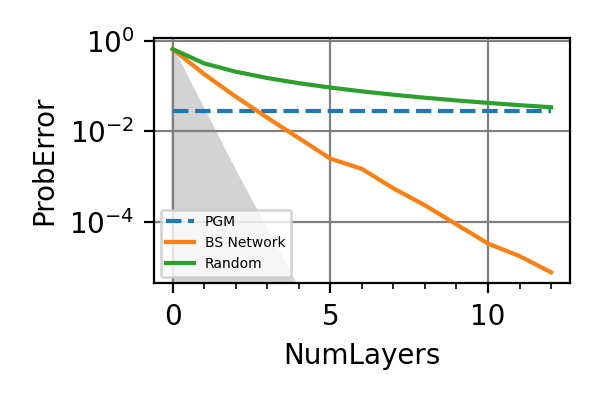

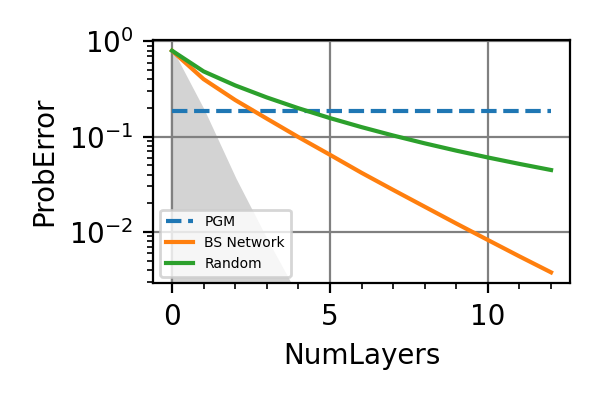

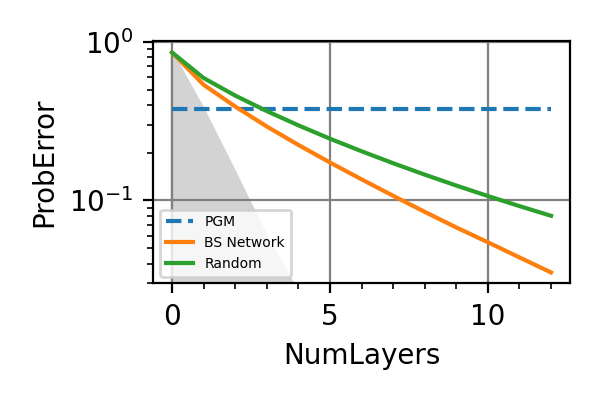

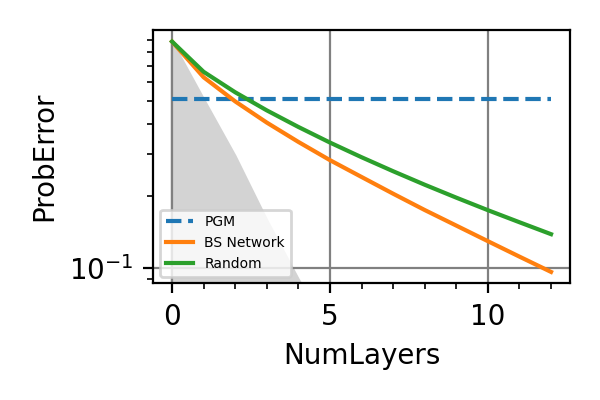

The performances of the optimized neural network and static strategies are reported in the following plot, together with other strategies commonly used in the literature. The resources can be the number of measurements or the total evolution time.

Fig. 1 invT2=0.01, Meas=128

Fig. 2 invT2=0.1, Meas=128

Fig. 3 invT2 in (0.09, 0.11), Meas=512

Fig. 4 invT2=0, Meas=24

Fig. 5 invT2=0.01, Time=2560

Fig. 6 invT2=0.1, Time=2560

Fig. 7 invT2 in (0.09, 0.11), Time=1024

Fig. 8 invT2=0, Time=128

For the plot referring to the estimation of omega only the green line is the best performance found in the literature so far, obtained in 1 with model-free RL. In the plot corresponding to the simultaneous estimation of both the frequency and the decoherence time we din’t compare our results with 1 and the green line in simply the

.

The shaded grey areas in the above plot indicate the Bayesian Cramér-Rao bound, which is the the ultimate precision bound computed from the Fisher information.

From the above plots we can conclude that the adaptive strategy offers no advantage with respect to the optimal static strategy for those experiments limited in the number of measurements and for all those with a sufficiently high coherence time. For

we see some small advantage of the adaptive strategy in the time limited experiments. This observation is in accordance with the analysis based only on the Fisher information. For

the Fisher information of a single measurement is

and is independent on

. For

the Fisher information manifests a dependence on

, which could indicate the possibility of an adaptive strategy being useful, which is confirmed in these simulations.

Notes

The results of the simulations with invT2 in (0.09, 0.11) are very similar to that with invT2=0.1 because of the relatively narrow prior on invT2.

For an NV center used as a magnetometer the actual decoherence model is given by

instead of

Achtung! The performances of the time limited estimations with the

and the PGH strategies are not in accordance with the results of Fiderer, Schuff and Braun 1. Also the simulations with invT2 in (0.09, 0.11) do not agree with the results reported in this paper.

Normally in the applications the meaningful resource is the total time required for the estimation. This doesn’t coincide however with the total evolution time that we considered, because the initialization of the NV center, the read-out the and the data processing all take time. This overhead time is proportional to the number of measurements, so that in a real experiment we expect the actual resource to be a combination of the evolution time and the number of measurements.

In a future work the role of the higher moments of the posterior distribution in the determination of the controls should be explored. In particular it should be understood if with

(61)

more advantage from the adaptivity could be extracted.

In room-temperature magnetometry it is impossible to do single-shot readout of the NV center state (SSR). We should train the control strategy to work with non-SSR, as done by Zohar et al 2

All the trainings of this module have been done on a GPU NVIDIA Tesla V100-SXM2-32GB, each requiring

hours.

- 2

I Zohar et al, Quantum Sci. Technol. 8 035017 (2023).

- parse_args()

Arguments

- scratch_dir: str

Directory in which the intermediate models should be saved alongside the loss history.

- trained_models_dir: str = “./nv_center_dc/trained_models”

Directory in which the finalized trained model should be saved.

- data_dir: str = “./nv_center_dc/data”

Directory containing the csv files produced by the

performance_evaluation()and thestore_input_control()functions.- prec: str = “float32”

Floating point precision of the whole simulation.

- n: int = 64

Number of neurons per layer in the neural network.

- num_particles: int = 480

Number of particles in the ensemble representing the posterior.

- iterations: int = 32768

Number of training steps.

- scatter_points: int = 32

Number of points in the Resources/Precision csv produced by

performance_evaluation().

- static_field_estimation(args, batchsize: int, max_res: float, learning_rate: float = 0.01, gradient_accumulation: int = 1, cumulative_loss: bool = False, log_loss: bool = False, res: Literal['meas', 'time'] = 'meas', invT2: Optional[float] = None, invT2_bound: Optional[Tuple] = None, cov_weight_matrix: Optional[List] = None, omega_bounds: Tuple[float, float] = (0.0, 1.0))

Simulates the Bayesian estimation of a static magnetic field with an NV center, for a neural network controlling the evolution time, a static strategy, two variations of the

strategy, and the particle guess heuristic (PGH).

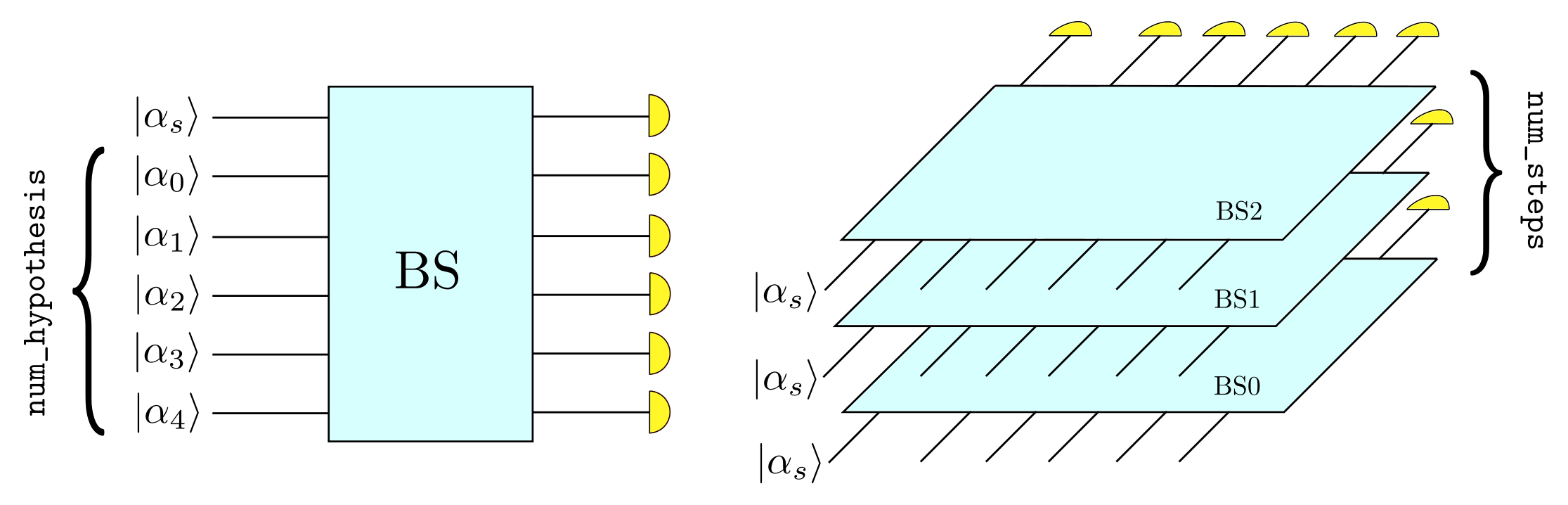

The measurement loop is showed in the following picture.

Parameters

- args:

Arguments passed to the Python script.

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- max_res: float

Maximum amount of resources allowed to be consumed in the estimation.

- learning_rate: float = 1e-2

Initial learning rate for the neural network. The initial learning rate of the static strategy is fixed to 1e-1. They both decay with

InverseSqrtDecay.- gradient_accumulation: int = 1

Flag of the

train()function.- cumulative_loss: bool = False

Flag

cumulative_loss.- log_loss: bool = False

Flag

log_loss.- res: {“meas”, “time”}

Type of resource for the simulation. It can be either the number of measurements or the total evolution time.

- invT2: float, optional

Inverse of the transversal relaxation time. If

or it is unknown and must be estimated, this parameter should not be passed.

- invT2_bound: Tuple[float, float], optional

Extrema of the uniform prior for the Bayesian estimation of the inverse of the transversal relaxation time.

- cov_weight_matrix: List, optional

Weight matrix for the mean square error on the frequency and on the inverse of the coherence time

.

- omega_bounds: Tuple[float, float] = (0.0, 1.0)

Extrema of admissible values for the precession frequency.

NV center - Phase control

- class NVCenterDCMagnPhase(batchsize: int, params: List[Parameter], prec: Literal['float64', 'float32'] = 'float64', res: Literal['meas', 'time'] = 'meas', invT2: Optional[float] = None)

Bases:

NVCenterModel describing the estimation of a static magnetic field with an NV center used as magnetometer. The transversal relaxation time

can be either known, or be a parameter to estimate. The estimation will be formulated in terms of the precession frequency

of the NV center, which is proportional to the magnetic field

. With respect to the

nv_center_dcmodule we add here the possibility of imprinting an arbitrary phase on the NV-center state before the photon counting measurement.Constructor of the

NVCenterDCMagnPhaseclass.Parameters

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- params: List[

Parameter] List of unknown parameters to estimate in the NV center experiment, with their respective bounds. This contains either the precession frequency only or the frequency and the inverse coherence time.

- prec{“float64”, “float32”}

Precision of the floating point operations in the simulation.

- res: {“meas”, “time”}

Resource type for the present metrological task, can be either the total evolution time, i.e. time, or the total number of measurements on the NV center, i.e. meas.

- invT2: float, optional

If this parameter is specified only the precession frequency

is considered as an unknown parameter, while the inverse of the transverse relaxation time

is fixed to the value invT2. In this case the list params must contain a single parameter, i.e. omega. If no invT2 is specified, it is assumed that it is an unknown parameter that will be estimated along the frequency in the Bayesian procedure. In this case params must contain two objects, the second of them should be the inverse of the transversal relaxation time.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, meas_step: Tensor, num_systems: int = 1) Tensor

Model for the outcome of a measurement on a NV center that has been precessing in a static magnetic field. The probability of getting the outcome

is

(62)

The evolution time

and the phase

are controlled by the trainable agent, and

is the unknown precession frequency, which is proportional to the magnetic field. The parameter

may or may not be an unknown in the estimation, according to the value of the attribute invT2 of the

NVCenterDCMagnPhaseclass.

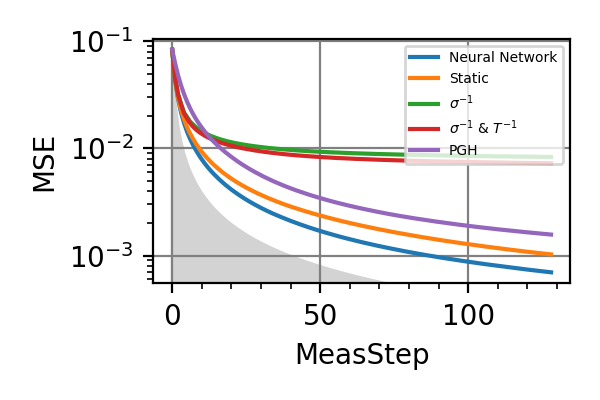

- main()

Runs the same simulations done by Fiderer, Schuff and Braun 1 but with the possibility of controlling the phase of the NV center before the Ramsey measurement.

The performances of the optimized neural network and the static strategy are reported in the following plots, together with other strategies commonly used in the literature. The resource can be either the total number of measurements or the total evolution time. Beside the NN optimized to control

and

, the performances of the NN trained to control only the evolution time

are reported in brown.

Fig. 9 invT2=0.1, Meas=128

Fig. 10 invT2=0.1, Time=2560

Fig. 11 invT2 in (0.09, 0.11), Meas=512

Fig. 12 invT2 in (0.09, 0.11), Time=1024

Fig. 13 invT2=0.2, Meas=128

Fig. 14 invT2=0.2, Time=2560

The shaded grey areas in the above plot indicate the Bayesian Cramér-Rao bound, which is the the ultimate precision bound computed from the Fisher information.

There is only a very small advantage in controlling the phase for

, if there is any at all. Similarly, with

no advantage has been found. For

and Time=2560 the phase control had converged to

, and more training could not take it out of this minimum. For

the advantage in introducing also the phase control becomes more consistent.

Notes

For the phase control be advantageous, the phase

must be known to some extent. While the error on

goes down, the evolution time increases, so that the error on

doesn’t go to zero. This limits the utility of controlling the phase in DC magnetometry.

All the training of this module have been done on a GPU NVIDIA Tesla V100-SXM2-32GB, each requiring

hours.

- parse_args()

Arguments

- scratch_dir: str

Directory in which the intermediate models should be saved alongside the loss history.

- trained_models_dir: str = “./nv_center_dc_phase/trained_models”

Directory in which the finalized trained model should be saved.

- data_dir: str = “./nv_center_dc_phase/data”

Directory containing the csv files produced by the

performance_evaluation()and thestore_input_control()functions.- prec: str = “float32”

Floating point precision of the whole simulation.

- n: int = 64

Number of neurons per layer in the neural network.

- num_particles: int = 480

Number of particles in the ensemble representing the posterior.

- iterations: int = 32768

Number of training steps.

- scatter_points: int = 32

Number of points in the Resources/Precision csv produced by

performance_evaluation().

- static_field_estimation(args, batchsize: int, max_res: float, learning_rate: float = 0.01, gradient_accumulation: int = 1, cumulative_loss: bool = False, log_loss: bool = False, res: Literal['meas', 'time'] = 'meas', invT2: Optional[float] = None, invT2_bound: Optional[Tuple] = None, cov_weight_matrix: Optional[List] = None, omega_bounds: Tuple[float, float] = (0.0, 1.0))

Simulates the Bayesian estimation of a static magnetic field with an NV center, for a neural network controlling the sensor, a static strategy, two variations of the

strategy, and the particle guess heuristic (PGH). Beside the evolution time also the phase is be controlled by the agent.

Parameters

- args:

Arguments passed to the Python script.

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- max_res: float

Maximum amount of resources allowed to be consumed in the estimation.

- learning_rate: float = 1e-2

Initial learning rate for the neural network. The initial learning rate of the static strategy is fixed to 1e-1. They both decay with

InverseSqrtDecay.- gradient_accumulation: int = 1

Flag of the

train()function.- cumulative_loss: bool = False

Flag

cumulative_loss.- log_loss: bool = False

Flag

log_loss.- res: {“meas”, “time”}

Type of resource for the simulation. It can be either the number of Ramsey measurements or the total evolution time.

- invT2: float, optional

Inverse of the transversal relaxation time. If

or it is unknown and must be estimated, this parameter should not be passed.

- invT2_bound: Tuple[float, float], optional

Extrema of the uniform prior for the Bayesian estimation of the inverse of the transversal relaxation time.

- cov_weight_matrix: List, optional

Weight matrix for the mean square error on the frequency and on the inverse of the coherence time

.

- omega_bounds: Tuple[float, float] = (0.0, 1.0)

Extrema of admissible values for the precession frequency.

NV center - AC Magnetometry

- class NVCenterACMagnetometry(batchsize: int, params: List[Parameter], omega: float = 0.2, prec: Literal['float64', 'float32'] = 'float64', res: Literal['meas', 'time'] = 'meas', invT2: Optional[float] = None)

Bases:

NVCenterThis model describes the estimation of the intensity of oscillating magnetic field of known frequency with an NV center used as magnetometer. The transversal relaxation time

can be either known, or be a parameter to estimate.

Constructor of the

NVCenterACMagnetometryclass.Parameters

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- params: List[

Parameter] List of unknown parameters to estimate in the NV center experiment, with their respective bounds. This contains either the field intensity

only or

and the inverse coherence time

.

- omega: float = 0.2

Frequency of the oscillating magnetic field to be estimated.

- prec{“float64”, “float32”}

Precision of the floating point operations in the simulation.

- res: {“meas”, “time”}

Resource type for the present metrological task, can be either the total evolution time, i.e. time, or the total number of measurements on the spin, i.e. meas.

- invT2: float, optional

If this parameter is specified the estimation is performed only for the field intensity

while the inverse of the transverse relaxation time

is fixed to the value invT2. In this case the list params must contain a single parameter. If no invT2 is specified, it is assumed that it is an unknown parameter that will be estimated along

by the Bayesian procedure. In this case params must contain two objects, the second of them should be the inverse of the transversal relaxation time.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, meas_step: Tensor, num_systems: int = 1)

Model for the outcome of a measurement on a spin that has been precessing in an oscillating magnetic field of intensity

and known frequency omega. The probability of getting the outcome

is

(63)

The evolution time

is controlled by the trainable agent, and

is the unknown magnetic field. The parameter

may or may not be an unknown in the estimation, according to the value of the attribute invT2 of the

NVCenterACMagnetometryclass.

- alternate_field_estimation(args, batchsize: int, max_res: float, learning_rate: float = 0.01, gradient_accumulation: int = 1, cumulative_loss: bool = False, log_loss: bool = False, res: Literal['meas', 'time'] = 'meas', omega: float = 0.2, invT2: Optional[float] = None, invT2_bound: Optional[Tuple] = None, cov_weight_matrix: Optional[List] = None, B_bounds: Tuple[float, float] = (0.0, 1.0))

Simulates the Bayesian estimation of the intensity of an oscillating magnetic field of known frequency with an NV center sensor, for a neural network controlling the evolution time, a static strategy, two variations of the

strategy, and the particle guess heuristic (PGH).

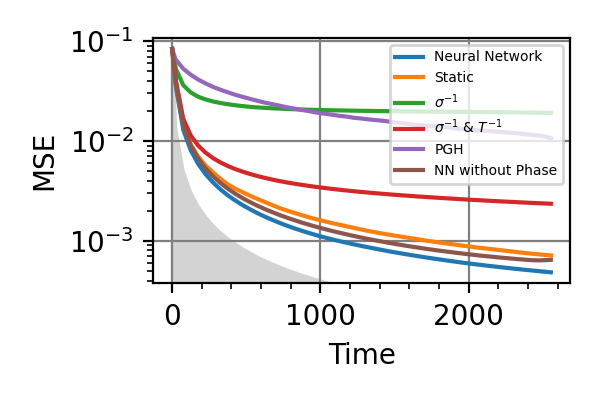

The measurement loop is showed in the following picture.

Parameters

- args:

Arguments passed to the Python script.

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- max_res: float

Maximum amount of resources allowed to be consumed in the estimation.

- learning_rate: float = 1e-2

Initial learning rate for the neural network. The initial learning rate of the static strategy is fixed to 1e-1. They both decay with

InverseSqrtDecay.- gradient_accumulation: int = 1

Flag of the

train()function.- cumulative_loss: bool = False

Flag

cumulative_loss.- log_loss: bool = False

Flag

log_loss.- res: {“meas”, “time”}

Type of resource for the simulation. It can be either the number of measurements or the total evolution time.

- omega: float = 0.2

Known frequency of the oscillating magnetic field.

- invT2: float, optional

Inverse of the transversal relaxation time. If it is infinity or it is unknown and must be estimated, this parameter should not be passed.

- invT2_bound: Tuple[float, float], optional

Extrema of admissible values of the inverse of the transversal relaxation time, whose precise value is not known before the estimation.

- cov_weight_matrix: List, optional

Weight matrix for the mean square error on the field intensity and on the decoherence parameter.

- B_bounds: Tuple[float, float] = (0.0, 1.0)

Extrema of admissible values for the intensity of the magnetic field.

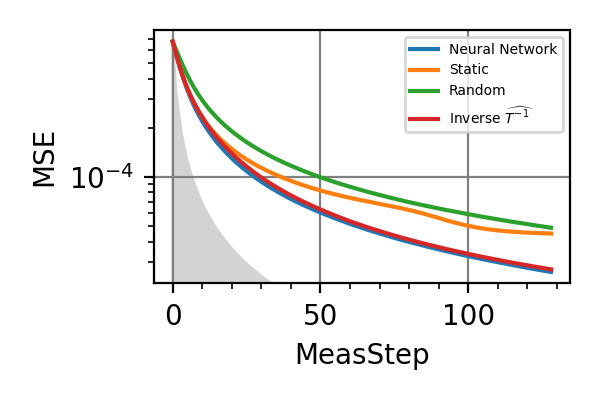

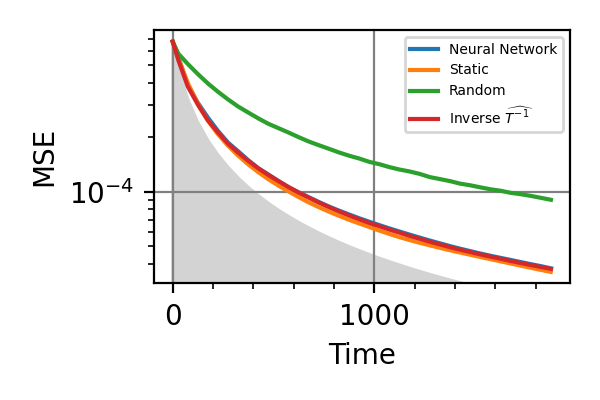

- main()

Runs the simulations for the estimation of the intensity of an oscillating magnetic field of known frequency, for the same parameters of the simulations done by Fiderer, Schuff and Braun 1,

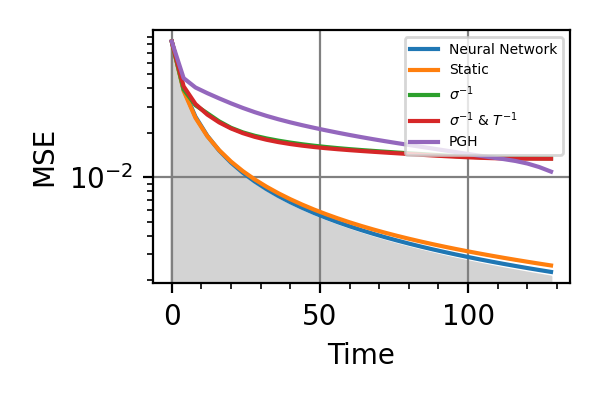

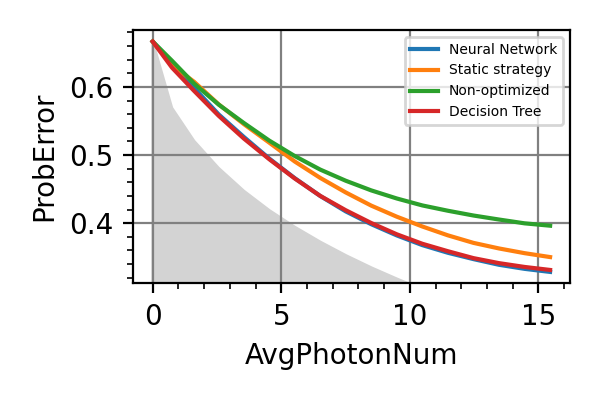

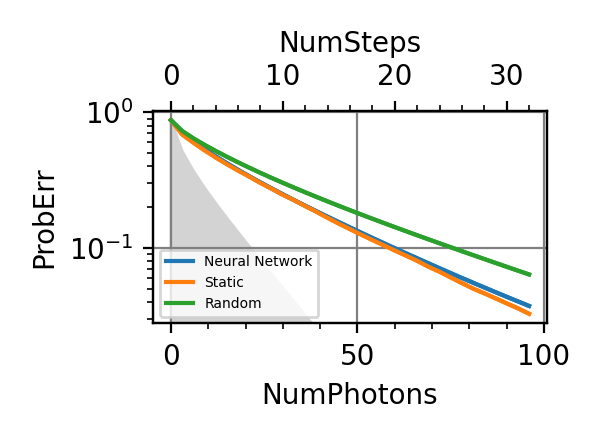

The performances of the optimized neural network and static strategies are reported in the following plots, together with the simulated

and PGH strategies, for a range of resources and coherence times. The resource can be either the total evolution time or the total number of measurements.

Fig. 15 invT2=0.01, Meas=128

Fig. 16 invT2=0.1, Meas=128

Fig. 17 invT2 in (0.09, 0.11), Meas=512

Fig. 18 invT2=0, Meas=24

Fig. 19 invT2=0.01, Time=2560

Fig. 20 invT2=0.1, Time=2560

Fig. 21 invT2 in (0.09, 0.11), Time=1024

Fig. 22 invT2=0, Time=128

The shaded grey areas in the above plot indicate the Bayesian Cramér-Rao bound, which is the the ultimate precision bound computed from the Fisher information.

In all simulations we see that an “adaptivity-gap” opens between the precision of the static and the NN strategies as the resources grow.

Notes

The model used in this example is formally equivalent to that of the

nv_center_dcmodule, where the adaptive strategy gives only small advantages, at difference with the results obtained hare for AC-magnetometry. This is because the AC model maps to the DC one in a very different region of parameters with respect to the region we have explored innv_center_dc.In AC magnetometry the technique of dynamical decoupling (DD) is often used to improve the sensitivity with respect to

and to increase the coherence time

. This consists in series of

-pulses that reverse the sign of the accumulated phase. Given

the times at which the instantaneous

-pulse are applied, the outcome probability for a noiseless estimation is given by

(64)

with

being the number of pulses, and

and

being respectively the initialization and the measurement times.

In the simulations done we have used no

-pulse, but optimizing their application is the natural extension of this example, left for future works. For controlling the pulses there are possibilities:

the interval between the pulses is fixed to

, which is produced by a NN together with the number of pulses N,

the controls are the

time intervals

. The number of pulses is fixed but they can be made ineffective with

,

the control is the free evolution time

together with a boolean variable, that tells whether a pulse or a measurement has to be applied after the free evolution. This would require a stateful model for the NV center, where the current phase is the state.

The problem of estimating

knowing the frequency is complementary to the protocol for the optimal discrimination of frequencies presented in 3, which is used to distinguish chemical species in a sample. In this work the authors put forward an optimal strategy for the discrimination of two frequencies

and

, knowing the intensity

. If

and the field intensity is unknown a two stage approach to the problem is possible. We can estimate

as done in this example, i.e. by considering the frequency fixed to

, and then proceed with the optimal frequency discrimination based on the intensity just estimated. The error probability of the second stage depends on the precision of the first. Given a fixed total time for the discrimination, the time assigned to the first and the second stage can be optimized to minimize the final error. For close frequencies we expect this two stage protocol to be close to optimality. A small improvement would be to simulate the estimation of

with the frequency as a nuisance parameters. It is important for the first stage, the estimation of the field intensity, to have low sensitivity to variations in the frequency. For optimizing frequency discrimination with

as a nuisance parameter in a fully integrated protocol the introduction of

-pulses is necessary, just like for dynamical decoupling. The natural extension of hypothesis testing on the frequency is the estimation of both the intensity and the frequency of the magnetic field optimally, both starting from a broad prior. All these improvements are left for future work.

All the training of this module have been done on a GPU NVIDIA Tesla V100-SXM2-32GB, each requiring

hours.

- 3

Schmitt, S., Gefen, T., Louzon, D. et al. npj Quantum Inf 7, 55 (2021).

- parse_args()

Arguments

- scratch_dir: str, required

Directory in which the intermediate models should be saved alongside the loss history.

- trained_models_dir: str = “./nv_center_ac/trained_models”

Directory in which the finalized trained model should be saved.

- data_dir: str = “./nv_center_ac/data”

Directory containing the csv files produced by the

performance_evaluation()and thestore_input_control()functions.- prec: str = “float32”

Floating point precision of the whole simulation.

- n: int = 64

Number of neurons per layer in the neural network.

- num_particles: int = 480

Number of particles in the ensemble representing the posterior.

- iterations: int = 32768

Number of training steps.

- scatter_points: int = 32

Number of points in the Resources/Precision csv produced by

performance_evaluation().

NV center - Hyperfine coupling

- class NVCenterHyperfine(batchsize: int, params: List[Parameter], prec: Literal['float64', 'float32'] = 'float64', res: Literal['meas', 'time'] = 'meas', invT2: float = 0.0)

Bases:

NVCenterThis class models the measurement of an NV center strongly coupled to a 13C nuclear spin in the diamond lattice. Such nuclear spin is not hidden in the spin bath of nuclei, that causes the dephasing noise, instead it splits the energy levels of the NV center, according to the hyperfine interaction strength. The precession frequency of the NV center in a magnetic field is determined by the state of the nuclear spin. In the work of T. Joas et al. 4 multiple incoherent nuclear spin flips happen during the read out, so that the nuclear spin is in each eigenstate approximately half of the time. This motivates the choice for the outcome probability of Ramsey measure on the NV center:

(65)

In such model

and

are the two precession frequencies of the sensor to be estimated, splitted by the hyperfine interaction,

is the coherence time,

and

are the controls, being respectively the evolution time and the phase.

Notes

This models is completely symmetric under permutation of the two precession frequencies. In these cases the flag

permutation_invariantmust be activated. Only those weight matrices that are permutationally invariant should be considered for the estimation.All the training of this module have been done on a GPU NVIDIA Tesla V100-SXM2-32GB, each requiring

hours.

- 4

Joas, T., Schmitt, S., Santagati, R. et al. npj Quantum Inf 7, 56 (2021).

Constructor of the

NVCenterHyperfineclass.Parameters

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- params: List[

Parameter] List of unknown parameters to estimate in the NV center experiment, with their respective bounds. These are the two precession frequencies

and

.

- prec{“float64”, “float32”}

Precision of the floating point operations in the simulation.

- res: {“meas”, “time”}

Resource type for the present metrological task, can be either the total evolution time, i.e. time, or the total number of measurements on the NV center, i.e. meas.

- invT2: float = 0.0

The inverse of the transverse relaxation time

is fixed to the value invT2.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, meas_step: Tensor, num_systems: int = 1) Tensor

Model for the outcome probability of the NV center subject to the hyperfine interaction with the carbon nucleus and measured through photoluminescence after a Ramsey sequence. The probability of getting +1 in the majority voting following the photon counting is given by Eq.65.

Notes

There is also another way of expressing the outcome probability of the measurement. Instead of defining the model in terms of

and

we could define it in terms of the frequency sum

and frequency difference

, thus writing

(66)

In this form there is no permutational invariance in

and

, instead the model is invariant under the transformation

. We should choose a prior having

, which means

. We could impose the positivity of the frequencies by requiring

in the prior.

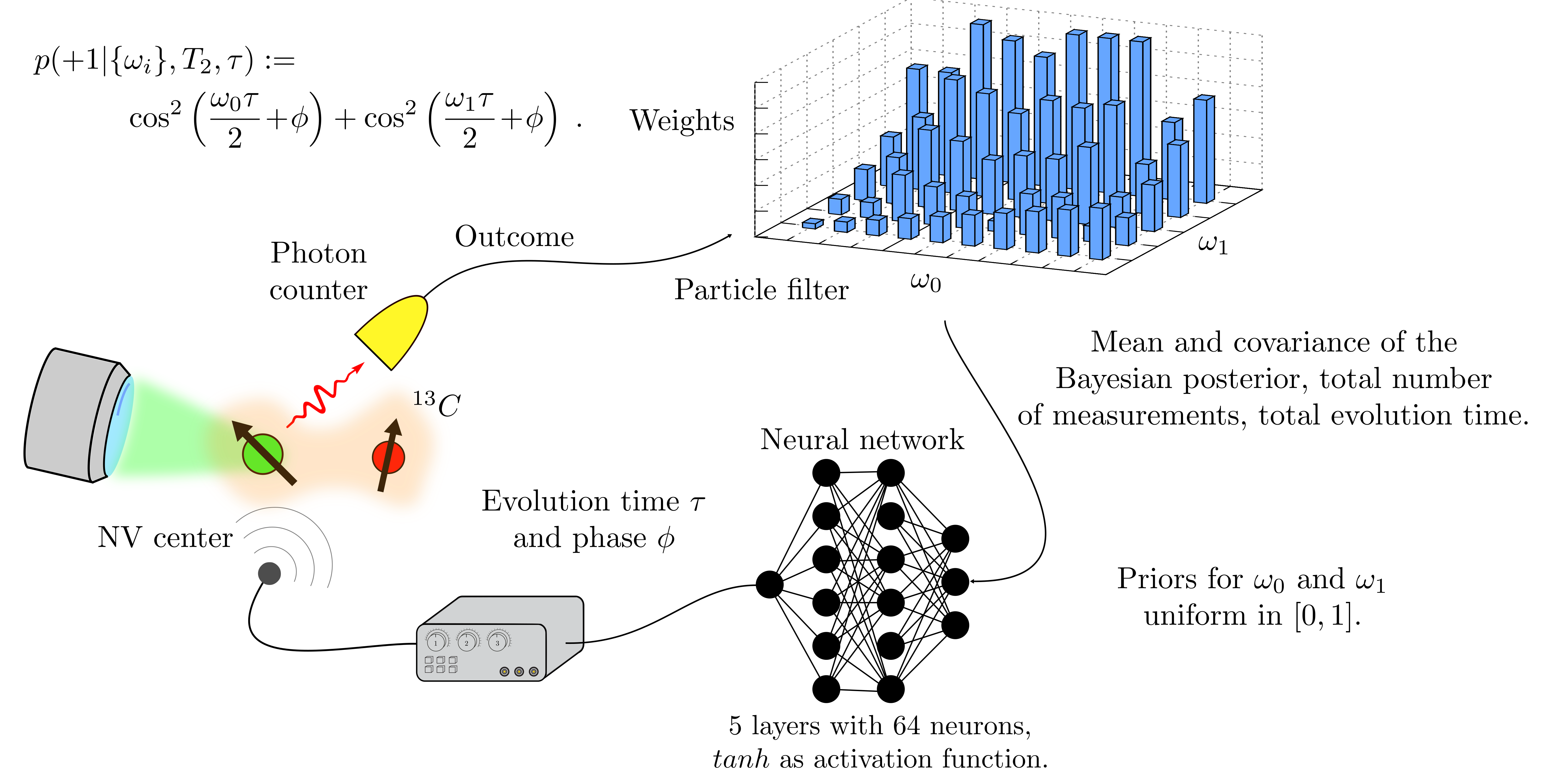

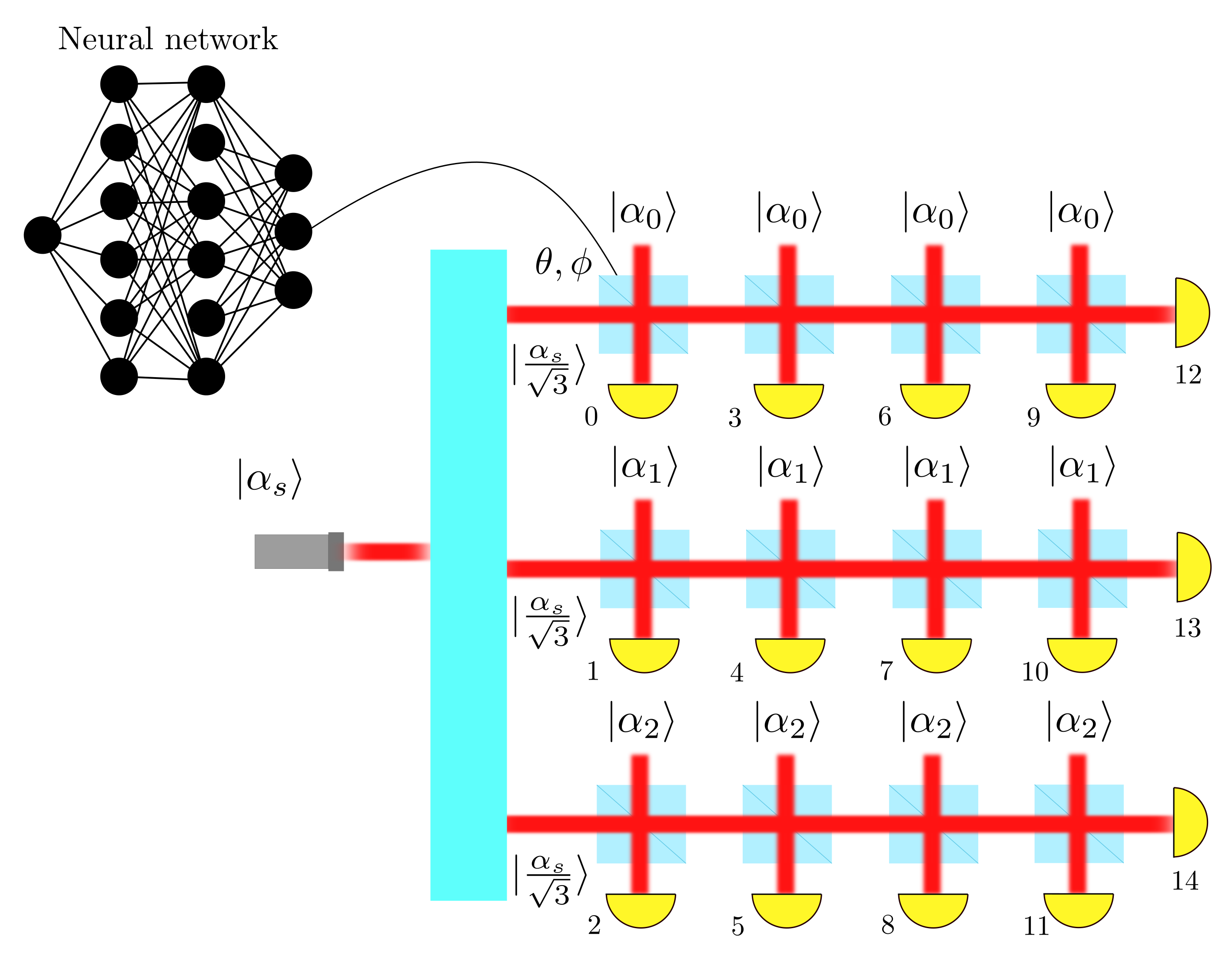

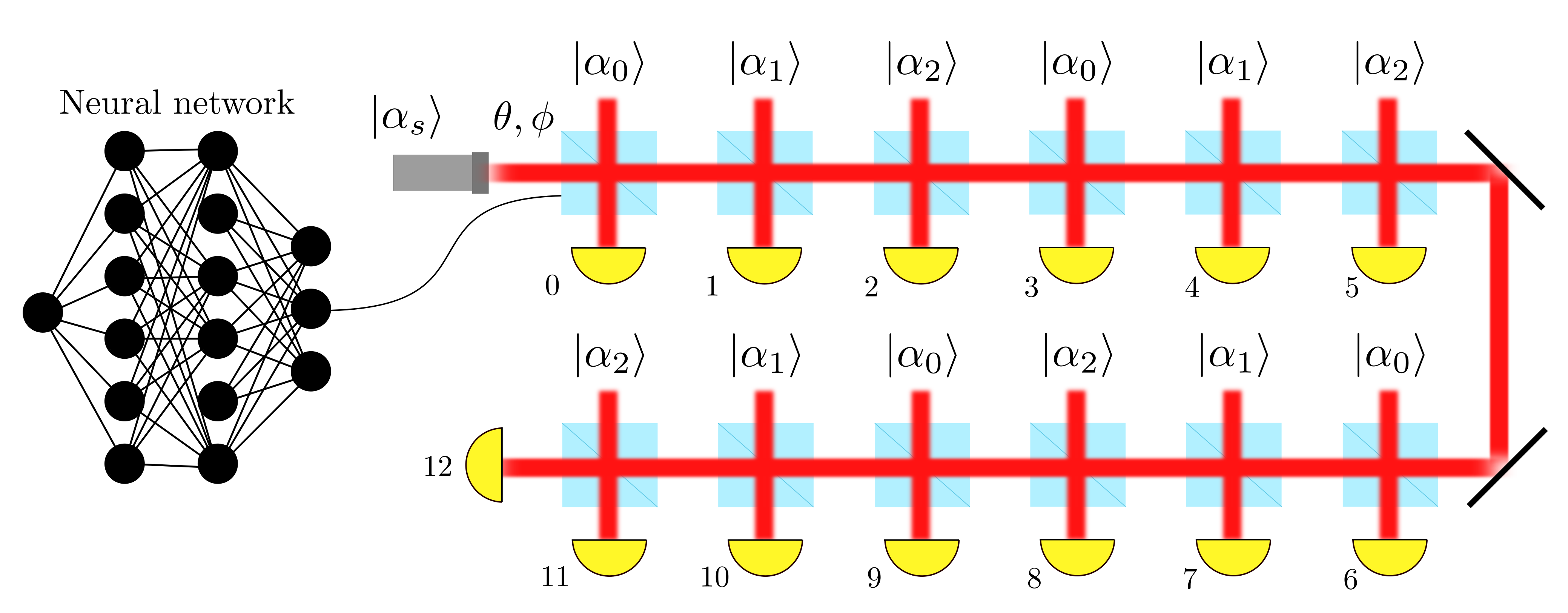

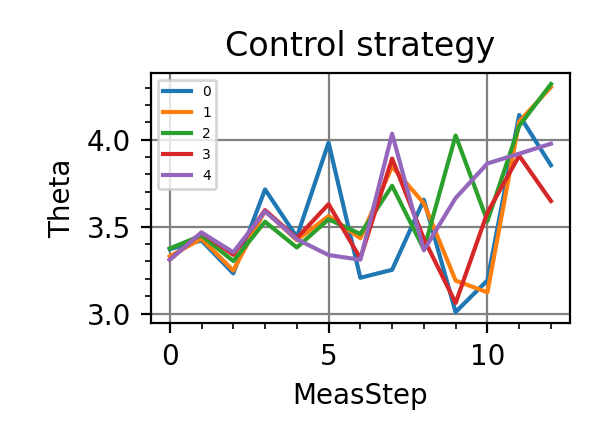

- hyperfine_parallel_estimation(args, batchsize: int, max_res: float, learning_rate: float = 0.01, gradient_accumulation: int = 1, cumulative_loss: bool = False, log_loss: bool = False, res: Literal['meas', 'time'] = 'meas', invT2: Optional[float] = None, cov_weight_matrix: Optional[List] = None, omega_bounds: Tuple[float, float] = (0.0, 1.0))

Estimation of the frequencies of two hyperfine lines in the spectrum of an NV center strongly interacting with a 13C nuclear spin. The mean square errors of the two parameters are weighted equally with

. The frequency difference is the component of the hyperfine interaction parallel to the NV center quantization axis, i.e.

. The priors for

and

are both uniform in (0, 1), and they are symmetrized. Beside the NN and the static strategies, the performances of the particle guess heuristic (PGH), and of the

strategies are reported in the plots. These other strategies are explained in the documentation of the

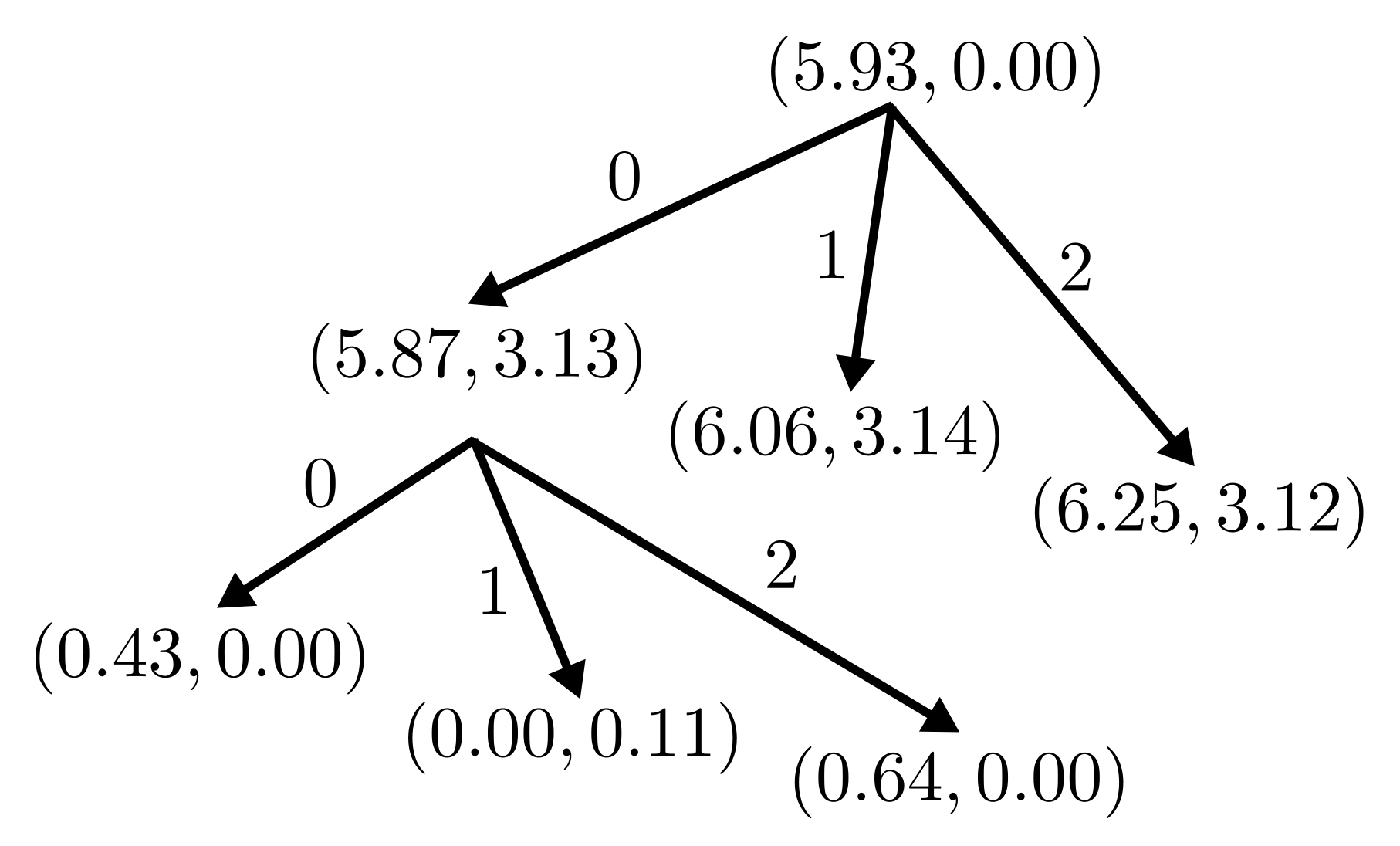

Magnetometryclass. The picture represent a schematic of the estimation of the hyperfine coupling controlled by the NN.

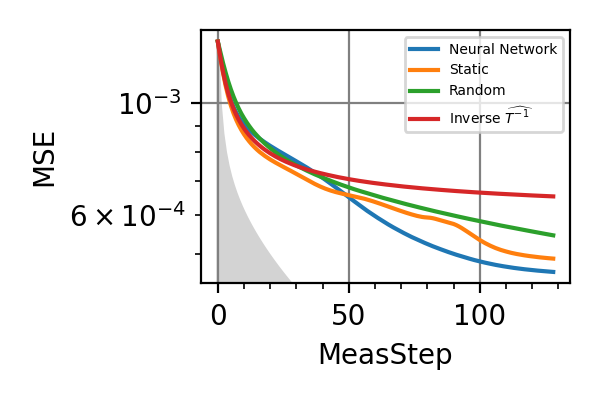

- main()

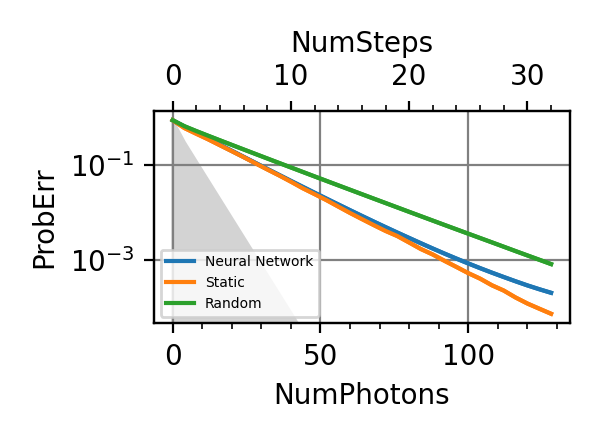

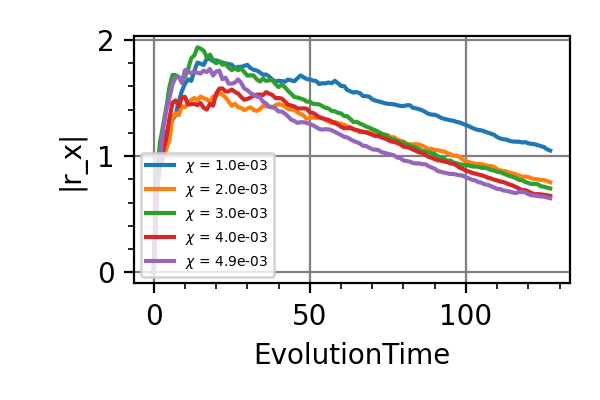

In the following we report the mean square error on the Bayesian estimators for the two frequencies for different strategies and different coherence times

. The resource is either the total evolution time or the number of measurements on the NV center.

Fig. 23 invT2=0, Meas=128

Fig. 24 invT2=0, Time=2048

Fig. 25 invT2=0.01, Meas=128

Fig. 26 invT2=0.01, Time=2048

Fig. 27 invT2=0.1, Meas=128

Fig. 28 invT2=0.1, Time=2048

The shaded grey areas in the above plot indicate the Bayesian Cramér-Rao bound, which is the the ultimate precision bound computed from the Fisher information.

From these simulations there seems to be no significant advantage in using an adaptive strategy for the simultaneous estimation of the two precession frequencies. Neither for large nor for small coherence times

.

Notes

In a future work the alternative model of Eq.66 should be implemented. Being interested in the difference

, the sum of the frequencies would be treated as a nuisance parameter.

The absence on an advantage of the adaptive strategy over the non-adaptive one is probably also due to the hyper-simplified information passed to the neural network. We are in fact approximating a complex 2D posterior, with many peaks and valleys with a Gaussian. A better approach would be to train an autoencoder to compress the information contained in the posterior and pass it to the NN. The autoencoder will be trained to compress that class of distribution which are produced by the likelihood of the double precession frequency model.

All the simulations of this module have been done on a GPU NVIDIA GeForce RTX-4090-24GB.

- parse_args()

Arguments

- scratch_dir: str

Directory in which the intermediate models should be saved alongside the loss history.

- trained_models_dir: str = “./nv_center_double/trained_models”

Directory in which the finalized trained model should be saved.

- data_dir: str = “./nv_center_double/data”

Directory containing the csv files produced by the

performance_evaluation()and thestore_input_control()functions.- prec: str = “float32”

Floating point precision of the whole simulation.

- n: int = 64

Number of neurons per layer in the neural network.

- num_particles: int = 4096

Number of particles in the ensemble representing the posterior.

- iterations: int = 4096

Number of training steps.

NV center - Decoherence

- class DecoherenceSimulation(particle_filter: ParticleFilter, phys_model: NVCenterDecoherence, control_strategy: Callable, simpars: SimulationParameters, cov_weight_matrix: Optional[List] = None, random: bool = False, median: bool = False, loss_normalization: bool = False)

Bases:

StatelessMetrologyThis class does the same job of

StatelessMetrology, but it can append a randomly generated control time to the input_strategy Tensor produced by thegenerate_input()method and it can compute the median square error (MedianSE) instead of the mean square error (MSE) in theloss_function()method.Parameters passed to the constructor of the

DecoherenceSimulationclass.Parameters

- particle_filter:

ParticleFilter Particle filter responsible for the update of the Bayesian posterior on the parameters.

- phys_model:

NVCenterDecoherence Abstract description of the NC center for the characterization of the dephasing noise.

- control_strategy: Callable

Callable object (normally a function or a lambda function) that computes the values of the controls for the next measurement from the Tensor input_strategy, which is produced by the method

generate_input().- simpars:

SimulationParameters Contains the flags and parameters that regulate the stopping condition of the measurement loop and modify the loss function used in the training.

- cov_weight_matrix: List, optional

Positive semidefinite weight matrix. If this parameter is not passed then the default weight matrix is the identity, i.e.

.

- random: bool = False

If this flag is True then a randomly chosen evolution time

is added to the input_strategy Tensor produced by

generate_input().- median: bool = False

If this flag is True, then instead of computing the mean square error (MSE) from a batch of simulation the median square error (MedianSE) is computed. See the documentation of the

loss_function()method.Achtung! The median can be used in the training, however it means throwing away all the simulations in the batch except to the one that realizes the median. This is not an efficient use of the simulations in the training. When used the median should be always confined to the performances evaluation, while the training is to be carried out with the mean square error.

- loss_normalization: bool = False

If loss_normalization is True, then the loss is divided by the normalization factor

(67)

where

are the bounds of the i-th parameter in phys_model.params and

are the diagonal entries of cov_weight_matrix.

is the total elapsed evolution time, which can be different for each estimation in the batch.

Achtung! This flag should be used only if the resource is the total estimation time.

- generate_input(weights: Tensor, particles: Tensor, meas_step: Tensor, used_resources: Tensor, rangen: Generator)

This method returns the same input_strategy Tensor of the

generate_input()method, but, if the attribute random is True, an extra column is appended to this Tensor containing the randomly generated evolution time. The value of

is uniformly extracted in the interval of the admissible values for the inverse of the coherence time

.

- loss_function(weights: Tensor, particles: Tensor, true_values: Tensor, used_resources: Tensor, meas_step: Tensor)

Computes the loss for each estimation in the batch.

If median is False, then it returns the same loss of the

loss_functionmethod, i.e. the square error for each simulation in the batch, called, where

runs from

to

, with

the batchsize. If the flag median is True, this method returns the median square error of the batch, in symbols

. In order to compute it, the vector of losses

is sorted, the element at position

is then the median of the losses in the batch. Calling

the position in the unsorted batch of the simulation realizing the median, the outcome of this method is the vector

(68)

The advantage of using the median error is the reduced sensitivity to outliers with respect to the mean erro.

Achtung! Even if the performances evaluation can be performed with the median square error, the training should always minimize the mean square error.

- particle_filter:

- class NVCenterDecoherence(batchsize: int, params: List[Parameter], prec: Literal['float64', 'float32'] = 'float64', res: Literal['meas', 'time'] = 'meas')

Bases:

NVCenterModel describing an NV center subject to a variable amount of decoherence. The dephasing rate encodes some useful information about the environment, which can be recovered by estimating the transverse relaxation time and the decaying exponent.

Constructor of the

NVCenterDecoherenceclass.Parameters

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- params: List[

Parameter] List of unknown parameters to estimate in the NV center experiment, with their priors. It should contain the inverse of the characteristic time of the dephasing process, indicated with the symbol

and the exponent

of the decay. This last parameter carries information on the spectral density of the noise.

- prec{“float64”, “float32”}

Precision of the floating point operations in the simulation.

- res: {“meas”, “time”}

Resource type for the present metrological task. It can be either the total evolution time, i.e. time, or the total number of measurements on the NV center, i.e. meas.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, meas_step: Tensor, num_systems: int = 1)

Model for the outcome of a photon counting measurement following a Ramsey sequence on the NV center sensor subject to dephasing noise. The probability of getting the outcome

is

(69)

The evolution time

is controlled by the trainable agent and

and

are the two unknown characteristic parameters of the noise. The factor 20 appearing at the exponent is just the normalization factor we used for

.

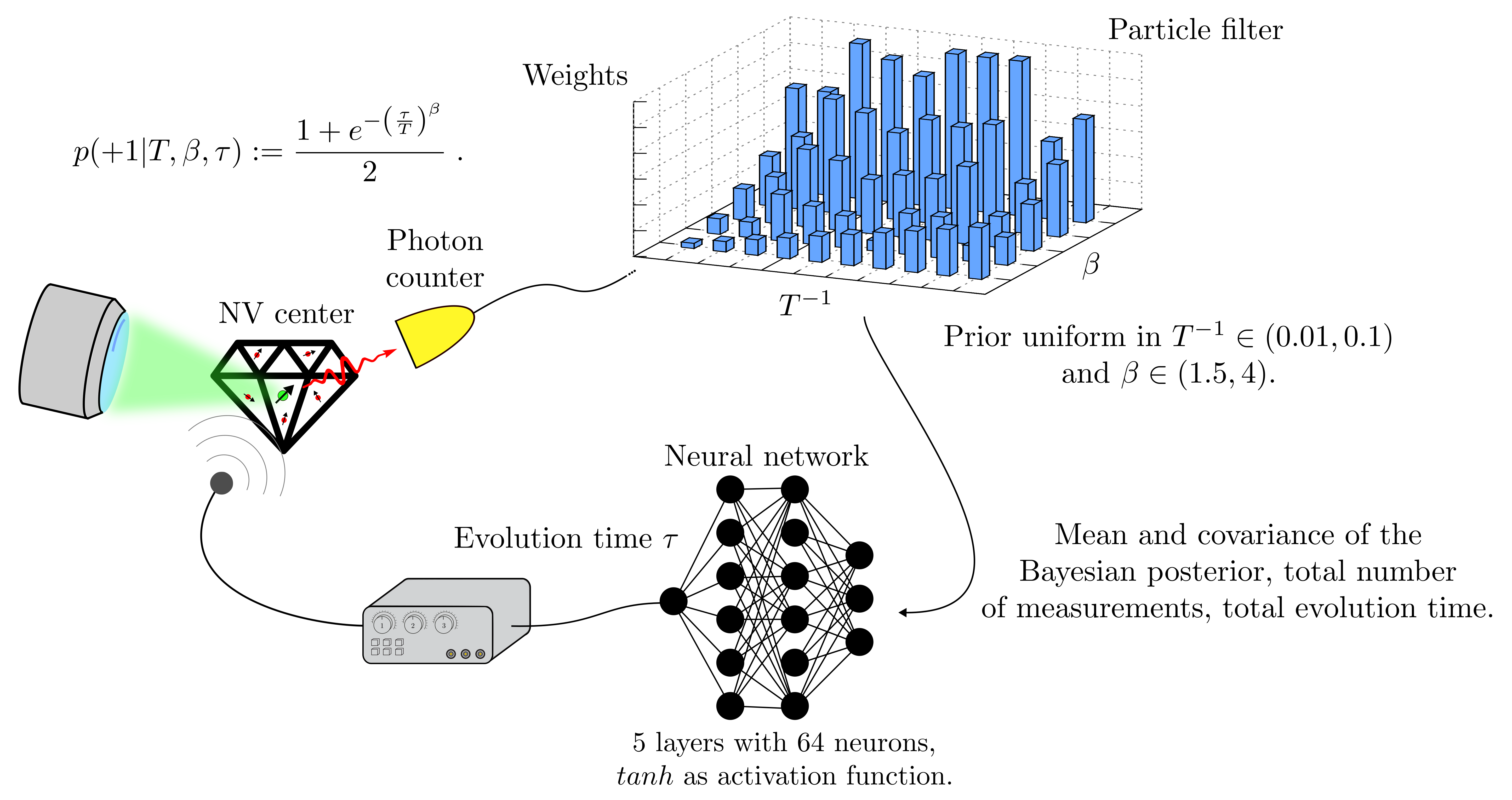

- decoherence_estimation(args, batchsize: int, max_res: float, learning_rate: float = 0.001, gradient_accumulation: int = 1, cumulative_loss: bool = True, log_loss: bool = False, res: Literal['meas', 'time'] = 'meas', loss_normalization: bool = False, beta_nuisance: bool = False, fixed_beta: bool = False)

Simulates the Bayesian estimation of the decoherence parameters of a dephasing noise acting on an NV center. The control parameter is the free evolution time of the system during the Ramsey sequence. We have implement four strategies for the control of the evolution time:

Adaptive strategy with a trained neural network on the basis of the state of the particle filter (adaptive strategy).

Static strategy implemented with a neural network that receives in input the amount of already consumed resources (time or measurements)

Random strategy, with an evolution time chosen in the admissible interval for the values of

.

Inverse time strategy. The evolution time is is

for the measurement-limited estimation and

for the time-limited estimations, where

and

. These numerical coefficients come from the optimization of the Fisher information. For the simulations where

is a nuisance parameter

is used also for the time-limited estimation.

This function trains the static and the adaptive strategies, and evaluates the error for all these four possibilities, in this order.

Achtung! In this example the static strategy is not implemented as a Tensor of controls for each individual measurement, like in the other examples. On the contrary it is implemented as a neural network that takes in input only the value of the consumed resources up to that point.

The measurement cycle is represented in the picture below.

Parameters

- args:

Arguments passed to the Python script.

- batchsize: int

Batchsize of the simulation, i.e. number of estimations executed simultaneously.

- max_res: float

Maximum amount of resources allowed to be consumed in the estimation.

- learning_rate: float = 1e-3

Initial learning rate for the neural networks, for both the adaptive and static strategies. The learning rate decays with

InverseSqrtDecay.- gradient_accumulation: int = 1

Flag of the

train()function.- cumulative_loss: bool = False

Flag

cumulative_loss.- log_loss: bool = False

Flag

log_loss.- res: {“meas”, “time”}

Type of resource for the simulation. It can be either the number of measurements or the total evolution time.

- loss_normalization: bool = False

Parameter loss_normalization passed to the constructor of the

NVCenterDecoherenceclass.- beta_nuisance: bool = False

If this flag is True, the loss is computed only from the error on the inverse decay time

.

- fixed_beta: bool = False

If this flag is True the decay exponent is set to

.

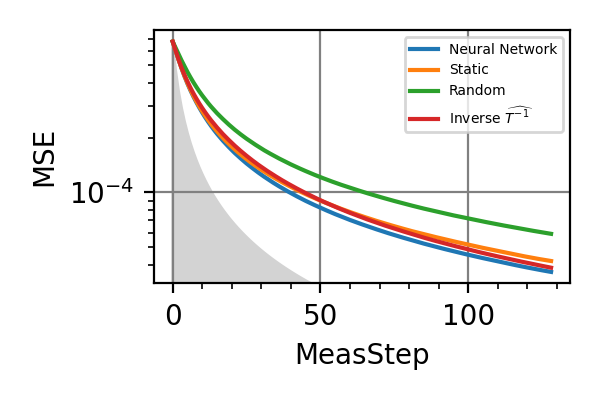

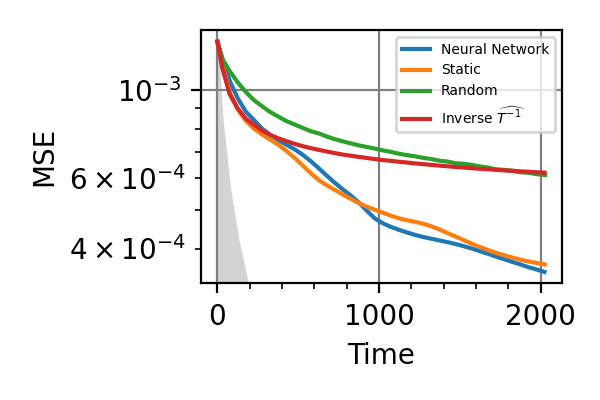

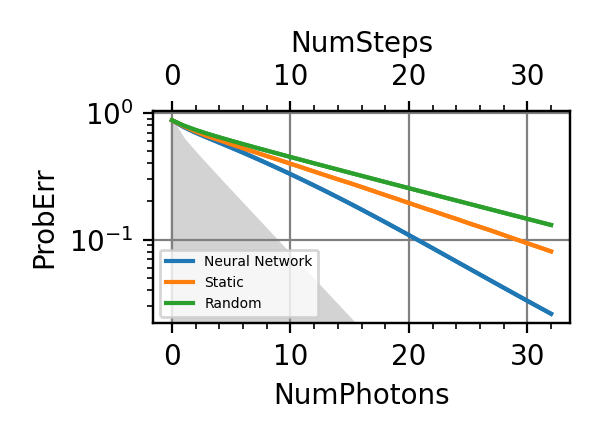

- main()

In this example we characterize the dephasing process of an NV center. We study three cases. In the first one the decaying exponent

is unknown and it is treated as a nuisance parameter. Therefore, only the precision on the inverse decay time appears in the loss (the MSE). In the second case

is known and fixed (

), and only

is estimated. In the third case both

and

are unknown and are parameters of interest, but their MSE are not evenly weighted: the error

weights half of

. This is done t equilibrate the different priors on the parameters and consider them on an equal footage.

The prior on

is uniform in (0.01, 0.1), and that of

is uniform in (0.075, 0.2) (when it is not fixed), so that the interval for the actual exponent, which is

(see

model()) is (1.5, 4) 5.In the following we report the estimator error (MSE) for a bounded number of measurement (on the left), and for a bounded total evolution time (on the right). These simulations are done by calling the function

decoherence_estimation().The adaptive and static strategies are compared with two simple choices for the controls. The first is a randomize strategy, according to which the inverse of the evolution time is randomly selected uniformly in (0.01, 0.1), while the second is a

strategy, according to which the inverse of the evolution time is chosen to be the current estimator for the inverse of the coherence time.

Fig. 29 Meas=128, beta nuisance

Fig. 30 Time=2048, beta nuisance

Fig. 31 Meas=128, fixed beta

Fig. 32 Time=2048, fixed beta

Fig. 33 Meas=128, both parameters

Fig. 34 Time=2048, both parameters

The shaded grey areas in the above plot indicate the Bayesian Cramér-Rao bound, which is the the ultimate precision bound computed from the Fisher information.

There is no advantage in using a NN instead of the strategies that optimize the Fisher information.

Notes

Some application of the estimation of the decoherence time involve the measure of the radical concentration in a biological sample and the measurement of the local conductivity through the Johnson noise.

All the training of this module have been done on a GPU NVIDIA Tesla V100-SXM2-32GB, each requiring

hours.

- parse_args()

Arguments

- scratch_dir: str, required

Directory in which the intermediate models should be saved alongside the loss history.

- trained_models_dir: str = “./nv_center_decoherence/trained_models”

Directory in which the finalized trained model should be saved.

- data_dir: str = “./nv_center_decoherence/data”

Directory containing the csv files produced by the

performance_evaluation()and thestore_input_control()functions.- prec: str = “float32”

Floating point precision of the whole simulation.

- n: int = 64

Number of neurons per layer in the neural network.

- num_particles: int = 2048

Number of particles in the ensemble representing the posterior.

- iterations: int = 4096

Number of training steps.

- scatter_points: int = 32

Number of points in the Resources/Precision csv produced by

performance_evaluation().

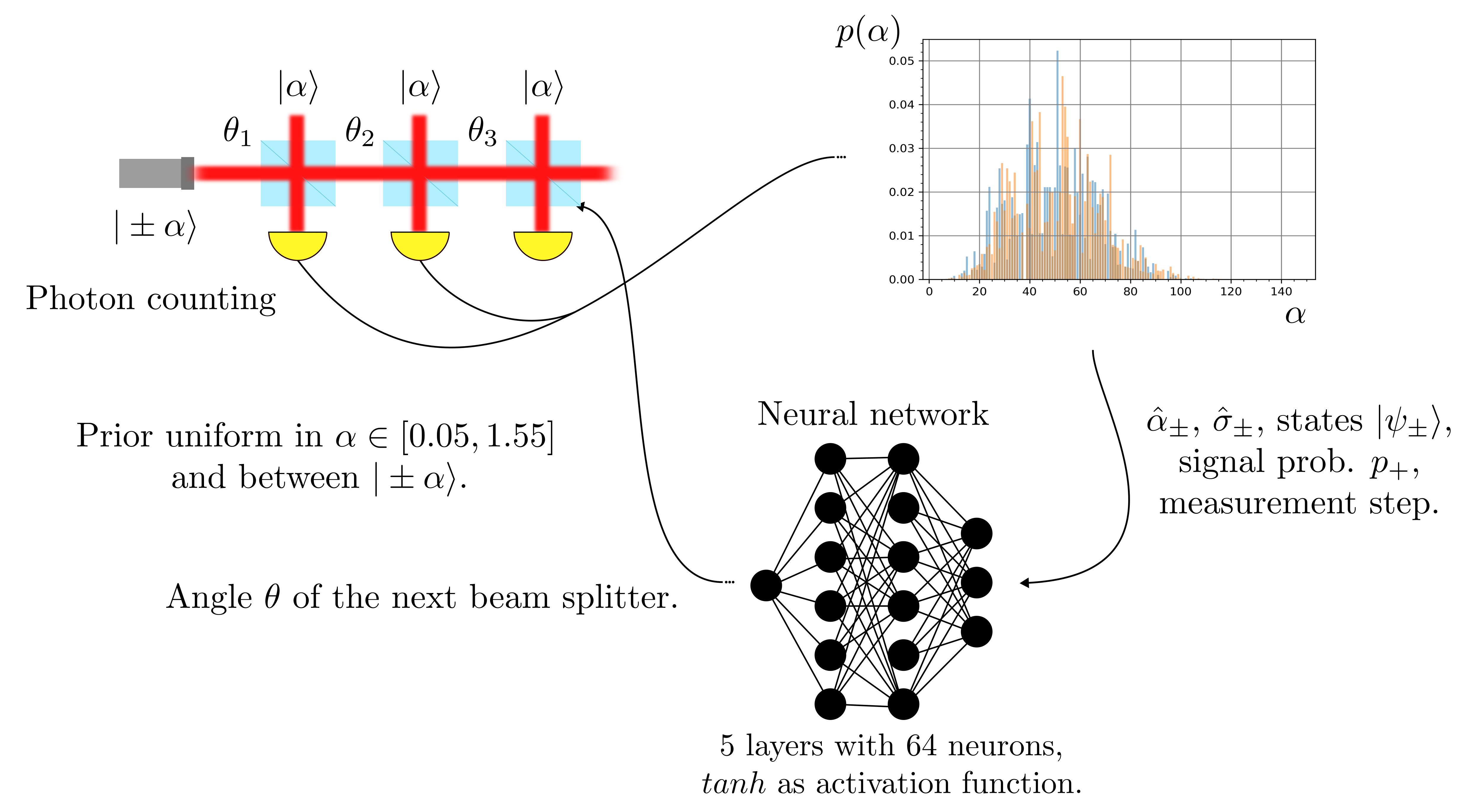

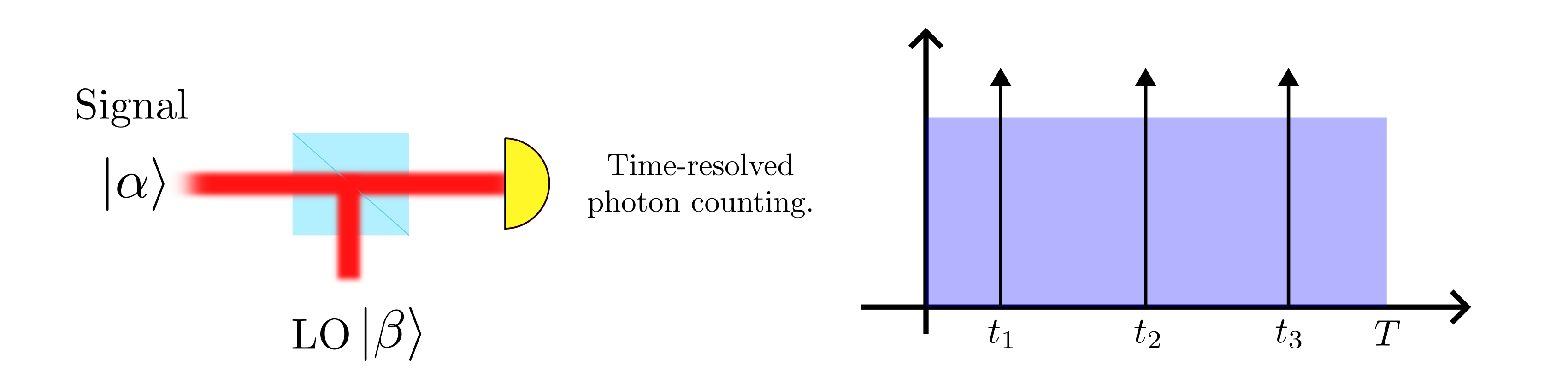

Dolinar (Dolan) receiver

- class AgnosticDolinar(batchsize: int, params: List[Parameter], n: int, resources: str = 'alpha', prec: str = 'float64')

Bases:

StatefulPhysicalModelSchematization of the agnostic Dolinar receiver 6.

The task is to discriminate between

and

, given a single copy of

and

copies of

, that have been prepared in another lab. At difference with the usual Dolinar receiver the intensity of the signal

is not known, but can be estimated from

to adapt the strategy. The physical motivations behind this model is that of a series of wires, with only one carrying the signal, subject to a loss noise of fluctuating intensity.

- 6

F. Zoratti, N. Dalla Pozza, M. Fanizza, and V. Giovannetti, Phys. Rev. A 104, 042606 (2021).

Constructor of the

AgnosticDolinarclass.Parameters

- batchsize: int

Number of agnostic Dolinar receivers simulated at the same time in a mini-batch.

- params: List[

Parameter] List of parameters. It must contain the continuous parameter alpha with its admissible range of values, and the discrete sign parameter, that can take the values (-1, +1).

- n: int

Number of states

that the agnostic Dolinar receiver consumes to make a decision on the sign of the sign of the signal.

Achtung! Note that in the simulation n+1 measurement are actually performed, because both outputs of the last beam splitter are measured.

- resources: {“alpha”, “step”}

Resources of the model, can be the amplitude

of the signal or the number of state

consumed in the procedure.

- prec: str = “float64”

Floating point precision of the model.

- count_resources(resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, state: Tensor, meas_step: Tensor) Tensor

The resources can be either the number of states

consumed, which is update after every beam splitter, or the amplitude

, that is related to the mean number of photons in the signal.

- initialize_state(parameters: Tensor, num_systems: int) Tensor

State initialization for the agnostic Dolinar receiver. The state contains the amplitude of the remaining signal after the measurements (for both hypothesis) and the total number of measured photons.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, state: Tensor, meas_step: Tensor, num_systems: int = 1)

Probability of observing the number of photons outcomes in a photon counter measurement in the agnostic Dolinar receiver.

- perform_measurement(controls: Tensor, parameters: Tensor, true_state: Tensor, meas_step: Tensor, rangen: Generator)

The signal

and the reference states

are sequentially mixed on a beam splitter with tunable transmission

, and one output of the beam splitter is measured through photon counting, while the other gets mixed again. The left over signal after n uses of the beam splitter is then measured on a photon counter.

- class DolinarSimulation(particle_filter: ParticleFilter, phys_model: AgnosticDolinar, control_strategy: Callable, simpars: SimulationParameters, loss: int = 3)

Bases:

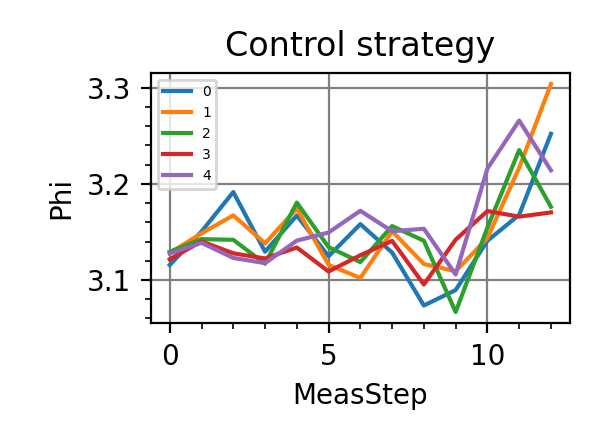

StatefulSimulationSimulation class for the agnostic Dolinar receiver. It works with a

ParticleFilterand aAgnosticDolinarobjects.The measurement loop is showed in the following picture.

Constructor of the

DolinarSimulationclass.Parameters

- particle_filter:

ParticleFilter Particle filter responsible for the update of the Bayesian posterior on

and on the sign of the signal. It contains the methods for applying the Bayes rule and computing Bayesian estimators from the posterior.

- phys_model:

AgnosticDolinar Model of the agnostic Dolinar receiver.

- simpars:

SimulationParameters Parameter simpars passed to the class constructor.

- loss: int = 3

Type of loss to be used in the simulation. It regulates the behavior of the

loss_function()method. There are nine possible losses to choose from. These are explained in the documentation ofloss_function().

- generate_input(weights: Tensor, particles: Tensor, state_ensemble: Tensor, meas_step: Tensor, used_resources: Tensor, rangen: Generator)

This method collects the information on

and on the sign of the signal produced by the particle filter and builds from them the input_strategy object to be fed to the neural network. This Tensor has nine scalar components, that are:

, the normalized intensity of the signal after the measurements, assuming that it was

at the beginning.

, the mean posterior estimator for signal intensity assuming

.

, the variance of the posterior distribution for the signal intensity, assuming

.

, the normalized intensity of the signal after the measurements, assuming that it was

at the beginning.

, the mean posterior estimator for signal intensity assuming

.

, the variance of the posterior distribution for the signal intensity, assuming

.

the posterior probability for the original signal to be

,

the index of the current measurement meas_step normalized against the total number of measurements,

the total number of photons measured, up to the point this method is called.

- loss_function(weights: Tensor, particles: Tensor, true_state: Tensor, state_ensemble: Tensor, true_values: Tensor, used_resources: Tensor, meas_step: Tensor)

The loss of the agnostic Dolinar receiver measures the error in guessing the sign of the signal

, after the signal has been completely measured.

There are nine possible choices for the loss, which can be tuned through the parameter loss passed to the constructor of the

DolinarSimulationclass. Before analyzing one by one these losses we establish some notation.is the posterior probability that the original signal is

,

,

is the sign of the signal in

, and

is the total number of observed photons. The error probability given by the resource limited Helstrom bound is indicated with

. This is computed by the function

helstrom_bound(). We also define the Kronecker delta function(70)

There are two possible policies for guessing the signal sign, that we indicate respectively with

and

. They are respectively

(71)

and

(72)

The possible choices for the loss are

loss=0:

,

loss=1:

,

loss=2:

,

loss=3:

,

loss=4:

,

loss=5:

,

loss=6:

,

loss=7:

,

loss=8:

,

Notes

The optimization with loss=3 works better for small

then for large

. This is because the scalar loss is the mean of the error probability on the batch and it is not so relevant to optimize the estimations those regions of the parameter

that have low error probability anyway. To summarize, loss=3 makes the optimization concentrate on the region of small

, since this region dominates the error.

Achtung!: A constant added to the loss is not irrelevant for the training, since the loss itself and not only the gradient is appears in the gradient descent update.

- particle_filter:

- dolinar_receiver(args, num_steps: int, loss: int = 3, learning_rate: float = 0.01)

Training and performance evaluation of the neural network and the static strategies that control the beam splitter reflectivity in the agnostic Dolinar receiver.

Parameters

- args:

Arguments passed to the Python script.

- num_steps: int

Number of reference states

in the Dolinar receiver.

- loss: int = 3

Type of loss used for the training, among the ones described in the documentation of

loss_function(). The loss used for the performances evaluation is always loss=0.- learning_rate: float = 1e-2

Initial learning rate for the neural network. The initial learning rate of the static strategy is fixed to 1e-2. They both decay with

InverseSqrtDecay.

- helstrom_bound(batchsize: int, n: int, alpha: Tensor, iterations: int = 200, prec: str = 'float64')

Calculates the Helstrom bound for the agnostic Dolinar receiver with n copies of

. It is the lower bound on the error probability for the discrimination task and it is given by the formula

(73)

where

is the probability distribution of a Poissonian variable, i.e.

(74)

Parameters

- batchsize: int

Number of bounds to be computed simultaneously, it is the first dimension of the alpha Tensor.

- n: int

Number of copies of

at disposal.

- alpha: Tensor

Tensor of shape (batchsize, 1), with the amplitudes of the coherent states for each discrimination task.

- iterations: int = 200

There is no closed formula for the error probability, this parameter is the number of summand to be computed in Eq.73.

- prec: str = “float64”

Floating point precision of the alpha parameter.

Returns

- Tensor:

Tensor of shape (batchsize, 1) and type prec containing the evaluated values of the Helstrom error probability for the discrimination taks.

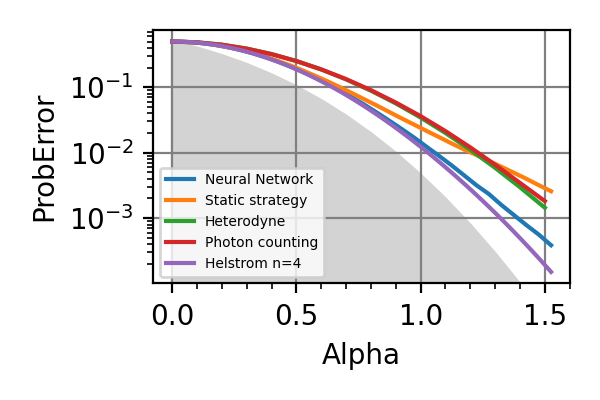

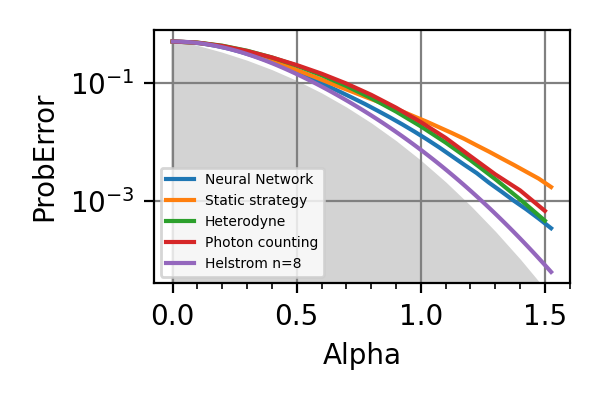

- main()

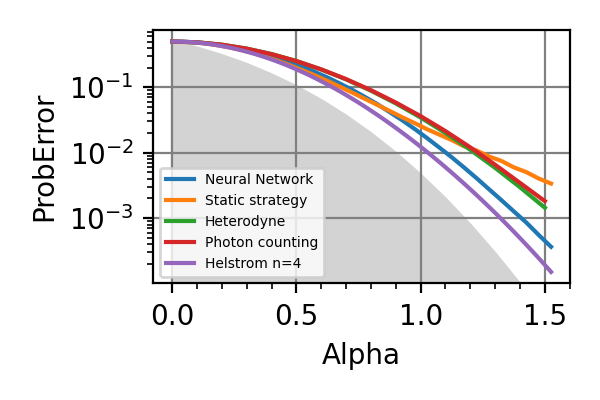

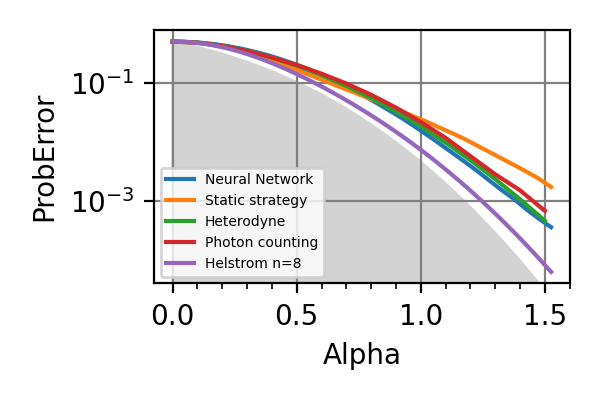

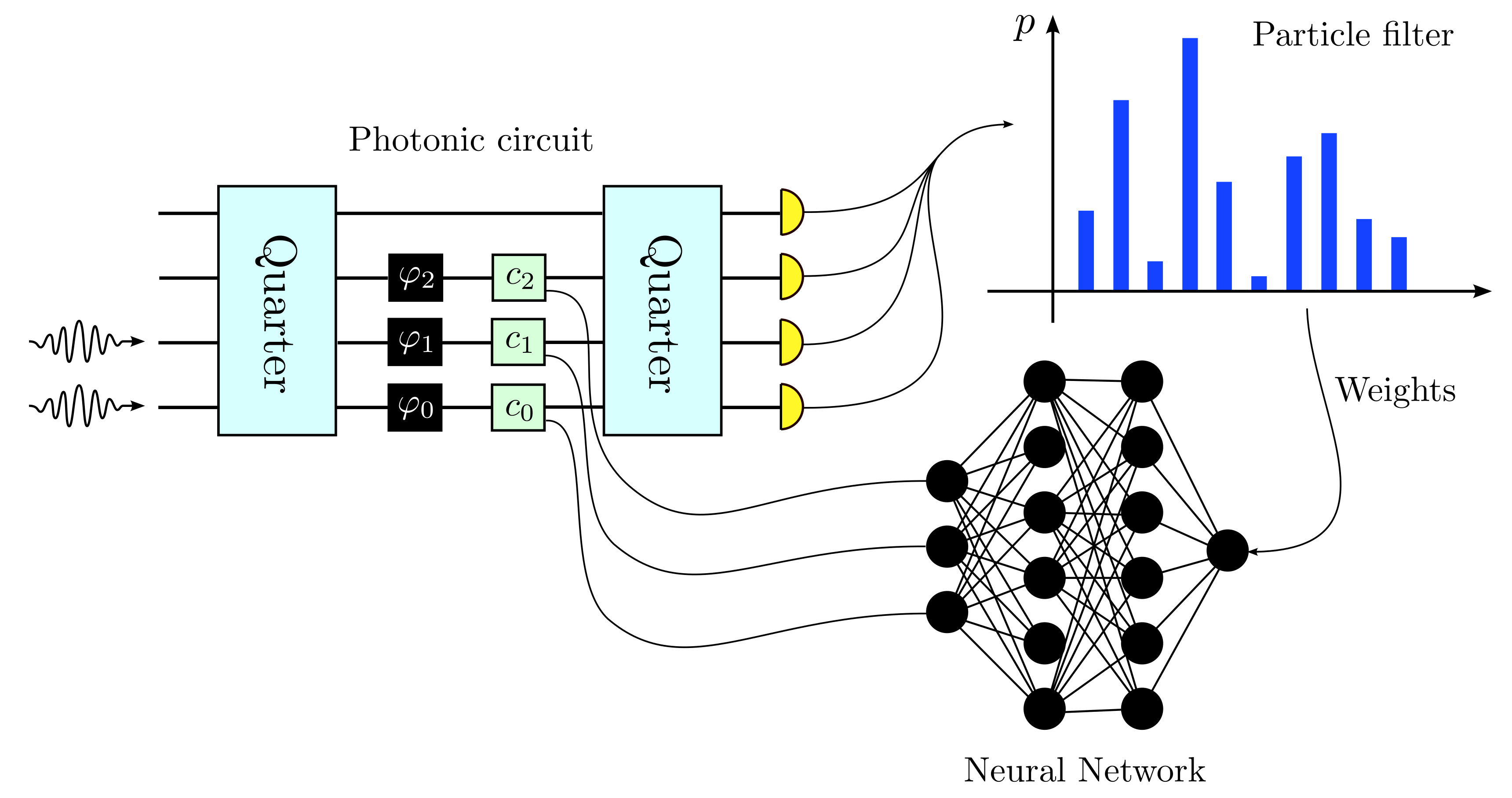

We have trained the optimal adaptive and static strategies for the agnostic Dolinar receiver.

The error probabilities as a function of

are reported in the following figure for

and

reference states

, for two different training losses (loss=3 and loss=6).

The Heterodyne and Photon counting are two 2-stage adaptive strategies based on first measuring all the reference state

and then trying to discriminate the signal based on the acquired information 5.

Fig. 35 n=4, loss=3

Fig. 36 n=8, loss=3

Fig. 37 n=4, loss=6

Fig. 38 n=8, loss=6

The shaded grey areas in the above plot indicate the Helstrom bound for the discrimination of

.

The loss=3 seems to be uniformly superior to loss=6 for every value of

. The advantage of using the neural network strategy is very contained with respect to already known strategies for

but it is significant for

. Instead of a NN a decision tree could have also been a viable strategy for controlling the beam splitters as done in the module

dolinar_three. As expected the gap between the precision of the adaptive and of the non-adaptive strategies increases with the number of photons in the signal.Notes

The loss used in calling

performance_evaluation()is always loss=0.The resources used in calling

store_input_control()are always the number of measurements.An application of machine learning (decision trees) to the Dolinar receiver have been presented also by Cui et al 7.

Known bugs: the training on the GPU freezes if the parameter alpha_bound is set to be too large. This is probably due to a bug in the implementation of the function random.poisson. A preliminary work-around has shown that reimplementing the extraction from a Poissonian distribution with random.categorical would solve the problem.

All the training of this module have been done on a GPU NVIDIA Tesla V100-SXM2-32GB, each requiring

hours.

- 7

C. Cui, W. Horrocks, S. Hao et al., Light Sci Appl. 11, 344 (2022).

- parse_args()

Arguments

- scratch_dir: str

Directory in which the intermediate models should be saved alongside the loss history.

- trained_models_dir: str = “./dolinar/trained_models”

Directory in which the finalized trained model should be saved.

- data_dir: str = “./dolinar/data”

Directory containing the csv files produced by the

performance_evaluation()and thestore_input_control()functions.- prec: str = “float64”

Floating point precision of the whole simulation.

- batchsize: int = 4096

Batchsize of the simulation.

- n: int = 64

Number of neurons per layer in the neural network.

- num_particles: int = 512

Number of particles in the ensemble representing the posterior.

- num_steps: int = 8

Number of reference states

, i.e. number of beam splitters.

- training_loss: int = 3

Loss used in the training. It is an integer in th range [0, 8]. The various possible losses are reported in the description of the

loss_function()method.- iterations: int = 32768

Number of training steps.

Quantum ML classifier

- class CoherentStates(batchsize: int, prec: str = 'float64')

Bases:

objectThis class contains the methods to operated on one ore more bosonic wires carrying coherent states. It can’t deal with squeezing or with noise, only with displacements, phase shifts and photon counting measurements.

Notes

The covariance matrix of the system is always proportional to the identity and corresponds to zero temperature.

Constructor of the

CoherentStatesclass.Parameters

- batchsize: int

Number of experiments that are simultaneously treated by the object.

Achtung! This is not the number of bosonic lines that an object of this class can deal with. This is in fact not an attribute of the class, and is not fixed during the initialization.

- apply_bs_network(variables_real: Tensor, variables_imag: Tensor, input_channels: Tensor, num_wires: int)

Applies the beam splitter network identified by variables_real and variables_imag to the state carried by the bosonic lines.

Parameters

- variables_real: Tensor

Tensor of shape (batchsize, ?, num_wires, num_wires) and type prec.

- variables_imag: Tensor

Tensor of shape (batchsize, ?, num_wires, num_wires) and type prec.

- input_channels: Tensor

Displacement vector of the bosonic wires. It is a Tensor of type prec and shape (batchsize, ?, 2 num_wires). The components of the i-th wire are at position i and i+num_wires.

- num_wires: int

Number of wires in the system.

Returns

- Tensor

Tensor of shape (batchsize, ?, 2 num_wires) and type prec, containing the evolved displacements of the bosonic wires state.

- apply_bs_network_from_unitary(unitary: Tensor, input_channels: Tensor)

Applies the beam splitter identified by variables_real and variables_imag.

Parameters

- unitary: Tensor

Tensor of shape (batchsize, ?, num_wires, num_wires) and type complex64 if prec is float32 or complex128 if prec if float64.

- input_channels: Tensor

Displacement vector of the bosonic wires. It is a Tensor of type prec and shape (batchsize, ?, 2 num_wires). The components of the i-th wire are at position i and i+num_wires.

Returns

- Tensor

Tensor of shape (batchsize, ?, 2 num_wires) and type prec, containing the evolved displacements of the bosonic wires wires.

Notes

The covariance matrix of the system is always proportional to the identity and corresponds to zero temperature.

- beamsplitter(input1: Tensor, input2: Tensor, theta: Tensor, phi: Tensor) Tuple[Tensor, Tensor]

Beam splitter (BS) operating on two bosonic modes carrying coherent states. It returns the two states outputted by the BS.

Parameters

- input1: Tensor

Tensor of shape (bs, ?, 2) and of type prec containing the x and y components of the first incident state on the BS.

- input2: Tensor

Tensor of shape (bs, ?, 2) and of type prec containing the x and y components of the second incident state on the BS.

- theta: Tensor

Tensor of shape (bs, ?) and of type prec. It contains the transmission coefficient of the BS. It is indicated with

in the formulas.

- phi:

Tensor of shape (bs, ?) and of type prec. It contains the phase applied by the BS. It is indicated with

in the formulas.

Returns

- Tensor:

Tensor of shape (bs, ?, 2) and type prec. Contains the first output state of the BS.

- Tensor:

Tensor of shape (bs, ?, 2) and type prec. Contains the second output state of the BS.

- mean_phot(input_state: Tensor) Tensor

Returns the mean photon number on a coherent state.

Parameters

- input_state: Tensor

Tensor of shape (bs, ?, 2) and of type prec containing the x and y components of the coherent state.

Returns

- Tensor

Tensor of shape (bs, ?) and type prec containing the average number of photons of the coherent states described in input_state.

- phase_shift(input_state: Tensor, phase: Tensor) Tensor

Applies a phase shift on the coherent states encoded in input_state.

Parameters

- input_state: Tensor

Tensor of shape (bs, ?, 2) and of type prec containing the x and y components of the coherent state to be rotated.

- phase: Tensor

Tensor of shape (bs, ?) containing the phase for the rotation of each state in the batch.

Returns

- Tensor:

Tensor of shape (bs, ?, 2) and of type prec containing the rotated coherent state.

- photon_counter(input_state: Tensor, rangen: Generator, num_systems: int = 1) Tuple[Tensor, Tensor]

Photon counting measurement on a bosonic line carrying a coherent state.

Parameters

- input_state: Tensor

Tensor of shape (bs, ?, 2) and of type prec containing the x and y components of the coherent state on which the photon counting measurement will be performed.

- rangen: Generator

Random number generator from the module

tensorflow.random.- num_systems: int = 1

Number of independent coherent states processed per batch. It is the size of the second dimension of input_state.

Returns

- outcomes: Tensor

Tensor of shape (bs, num_systems, 1) and type prec containing the outcomes of the photon counting measurement (the number of photons).

- log_prob: Tensor

Tensor of shape (bs, num_systems) and type prec containing the logarithm of the probability of the observed number of photons.

- postselect_photon_counter(input_state: Tensor, outcomes: Tensor) Tensor

Returns the logarithm of the probability of observing outcomes after a photon counting measurement on input_state.

Parameters

- input_state: Tensor

Tensor of shape (bs, ?, 2) and of type prec containing the x and y components of the coherent state on which the photon counting measurement is performed.

- outcomes: Tensor

Tensor of shape (bs, ?, 1) and type prec containing the outcomes of the photon counting measurement (the number of photons).

Returns

- log_prob: Tensor

Tensor of shape (bs, ?) and type prec containing the logarithm of the probability of observing outcomes on input_state.

- class QMLDiscriminator(batchsize: int, params: List[Parameter], num_ref: int, resources: str = 'alpha', arch: str = 'serial', decision_tree: bool = False, prec: str = 'float64')

Bases:

StatefulPhysicalModel,CoherentStatesBlueprint class for the quantum Machine Learning (QML) based classifier able to distinguish between three coherent states

,

,

, given

copies of each of them, which constitute the quantum training set. The signal state is a single copy of a coherent state, promised to be one of the three training states, i.e.

with s=0, 1, 2.

Notes

The priors on the real components of the training states

,

,

are uniform, as the prior on the signal classes s=0, 1, 2.

Attributes

- bs: int

The batchsize of the physical model, i.e., the number of simultaneous signal testings simulated.

- controls: List[

Control] The controls of the QML classifier are the transmission coefficient and the extra phase of the beam splitter that precedes the photon counting. These can be chosen adaptively according to the previous measurements outcomes.

- params: List[

Parameter] List of parameters to be estimated, passed to the class constructor. These are the real components of the unknown training states (continuous parameter), and the class of the signal.

- controls_size: int = 2

Number of scalar controls in the QML classifier, i.e. 2, the two angles

and

characterizing the beam splitter transformation performed by

beamsplitter().- d: int = 7

The number of unknown parameters to estimate, i.e. 7.

- state_specifics:

StateSpecifics Size of the state of the apparatus, which can be 2 for the serial architecture or 6 for the parallel, plus the type of the state, i.e. prec. If decision_tree=True the size of the state is increased by one.

- recompute_state: bool = True

Flag to the constructor of the

PhysicalModelclass.- outcomes_size: int = 1

Number of scalar outcomes for each measurement in the apparatus, i.e. one, the number of photons in the photon counter.

- prec: str

The floating point precision of the controls, outcomes, and parameters.

- num_ref: int

Number of copies of each training state, passed to the class constructor.

- num_steps: int

Total number of photon counting measurements in the apparatus. It depends on num_ref and on the architecture, in particular it is 3 num_ref+1 for the serial apparatus and 3 num_ref+3 for the parallel one.

- resources: {“alpha”, “step”}

Resource type passes to the class constructor. It can be the average number of photons in the device (“alpha”) or the number of measurements (“step”).

- dt: bool

Flag decision_tree passed to the class constructor. It should be activated if a decision tree is used for controlling the beam splitters.

- arch: {“serial”, “parallel”}

Architecture of the QML classifier passed to the class constructor. An object of the child class

QMLDiscriminatorSerialwill have arch=”serial”, while an instance ofQMLDiscriminatorParallelwill have arch=”parallel”.

Constructor of the

QMLDiscriminatorclass.Parameters

- batchsize: int

Batchsize of the physical model, i.e., the number of simultaneous estimations.

- params: List[

Parameter] A list of unknown parameters to be estimated, alongside the corresponding sets of admissible values. For the three states Dolinar these are the x and y components of

,

,

(six continuous parameters in total) plus the class of the signal, which is a discrete parameter that takes values in the set {0, 1, 2}.

- num_ref: int

Number of copies of each training state.

- resources: {“alpha”, “step”}

Two types of resource counting are possible in the apparatus. The first is the the total number of photon counting measurements, corresponding to the value “step” for the resources parameter. The second is the mean number of photons in the apparatus, corresponding to the value “alpha” for this parameter. The average photon number is

(75)

where

is the number of training copies, i.e. num_ref.

- arch: {“serial”, “parallel”}

Architecture of the QML classifier.

- decision_tree: bool = False

If this flag is True, then the physical model of the device is set up to be used with a decision tree controlling the beam splitters. This affect the state size, which now contains an integer that univocally identifies the string of outcomes that have already appeared in the photon counting measurements.

- prec: str = “float64”

Floating point precision of the controls, outcomes, and parameters.

- count_resources(resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, state: Tensor, meas_step: Tensor) Tensor

The resources can be either the number of measurement steps or the mean number of photons in the system. In the second case the resource is considered consumed after the last photon counting measurement closing the device.

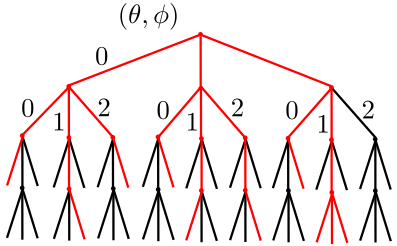

- class QMLDiscriminatorParallel(batchsize: int, params: List[Parameter], num_ref: int, resources: str = 'alpha', decision_tree: bool = False, prec: str = 'float64')

Bases:

QMLDiscriminatorParallel strategy for classifying a coherent state, given three hypothesis.

The original signal is first equally splitted on three wires, i.e.

. On each line the signal is mixed with the copies of the same training state.

The order of the measurements is show in the figure near the symbol for the photon counter. The measurements are not really done in parallel as it can be seen. All the outcomes of the previous photon counters contributes to the determination of the next controls through the particle filter and the neural network (or the decision tree). In order to realize the measurements in this order the optical path along the the wires should be prolonged differently.

Constructor of the

QMLDiscriminatorParallelclass.Parameters

- batchsize: int

Number of simultaneous estimations.

- params: List[

Parameter] Contains the real components of the unknown training states and the signal to be classified.

- num_ref: int

Number of training states for each class.

- resources: {“alpha”, “step”}

Type of resources counted.

- decision_tree: bool = False

Indicates that a decision tree is used for controlling the beam splitters.

- prec:

Floating point precision of the estimation.

- initialize_state(parameters: Tensor, num_systems: int) Tensor

Initializes the true state of the signal

for the parallel device.

Parameters

- parameters: Tensor

Tensor of shape (bs, num_system, 7) and type prec containing the real components of the three training states and the index classifying the true signal (the seventh parameter).

- num_systems: int

Size of the second dimension of the parameters Tensor. It is the number of systems treated per batch element.

Returns

- Tensor:

Tensor of shape (bs, num_system, 6) containing the state of the true signal, equally distributed on the three lines of the discrimination device with parallel architecture. If decision_tree=True the size of the last dimension of the returned Tensor is 7.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, state: Tensor, meas_step: Tensor, num_systems: int = 1)