Submodules

parameter

Submodule containing the class Parameter and the utility

function trim_single_param().

- class Parameter(bounds: Optional[Tuple[float, float]] = None, values: Optional[Tuple[float, ...]] = None, randomize: bool = True, *, name: str)

Bases:

objectThis class provides the abstract description of a parameter that needs to be estimated in a quantum metrological task. The user can define one or more

Parameterobjects that represent the targets of the estimation.Achtung! The

Parameterobjects should only be defined within the initialization of aPhysicalModelobject.In an experiment, the values of the parameters are encoded in the state of the quantum system used as a probe. Depending on whether we can directly access the encoding quantum channel or not, we classify the task as a quantum estimation problem, if we are only given the codified probe state, or as a quantum metrology problem, if we can access the channel.

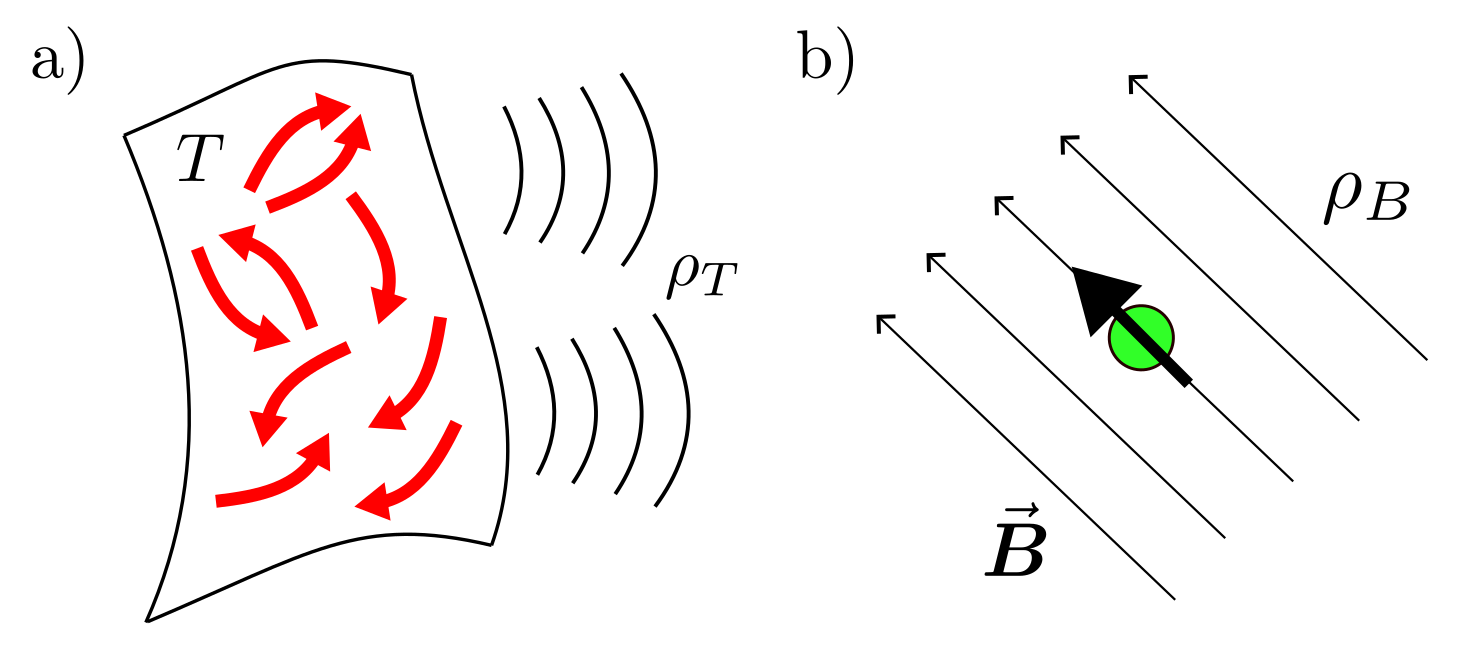

An example of parameter estimation would be receiving the radiation generated by a distribution of currents on a plane, which depends on the properties of the source, like the temperature for example 1. In this scenario, the quantum probe is the radiation. Since the emission of the radiation happens by hypothesis in a far and unaccessible region, we don’t have direct access to the quantum channel that performs the encoding, but only to the encoded states

, which is the state of the radiated field at detection.

An example of a quantum metrological task is the estimation of the environmental magnetic field with a spin, for which we can choose the initial state and the duration of the interaction. The final state of the spin is

.

A parameter can be continuous or discrete. Naturally continuous parameters are the magnetic field and the temperature, for example. A discrete parameter is the sign of a signal or the structure of an interaction 2. When discrete parameters are present, we are in the domain of hypothesis testing. In a metrological task, we may have a mix of continuous and discrete parameters, like in the

dolinarreceiver.A parameter can be a nuisance; which is an unknown parameter that needs to be estimated, on which we however do not evaluate the precision of the procedure because we are not directly interested in the estimation of this parameter. An example of this is the fluctuating optical visibility of an interferometer when we are only interested in the phase. Estimating the nuisance parameters is often necessary/useful to estimate the parameters of interest. Whether a parameter is a nuisance or not is not specified in the class

Parameter, but in the user-defined methodloss_function()of the classesStatefulSimulation.- 1

E. Köse and D. Braun Phys. Rev. A 107, 032607 (2023).

- 2

A. A. Gentile, B. Flynn, S. Knauer, et al. Nat. Phys. 17, 837–843 (2021).

Attributes

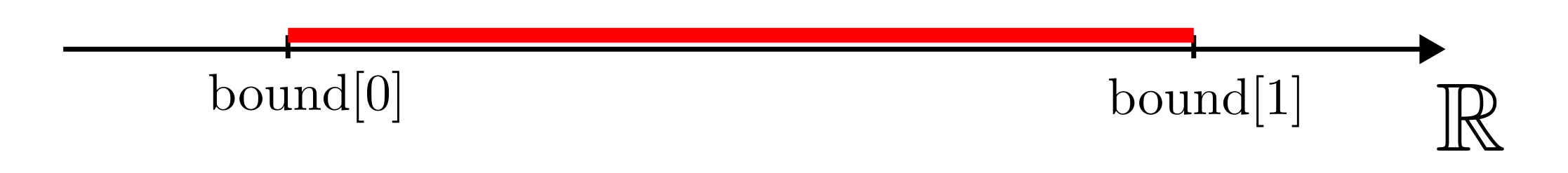

- bounds: Tuple[float, float]

Tuple of extrema of the set of admissible values for the parameter. It is defined both for continuous and discrete parameters. In this latter case it is bounds[0]=min(values) and bounds[1]=max(values).

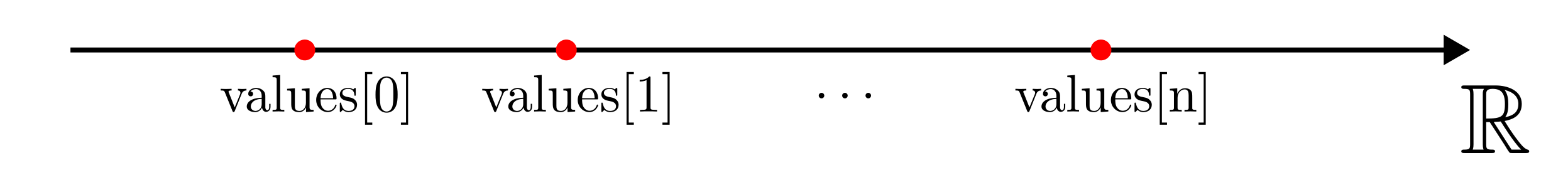

- values: Tuple[float, …]

Tuple of admissible values for a parameter. This tuple contains the values passed to the class constructor in the parameter values, sorted from the smallest to the largest and without repetitions.

Achtung! This attribute is defined only for a discrete parameter.

- continuous: bool

Determines if the parameter is continuous or not. It controls the behavior of the

reset()method.- randomize: bool

Determines if the initialization of the parameter values is stochastic or deterministic. It controls the behavior of the

reset()method.- name: str

Name of the parameter.

- bs: int

Size of the first dimension of the Tensor produced by the

reset()method and number of estimations performed simultaneously in the simulation.- prec: str

Floating point precision of the parameter values, can be float32 or float64.

Notes

Beside the variables passed to the constructor, a

Parameterobject requires the manual change of two other attributes to work properly. One attribute is the precision prec, which can be set to either float32 or float64 (with the default value being float64). The other attribute is the batchsize bs, which has a default value of 0. These changes are made automatically if theParameterobject is defined within the call to the constructor of thePhysicalModelclass.Parameters passed to the class constructor.

Parameters

- bounds: Tuple[float, float], optional

Tuple of floats containing the extrema of the interval of admissible values for a continuous parameter, where bounds[0] is the minimum and bounds[1] the maximum.

If the bounds parameter is passed to the object constructor, then the

Parameterobject is automatically treated as continuous and the attribute continuous is therefore set to True.Achtung! The bounds and values parameters are mutually exclusive and cannot both be both passed to the

Parameterclass constructor.- values: Tuple[float, …], optional

Tuple of float of unknown length containing the admissible values for a discrete parameter. Repetitions are ignored.

If the values parameter is passed to the constructor of the object, this is automatically treated as a discrete parameter and the attribute continuous is set to False.

Achtung! The bounds and values parameters are mutually exclusive and cannot both be both passed to the

Parameterclass constructor.Achtung! Even if only the values parameter is passed in the call to the class constructor, the bounds tuple is still included in the attributes. In this case it is defined as bounds[0]=min(values) and bound[1]=max(values).

- randomize: bool = True

This flag controls whether the function

reset()should generate random values or behave (almost) deterministically.Note: The value of this flag should be changed only for a discrete parameter.

Th use of this flag is explained through the following example. Consider a parameter that can assume three values {a, b, c}. If the randomization is on and we call the

reset()method with parameter num_particles, we get a Tensor of shape (bs, num_particles, 1) where the entries of each row have been extracted uniformly and stochastically from the set {a, b, c}. For example, if num_particles=12, a row could look like (a, b, a, c, b, b, a, c, a, c, a, b). If we however set randomize=False, then the rows are all equal to (a, a, a, a, b, b, b, b, c, c, c, c). With the randomization turned off, if k>1 is the number of admissible values for the parameter, we have lfloor num_particles/k rfloor repetitions of each element of the tuple values in each row of the output Tensor. If num_particles is not divisible by k, the values of the last, at most k-1, remaining particles are extracted uniformly and stochastically from the tuple values.If we generate a single particle, its value will always be extracted randomly and uniformly from values, since one will be always the remainder of the division 1/k.

The utility of setting randomize=False is confined to the task of hypothesis testing, where we want a number of particles in the particle filter that matches the number of hypotheses. In this case, in the initialization of the

ParticleFilterobject, we will set num_particles=k, with k being the number of hypotheses, so that with randomize=False, the particle filter ensemble contains exactly one and only one particle for each hypothesis. Thereset()method, called with parameter num_particles=1 for generating the true values in the simulation, returns, however, a single uniformly randomly extracted hypothesis among the admissible ones defined in values.Note: Setting this flag to False for a continuous parameter has undesired effects. In this scenario the true values of the parameter in the simulation are generated by calling

reset()with num_particles=1, which, for a continuous parameter, is implemented as a linspace(bounds[0], bounds[1], 1), that always returns bounds[0].- name: str

Name of the parameter.

- reset(seed: Tensor, num_particles: int, fragmentation: int = 1) Tensor

Generates a Tensor of admissible values for the parameter.

Produces a Tensor of shape (bs, num_particles, 1) of type prec, with a series of independent valid values for the parameter. Valid means that they lie in the interval specified by the tuple bounds if the parameter is continuous or, if it is discrete, that they belong to the tuple values, both being attributes of the

Parameterclass. The random entries are generated to cover uniformly the set of admissible values, whether it is discrete or continuous. According to the parameter randomize of the class constructor, this function implements a stochastic or deterministic generation of the Tensor entries.Parameters

- seed: Tensor

Seed of the random number generator that initializes the values of the parameter. It is a Tensor of type int32 and of shape (2,).

This is the kind of seed that is accepted by the stateless random functions of the module

tensorflow.random.- num_particles: int

Size of the second dimension of the Tensor generated by this method. It is the number of values generated for each batch.

- fragmentation: int = 1

Number of pattern repetition in populating the particles with the parameter values. This parameter is only important for a discrete parameter with randomize=False.

Returns

Notes

This method is used in

ParticleFilterto initialize the particle filter ensemble and inPhysicalModeland children classes to produce the true values of the parameter in the estimation task, according to which the outcomes of the measurements are simulated.Achtung!: This method should be called with randomize=True for a continuous parameter. If randomize=False, the true values of the parameter in the simulation of the estimation are generated by calling this method with num_particles=1, which, for a continuous parameter is implemented as a linspace(bounds[0], bounds[1], 1), which always returns bounds[0].

- trim_single_param(old_param: Tensor, param: Parameter, one_arr: Tensor) Tensor

Trims the values of a given Tensor to be within the bounds set for the corresponding

Parameterobject. If the parameter is discrete the values of the particles are levelled to the closest element among the admissible values.Parameters

- old_param: Tensor

Tensor of values for the parameter param that do not necessarily lay in the acceptable region of parameter values, defined by param.bounds. The shape and the type of this Tensor must be the same of one_arr.

- param: Parameter

Describes abstractly the parameter.

- one_arr: Tensor

Tensor filled with ones, of the same type and shape as old_param.

Returns

- Tensor

A modified version of old_param, where all the entries that were greater than param.bounds[1] have been set to param.bounds[1] and all those that were smaller than param.bounds[0] have been set to param.bounds[0]. If the parameter is discrete and some entries of old_param don’t exactly belong to the set of admissible values, i.e. param.values, the said entries are “levelled” to the closest element of param.values. This is useful for correcting the distortions of the discrete parameter values introduced by the perturbation in the resampling procedure.

Notes

Since discrete parameters also have a bounds attribute, this function works on them as well. It is used in the resampling routine of the

ParticleFilterclass, where the particles are perturbed or newly generated and could fall out of the admissible values set.

physical_model

Submodule containing the PhysicalModel class

alongside with StateSpecifics and Control.

- class Control(name: str, is_discrete: bool = False)

Bases:

objectControl parameter for the physical sensor.

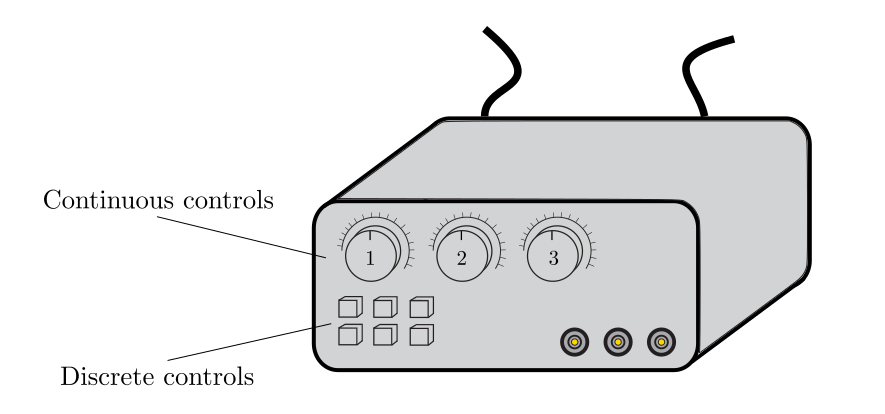

A control is a tunable parameter that can be adjusted during the experiment. This could be for example the measurement duration, the detuning of the laser frequency driving a cavity, a tunable phase in an interferometer, or other similar parameters.

In a pictorial sense, control parameters are all the buttons and knobs on the electronics of the experiment.

A control can be continuous if it takes values in an interval, or discrete if it takes only a finite set of values (like on and off).

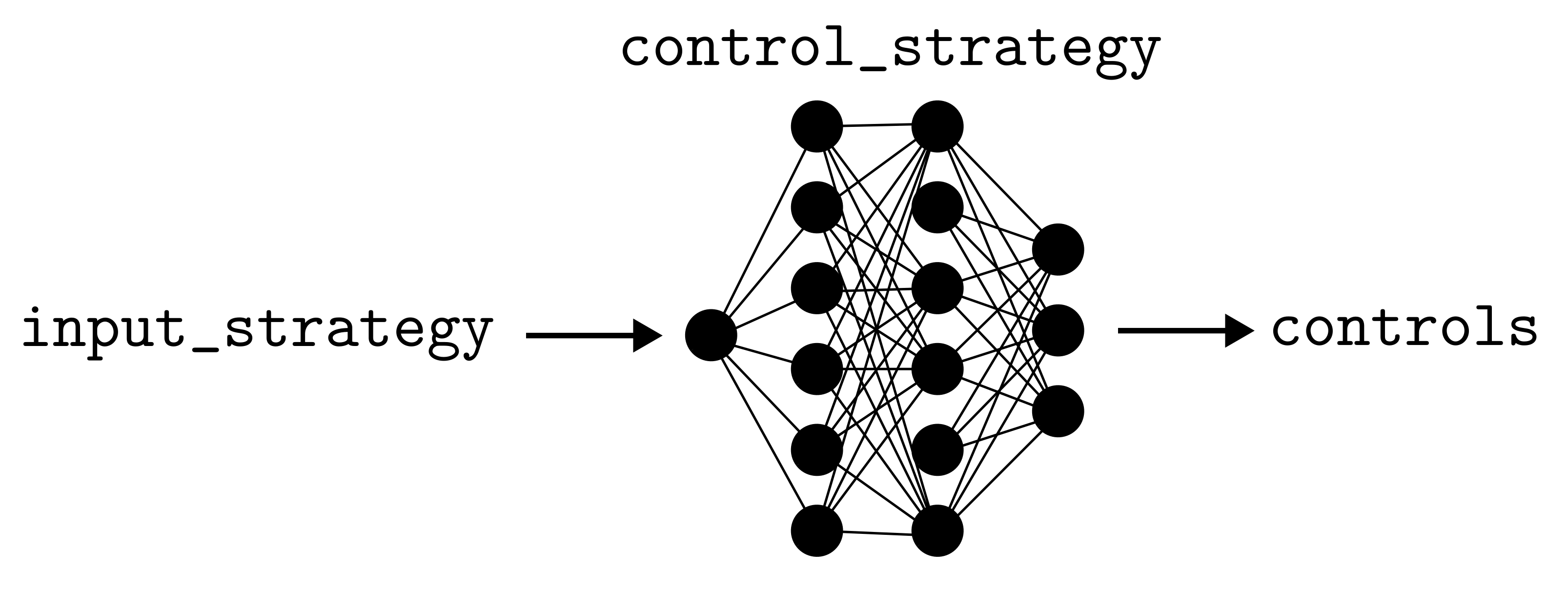

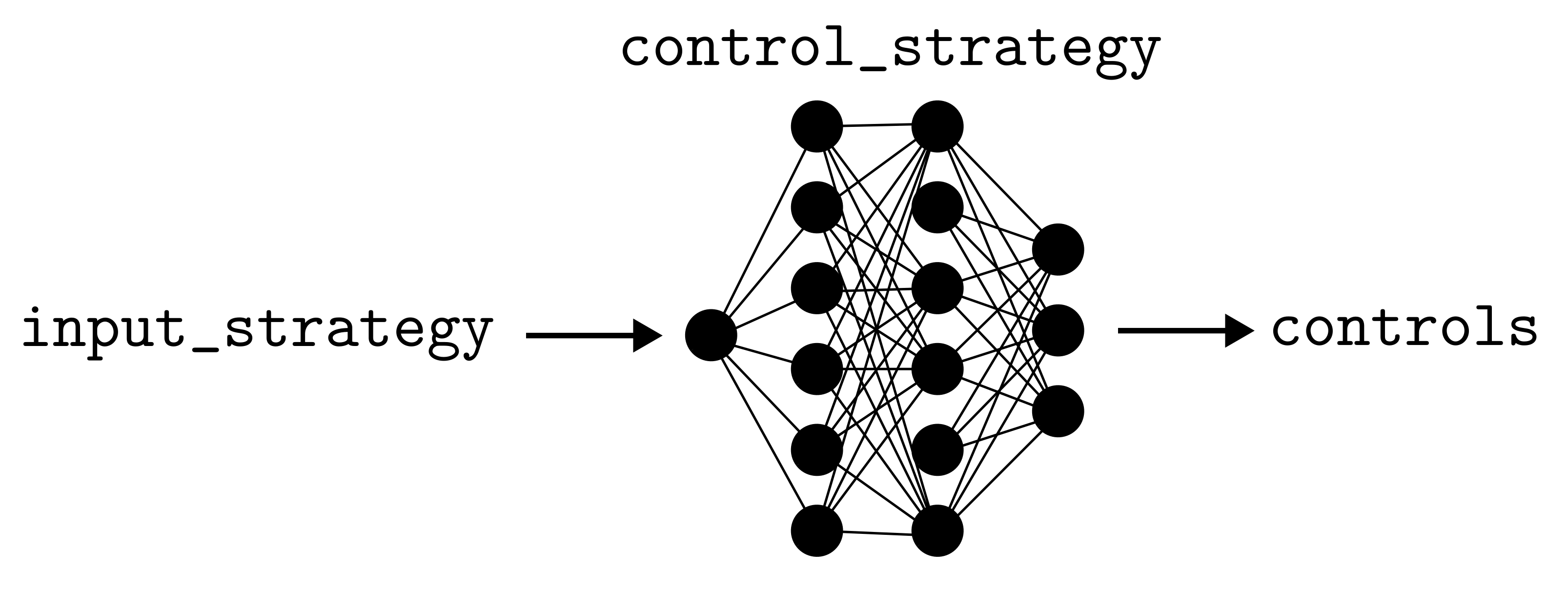

- is_discrete: bool = False

If the parameter is discrete this flag is true.

If in the simulation at least one of the controls is discrete, then the control_strategy attribute of the

Simulationclass should be a callable with headercontrols, log_prob_control = control_strategy(input_strategy, rangen)that is, it can contain stochastic operations (like the extraction of the discrete control from a probability distribution) and it must return the logarithm of the extraction probability of the chosen controls.

- name: str

Name of the control.

- class PhysicalModel(batchsize: int, controls: List[Control], params: List[Parameter], state_specifics: StateSpecifics, *, recompute_state: bool = True, outcomes_size: int = 1, prec: str = 'float64')

Bases:

objectAbstract representation of the physical system used as quantum probe.

This class and its children,

StatefulPhysicalModelandStatelessPhysicalModel, contain a description of the physics of the quantum probe, of the unknown parameters to estimate, of the dynamics of their encoding on the probe, and of the measurements.Achtung! In implementing a class for some particular device, the user should not directly derive

PhysicalModel, but instead the classesStatefulPhysicalModelandStatelessPhysicalModelshould be the base objects.When programming the physical model, the first and most important decision to make is whether the model is stateful or stateless. In the former case,

StatefulPhysicalModelshould be used, while in the latter caseStatelessPhysicalModelshould be chosen. The probe is stateless if it is reinitialized after each measurement and no classical information needs to be passed from one measurement to the next, other than that contained in the particle filter ensemble. If this is not the case, then the probe is stateful.Achtung! If some classical information on the measurement outcomes needs to be passed down from measurement to measurement, other than the information contained in the particle filter ensemble, then the probe is stateful. For example, if we perform multiple weak measurements on a signal and want to keep track of the total number of photons observed, then this information is part of the signal state. See the

dolinarmodule for an example.Attributes

- bs: int

The batch size of the physical model, i.e., the number of probes on which the estimation is performed simultaneously in the simulation.

- controls: List[

Control] A list of controls on the probe, i.e., the buttons and knobs of the experiment.

- params: List[

Parameter] A list of unknown parameters to be estimated, along with the corresponding sets of admissible values.

- controls_size: int

The number of controls on the probe, i.e., the length of the controls attribute.

- d: int

The number of unknown parameters to estimate, i.e., the length of the params attribute.

- state_specifics:

StateSpecifics The size of the last dimension and type of the Tensor used to represent the state of the probe internally in the simulation.

- recompute_state: bool

A flag passed to the constructor of the

PhysicalModelclass; it controls whether the state ensemble should be recomputed after a resampling of the particles or not.- outcomes_size: int

A parameter passed to the constructor of the

PhysicalModelclass; it is the number of scalar outcomes of a measurement on the probe.- prec: str

The floating point precision of the controls, outcomes, and parameters.

Examples

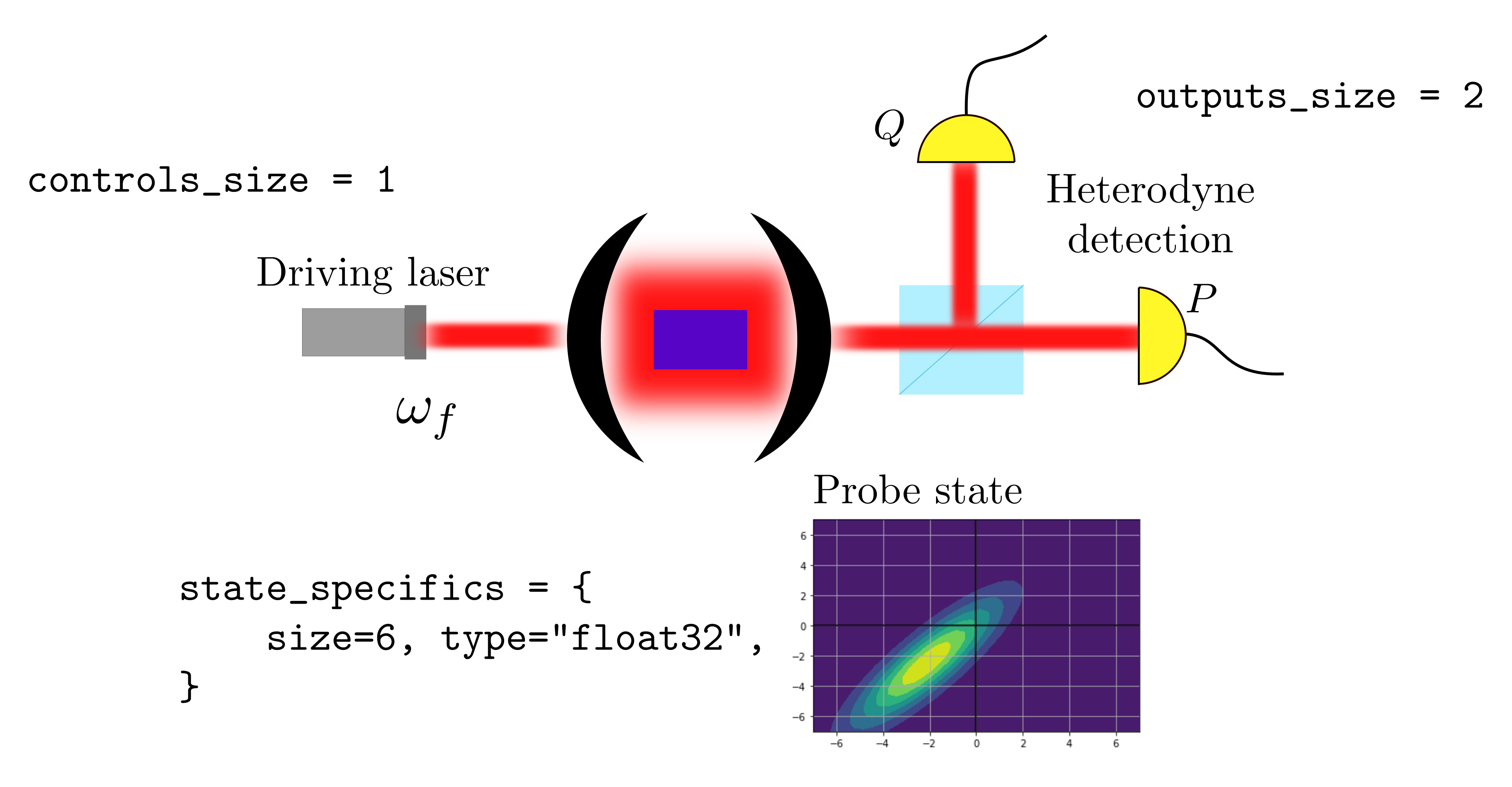

For a metrological task performed on a cavity that is driven by a laser, and where we can control the laser frequency, we have control_size=1. If a heterodyne measurement is performed on the cavity, then outcomes_size=2, because a measurement produces an estimate for both the quadratures. Assuming the cavity state is Gaussian, it can be represented by 6 real numbers (two for the mean and four for the covariance). The number of scalar quantities can be reduced to 5 by observing that the covariance matrix is symmetric.

Parameters passed to the constructor of the

PhysicalModelclass.Parameters

- batchsize: int

Batch size of the physical model, i.e., the number of simultaneous estimations in the simulation.

- controls: List[

Control] A list of controls for the probe (the buttons and knobs of the experiment).

- params: List[

Parameter] A list of

Parameterobjects that represent the unknowns of the estimation.- state_specifics:

StateSpecifics The size of the last dimension and type of the Tensor used to represent internally in the simulation the state of the probe.

- recompute_state: bool = True

Controls whether the state ensemble should be recomputed with the method

recompute_state()after a resampling of the particles or not.Achtung! This flag should be deactivated only if the states in the ensemble don’t depend on the particles or if the resampling has been deactivated.

Recomputing the state is very expensive, especially when there have already been many measurement steps. One should consider whether to resample the particles at all in a simulation involving a relatively large quantum state.

- outcomes_size: int = 1

Number of scalars collected in a measurement on the probe.

- prec: str = “float64”

Floating point precision of the controls, outcomes, and parameters.

- true_values(rangen: Generator) Tuple[Tensor]

Provides a batch of fresh “true values” for the parameters of the system, from which the measurement is simulated.

Parameters

- rangen: Generator

A random number generator from the module

tensorflow.random.

Returns

- Tensor

Tensor of shape (bs, 1, d), where bs and d are attributes of the

PhysicalModelclass. For each estimation in the batch, this method produces a single instance of each parameters in the attribute params, extracted uniformly from the allowed values. For doing so the methodreset()is called with num_particles=1, for ech parameter.

- wrapper_count_resources(resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, state: Tensor, meas_step: Tensor) Tuple[Tensor]

wrapper_model

- class StateSpecifics

Bases:

TypedDictSize and type StateSpeof the probe state collected in a single object.

Examples

For a single-qubit probe, the state can be represented by a 2x2 complex matrix with four entries, or by a 3-dimensional vector of real values using the Bloch representation. For a cavity with a single bosonic mode, which is always in a Gaussian state, we need a 2x2 real covariance matrix and a 2-dimensional real vector to represent the state. This requires a total of 6 real values, which can be reduced to 5 noticing that the covariance matrix is symmetric.

- size: int

Size of a 1D Tensor necessary to describe unambiguously the state of the probe.

- type: str

Type of the Tensor representing the probe state. Can be whatever type admissible in Tensorflow.

stateful_physical_model

Module containing the stateful version

of PhysicalModel.

- class StatefulPhysicalModel(batchsize: int, controls: List[Control], params: List[Parameter], state_specifics: StateSpecifics, *, recompute_state: bool = True, outcomes_size: int = 1, prec: str = 'float64')

Bases:

PhysicalModelAbstract description of a stateful quantum probe.

Parameters passed to the constructor of the

PhysicalModelclass.Parameters

- batchsize: int

Batch size of the physical model, i.e., the number of simultaneous estimations in the simulation.

- controls: List[

Control] A list of controls for the probe (the buttons and knobs of the experiment).

- params: List[

Parameter] A list of

Parameterobjects that represent the unknowns of the estimation.- state_specifics:

StateSpecifics The size of the last dimension and type of the Tensor used to represent internally in the simulation the state of the probe.

- recompute_state: bool = True

Controls whether the state ensemble should be recomputed with the method

recompute_state()after a resampling of the particles or not.Achtung! This flag should be deactivated only if the states in the ensemble don’t depend on the particles or if the resampling has been deactivated.

Recomputing the state is very expensive, especially when there have already been many measurement steps. One should consider whether to resample the particles at all in a simulation involving a relatively large quantum state.

- outcomes_size: int = 1

Number of scalars collected in a measurement on the probe.

- prec: str = “float64”

Floating point precision of the controls, outcomes, and parameters.

- count_resources(resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, state: Tensor, meas_step: Tensor) Tensor

Updates the resource Tensor, which contains, for each estimation in the batch, the amount of resources consumed up to the point this method is called.

Achtung! This method has to be implemented by the user.

The optimization of the estimation precision and the visualization of the performances of an agent are organized within the precision-resources paradigm. The precision is identified with the loss to be minimized in the training cycle, defined in

loss_function(), but the precisions of two instances of the same metrological task can only be fairly compared if the costs involved in them is the same. This cost is what we call resource. Some examples of resources are the number of measurements on the probe, the number of identical codified states consumed, the amplitude of a signal, the total measurement time, etc. There is no right or wrong resource in an estimation task, it depends on the user choices and on his understanding of the limitations in the laboratory implementation of the task.For each measurement, the method

count_resources()computes a scalar value based on the controls of the measurement, the measurement step counter, and the true values of the parameter. This scalar is interpreted as the partial resourcesconsumed in the measurement to which the parameters in the call are referring. These partial resources are summed step by step to get the total amount of consumed resources at step

of the measurement loop in

execute(), i.e.(1)

The measurement loop stops when the total amount of consumed resources reaches the value of the attribute

max_resourcesof the classSimulationParameters, set by the user.The definition of the resources doesn’t only have an impact on the stopping condition of the measurement loop, but it defines how the performances of an agent are visualized. The function

performance_evaluation()produces a plot of the mean loss for the estimation task as a function of the consumed resources. Different definitions of the methodcount_resources()will produce different plots.resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, state: Tensor, meas_step: Tensor,

Parameters

- resources: Tensor

A Tensor of shape (bs, 1) containing the total amount of consumed resources for each estimation in the batch up to the point this method is called.

- outcomes: Tensor

The observed outcomes of the measurement. This is a Tensor of shape (bs, 1, outcomes_size) and of type prec. bs, outcomes_size, and prec are attributes of the

PhysicalModelclass.- controls: Tensor

Controls of the last measurement on the probe. It is a Tensor of shape (bs, controls_size) and of type prec, with bs, controls_size, and prec are attributes of the

PhysicalModelclass.- true_values: Tensor

Contains the true values of the unknown parameters in the simulations. It is a Tensor of shape (bs, 1, d) and type prec, with bs and d being attributes of the

PhysicalModelclass.- state: Tensor

Current state of the quantum probe, computed from the evolution determined (among other factors) by the encoding of parameters. This is a Tensor of shape (bs, num_systems, state_specifics[“size”]), where state_specifics is an attribute of the

PhysicalModelclass. Its type is state_specifics[“type”].- meas_step: Tensor

The index of the current measurement on the probe system. The counting starts from zero. It is a Tensor of shape (bs, 1) and of type int32.

Return

- Tensor

Resources consumed in the current measurement step, for each simulation in the batch. It is a Tensor of shape (bs, 1) of type prec.

Examples

For the estimation of a magnetic field with an NV-center, two common choices for the resources are the number of Ramsey measurements, which mean

, and the employed time, which is

, where

is the interaction time between the magnetic field and the probe.

- initialize_state(parameters: Tensor, num_systems: int) Tensor

Initialization of the probe state.

Achtung! This method must be implemented by the user.

Parameters

- parameters: Tensor

Tensor of shape (bs, num_systems, d) and of type prec, where bs, d, and prec are attribute of the

Simulationclass. These are values for the unknown parameters associated to each of the probe states that must be initialized in this method, be it the “true values” used to simulate the measurement outcomes or the particles in the particle filter ensemble.- num_systems: int

The number of systems to be initialized for each simulation in the batch. This is the size of the second dimension of the Tensor that this method should return.

Returns

- state: Tensor

The initialized state of the probe and/or some classical variable state. This is a Tensor of shape (bs, num_systems, state_specifics[“size”]) of type state_specifics[“type”], where state_specifics is an attribute of the

PhysicalModelclass. It may be desirable to initialize the state of the probe depending on the values of parameters, for example because the encoding has happened outside of the laboratory and we are given the encoded probe state only. However, if the encoding happens in the lab between the measurements it make sense to reset the probe state at the beginning of the estimation with a parameter independent state.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, state: Tensor, meas_step: Tensor, num_systems: int = 1) Tuple[Tensor, Tensor]

Description of the encoding and the measurement on the probe. This method returns the probability of observing a certain outcome and the corresponding evolved state after the measurement backreaction.

Achtung! This method must be implemented by the user.

Achtung! This method does not implement any stochastic evolution. All stochastic operations in the measurement of the probe and in the evolution of the state should be defined in the method

perform_measurement().Suppose that the state of the probe after the encoding is

, where

is parameter and

is control. The probe state will, in general, depend on the entire history of past outcomes and controls, but we neglect the corresponding subscripts to avoid making the notation too cumbersome. The probe measurement is associated with an ensemble of positive operators

, where

is the outcome and

is the set of possible outcomes. According to the laws of quantum mechanics, the probability of observing the outcome

is then

(2)

and the backreaction on the probe state after having observed

is

(3)

Parameters

- outcomes: Tensor

Measurement outcomes. It is a Tensor of shape (batchsize, num_systems, outcomes_size) of type prec, where bs and outcomes_size are attributes of the

PhysicalModelclass.- controls: Tensor

Contains the controls for the measurement. This is a Tensor of shape (bs, num_systems, controls_size) and type prec, where bs and controls_size are attributes of the

PhysicalModelclass.- parameters: Tensor

Values of the unknown parameters. It is a Tensor of shape (bs, num_systems, d) and type prec, where bs and d are attributes of the

PhysicalModelclass.- state: Tensor

Current state of the quantum probe, computed from the evolution determined (among other factors) by the encoding of parameters. This is a Tensor of shape (bs, num_systems, state_specifics[“size”]), where state_specifics is an attribute of the

PhysicalModelclass. Its type is state_specifics[“type”].- meas_step: Tensors

The index of the current measurement on the probe system. The Counting starts from zero. This is a Tensor of shape (bs, num_systems, 1) and of type int32.

Returns

- prob: Tensor

Probability of observing the given vector of outcomes, having done a measurement with the given controls, parameters, and states. It is a Tensor of shape (bs, num_systems) and type prec. bs and prec are attributes of the

PhysicalModelclass.- state: Tensor

State of the probe after the encoding of parameters and the application of the measurement backreaction, associated with the observation of the given outcomes. This is a Tensor of shape (bs, num_systems, state_specifics[“size”]), where state_specifics is an attribute of the

PhysicalModelclass.

- perform_measurement(controls: Tensor, parameters: Tensor, true_state: Tensor, meas_step: Tensor, rangen: Generator) Tuple[Tensor, Tensor, Tensor]

Performs the stochastic extraction of measurement outcomes and updates the state of the probe.

Achtung! This method must be implemented by the user.

Samples measurement outcomes to simulate the experiment and returns them, together with the likelihood of obtaining such outcomes and the evolved state of the probe after the measurement. Typically, this function contains at least one call to the

model()method, which produces the probabilities for the outcome sampling.Achtung! When using the

StatefulPhysicalModelclass in combination withBoundSimulationthe methodperform_measurement()can be implemented to return an outcome extracted from an arbitrary probability distribution, which can be different from the one predicted by the true model of the system. This is used to introduce an importance sampling on the probe trajectories. The log_prob outcome should be the log-likelihood of the outcome according to the modified distribution, while true_state remains the state of the system conditioned on the observation of the outcome. It is important thatmodelimplement the true probability. This feature should be used together with the activation of the flag importance_sampling in the constructor of theBoundSimulationobject, and should not be used for a Bayesian estimation carried out with theSimulationclass.Parameters

- controls: Tensor

Contains the controls for the current measurement. This is a Tensor of shape (bs, 1, controls_size) and type prec, where bs and controls_size are attributes of the

PhysicalModelclass.- parameters: Tensor

Contains the true values of the unknown parameters in the simulations. The observed measurement outcomes must be simulated according to them. It is a Tensor of shape (bs, 1, d) and type prec, where bs and d are attributes of the

PhysicalModelclass. In the estimation, these values are not observable, only their effects through the measurement outcomes are.- true_states: Tensor

The true state of the probe in the estimation, computed from the evolution determined (among other factors) by the encoding of the parameter true_values. Like true_values, this information is not observable. This is a Tensor of shape (bs, 1, state_specifics[“size”]), where state_specifics is an attribute of the

PhysicalModelclass. Its type is state_specifics[“type”].- meas_step: Tensor

The index of the current measurement on the probe system. The counting starts from zero. This is a Tensor of shape (bs, 1, 1) and of type int32.

- rangen: Generator

A random number generator from the module

tensorflow.random.

Returns

- outcomes: Tensor

The observed outcomes of the measurement. This is a Tensor of shape (bs, 1, outcomes_size) and of type prec. bs, outcomes_size, and prec are attributes of the

PhysicalModelclass.- log_prob: Tensor

The logarithm of the probabilities of the observed outcomes. This is a Tensor of shape (bs, 1) and of type prec. bs and prec are attributes of the

PhysicalModelclass.- true_state: Tensor

The true state of the probe, encoded with the parameters true_values, after the measurement backreaction corresponding to outcomes is applied. This is a Tensor of shape (bs, 1, state_specifics[“size”]), where state_specifics is an attribute of the

PhysicalModelclass. Its type is state_specifics[“type”].

- wrapper_count_resources(resources, outcomes, controls, true_values, state, meas_step) Tuple[Tensor]

wrapper_model

stateless_physical_model

Module containing the stateless version

of PhysicalModel.

- class StatelessPhysicalModel(batchsize: int, controls: List[Control], params: List[Parameter], outcomes_size: int = 1, prec: str = 'float64')

Bases:

PhysicalModelAbstract description of a stateless quantum probe.

Constructor of the

StatelessPhysicalModelclass.Parameters

- batchsize: int

Batch size of the physical model, i.e., the number of simultaneous estimations in the simulation.

- controls: List[

Control] A list of controls for the probe (the buttons and knobs of the experiment).

- params: List[

Parameter] A list of

Parameterobjects that represent the unknowns of the estimation.- outcomes_size: int = 1

Number of scalars collected in a measurement on the probe.

- prec: str = “float64”

Floating point precision of the controls, outcomes, and parameters. Can be either “float32” or “float64”.

- count_resources(resources: Tensor, outcomes: Tensor, controls: Tensor, true_values: Tensor, meas_step: Tensor) Tensor

Updates the resource Tensor, which contains, for each estimation in the batch, the amount of resources consumed up to the point this method is called.

Achtung! This method has to be implemented by the user.

The optimization of the estimation precision and the visualization of the performances of an agent are organized within the precision-resources paradigm. The precision is identified with the loss to be minimized in the training cycle, defined in

loss_function(), but the precisions of two instances of the same metrological task can only be fairly compared if the costs involved in them is the same. This cost is what we call resource. Some examples of resources are the number of measurements on the probe, the number of identical codified states consumed, the amplitude of a signal, the total measurement time, etc. There is no right or wrong resource in an estimation task, it depends on the user choices and on his understanding of the limitations in the laboratory implementation of the task.For each measurement, the method

count_resources()computes a scalar value based on the controls of the measurement, the measurement step counter, and the true values of the parameter. This scalar is interpreted as the partial resourcesconsumed in the measurement to which the parameters in the call are referring. These partial resources are summed step by step to get the total amount of consumed resources at step

of the measurement loop in

execute(), i.e.(4)

The measurement loop stops when the total amount of consumed resources reaches the value of the attribute

max_resourcesof the classSimulationParameters, set by the user.The definition of the resources doesn’t only have an impact on the stopping condition of the measurement loop, but it defines how the performances of an agent are visualized. The function

performance_evaluation()produces a plot of the mean loss for the estimation task as a function of the consumed resources. Different definitions of the methodcount_resources()will produce different plots.Parameters

- resources: Tensor

A Tensor of shape (bs, 1) containing the total amount of consumed resources for each estimation in the batch up to the point this method is called.

- outcomes: Tensor

The observed outcomes of the measurement. This is a Tensor of shape (bs, 1, outcomes_size) and of type prec. bs, outcomes_size, and prec are attributes of the

PhysicalModelclass.- controls: Tensor

Controls of the last measurement on the probe. It is a Tensor of shape (bs, controls_size) and of type prec, with bs, controls_size, and prec are attributes of the

PhysicalModelclass.- true_values: Tensor

Contains the true values of the unknown parameters in the simulations. It is a Tensor of shape (bs, 1, d) and type prec, with bs and d being attributes of the

PhysicalModelclass.- meas_step: Tensor

The index of the current measurement on the probe system. The counting starts from zero. It is a Tensor of shape (bs, 1) and of type int32.

Return

- Tensor

Resources consumed in the current measurement step, for each simulation in the batch. It is a Tensor of shape (bs, 1) of type prec.

Examples

For the estimation of a magnetic field with an NV-center, two common choices for the resources are the number of Ramsey measurements, which mean

, and the employed time, which is

, where

is the interaction time between the magnetic field and the probe.

- model(outcomes: Tensor, controls: Tensor, parameters: Tensor, meas_step: Tensor, num_systems: int = 1) Tensor

Description of the encoding and the measurement on the probe. This method returns the probability of observing a certain outcome after a measurement.

Achtung! This method must be implemented by the user.

Achtung! This method does not implement any stochastic evolution. The stochastic extraction of the outcomes should be defined in the method

perform_measurement().Suppose that the state of the probe after the encoding is

, where

is parameter and

is control. The probe measurement is associated with an ensemble of positive operators

, where

is the outcome and

is the set of possible outcomes. According to the laws of quantum mechanics, the probability of observing the outcome

is then

(5)

Parameters

- outcomes: Tensor

Measurement outcomes. It is a Tensor of shape (bs, num_systems, outcomes_size) of type prec, where bs, outcomes_size, and prec are attributes of the

PhysicalModelclass.- controls: Tensor

Contains the controls for the measurement. This is a Tensor of shape (bs, num_systems, controls_size) and type prec, where bs, controls_size, and prec are attributes of the

PhysicalModelclass.- parameters: Tensor

Values of the unknown parameters. It is a Tensor of shape (bs, num_systems, d) and type prec, where bs, d, and prec are attributes of the

PhysicalModelclass.- meas_step: Tensors

The index of the current measurement of the probe system. The counting starts from zero. This is a Tensor of shape (bs, num_systems, 1) and of type int32.

Returns

- prob: Tensor

Probability of observing the given outcomes vector, having done a measurement with the given controls and parameters. It is a Tensor of shape (bs, num_systems) and of type prec. bs and prec are attributes of the

PhysicalModelclass.

- perform_measurement(controls: Tensor, parameters: Tensor, meas_step: float, rangen: Generator) Tuple[Tensor, Tensor]

Performs the stochastic extraction of measurement outcomes.

Achtung! This method must be implemented by the user.

Samples measurement outcomes to simulate the experiment and returns them, together with the likelihood of obtaining such outcomes. Typically, this function contains at least one call to the

model()method, which produces the probabilities for the outcome sampling.Parameters

- controls: Tensor

Contains the controls for the current measurement. This is a Tensor of shape (bs, 1, controls_size) and type prec, where bs and controls_size are attributes of the

PhysicalModelclass.- parameters: Tensor

Contains the true values of the unknown parameters in the simulations. The observed measurement outcomes must be simulated according to them. It is a Tensor of shape (bs, 1, d) and type prec, where bs and d are attributes of the

PhysicalModelclass. In the estimation, these values are not observable, only their effects through the measurement outcomes are.- meas_step: Tensor

The index of the current measurement on the probe system. The counting starts from zero. This is a Tensor of shape (bs, 1, 1) and of type int32.

- rangen: Generator

A random number generator from the module

tensorflow.random.

Returns

- outcomes: Tensor

The observed outcomes of the measurement. This is a Tensor of shape (bs, 1, outcomes_size) and of type prec. bs, outcomes_size, and prec are attributes of the

PhysicalModelclass.- log_prob: Tensor

The logarithm of the probabilities of the observed outcomes. This is a Tensor of shape (bs, 1) and of type prec. bs and prec are attributes of the

PhysicalModelclass.

- wrapper_count_resources(resources, outcomes, controls, true_values, state, meas_step) Tuple[Tensor]

wrapper_model

particle_filter

Submodule containing the class ParticleFilter

necessary for Bayesian estimation.

- class ParticleFilter(num_particles: int, phys_model: PhysicalModel, resampling_allowed: bool = True, resample_threshold: float = 0.5, resample_fraction: float = 0.98, alpha: float = 0.5, beta: float = 0.98, gamma: float = 1.0, scibior_trick: bool = True, trim: bool = True, prec: str = 'float64')

Bases:

objectParticle filter object, with methods to reset the particle ensemble and perform Bayesian filtering for quantum metrology.

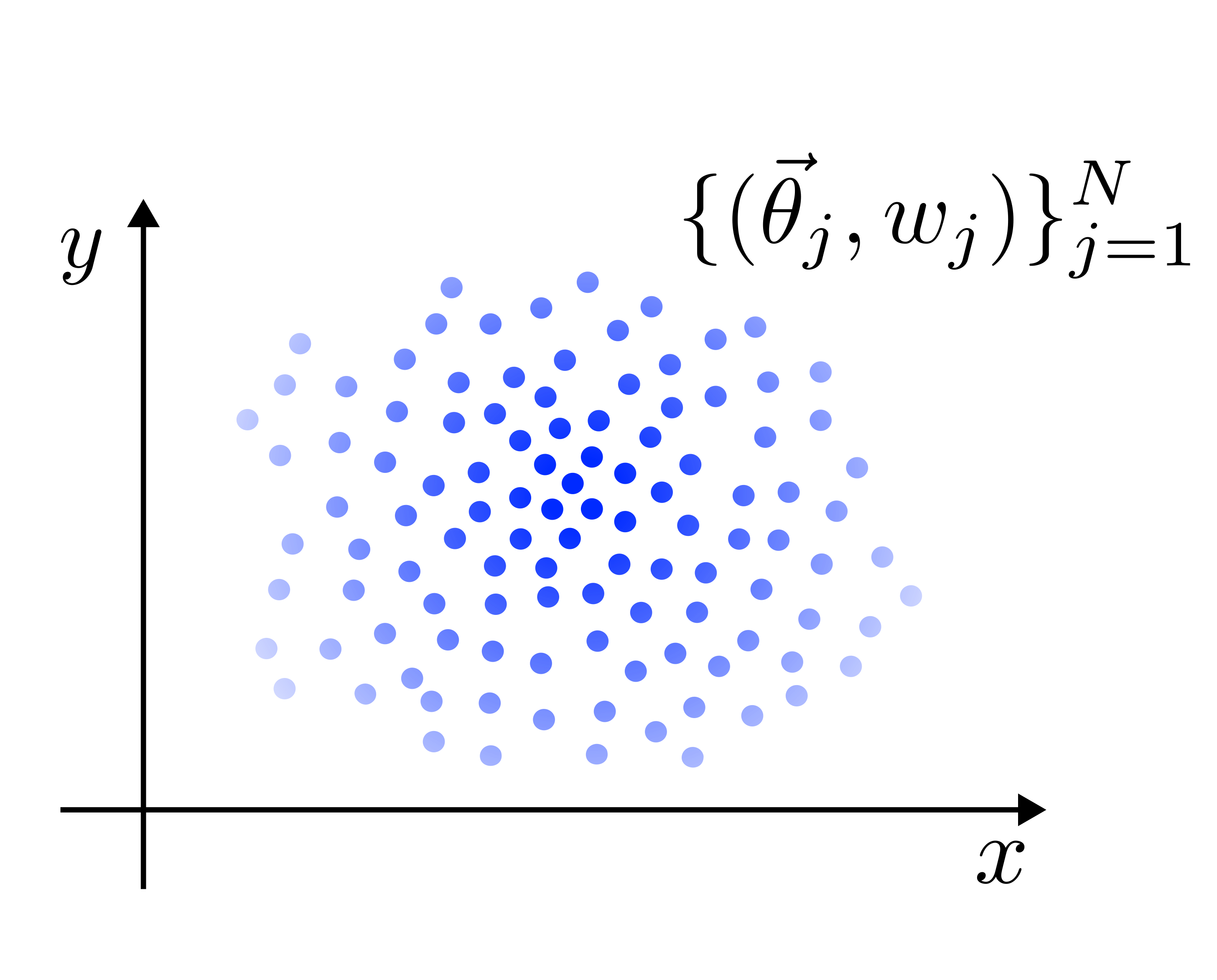

Given the tuple of unknown parameters

, in the Bayesian framework we start from a prior

and update it after each observation through the Bayes rule. In simulating such procedure (called Bayesian filtering) we need a computer representation of a generic probability distribution on the parameters. We can approximate a distribution

with a weighted sum of delta functions as follows:

(6)

where the vectors

are called particles, while the real numbers

are the weights. The integer

is the attribute np of the

ParticleFilterobject. The core idea is that the posterior distribution is represented by an ensemble of particles and weights. The Bayesian filtering techniques that use this kind of representations for the posterior are called particle filter methods.

In the figure, we represent the ensemble of a two-dimensional particle filter, where the intensity of the color is proportional to the weight associated with each particle.

The update of the posterior in the particle filter becomes the update of the weights

.

Attributes

- bs: int

Batchsize of the particle filter, i.e. number of Bayesian estimations performed simultaneously.

- np: int

Parameter num_particles passed to the constructor of the class. It is the number of particles in the ensemble.

- phys_model:

PhysicalModel Abstract description of the physical model of the quantum probe passed to the

ParticleFilterconstructor. It also contains the description of the parameters to estimate, on the basis of which the particles of the ensemble are initialized.- resampling: bool

Flag resampling_allowed passed to the constructor of

ParticleFilter.Achtung! This attribute has no effect inside the methods of the

ParticleFilter. Calling the methodfull_resampling()will resample the particles if needed also with resampling=False.- state_size: int

Size of the state of the probe, it is phys_model.state_specifics[“size”], where phys_model is the attribute of

ParticleFilter.- state_type: str

Type of the state vector of the probe, it is phys_model.state_specifics[“type”], where phys_model is the attribute of

ParticleFilter.- d: int

Number of unknown parameters of the simulation, it is the length of the attribute params.

- prec: str

Floating point precision of the parameter values. Can be either float32 or float64. It is the parameter prec passed to the constructor.

Notes

This class can deal with a whole batch of particle filter ensembles that are updated simultaneously. The batchsize attribute bs is taken from phys_model in the initialization.

This implementation of the particle filter is fully differentiable and can, therefore, be used in the training loop of a neural network agent that controls the experiment. It is trivial to see that the Bayes update is differentiable, while the differentiability of the resampling 3 is achieved through a combination of soft resampling 4 and the method of Ścibior and Wood 5.

Constructor of the

ParticleFilterclass.Parameters

- num_particles: int

Number of particles in the ensemble of the particle filter.

- phys_model:

PhysicalModel Contains the physics of the quantum probe and the methods to operate on its state and compute the probabilities of observing a certain outcome in a measurement. This object is used in the

apply_measurement()method, which performs the Bayesian update on the particle weights.- resampling_allowed: bool = True

Controls whether the resampling is allowed or not for a particular instance of

ParticleFilter. The typical case in which we set resampling_flag=False is that of hypothesis testing, where we have a single discrete parameter and num_particles equals the number of hypotheses.This flag isn’t used directly in this class, but in the

StatelessSimulationandStatefulSimulationclasses, which use the methods ofParticleFilter.- resample_threshold: float = 0.5

The method

full_resampling()verifies the need for resampling for a certain particle filter ensemble according to the effective particle number, defined as(7)

Indicating with

the resample_threshold, the particle filter identifies an ensemble as in need of resampling when

.

- resample_fraction: float = 0.98

The method

full_resampling()triggers the resampling for the whole batch of particle filter ensembles if at least a fraction resample_fraction of simulations are in need of resampling. Also those simulations in the batch that don’t need resampling will be resampled.- alpha: float = 0.5

In the method

resample()an importance sampling of the particles is performed, where the extraction is done from a distribution, being the one defined by the ensemble mixed with a uniform distribution on the particles. This procedure, called soft resampling, aims to make the update step differentiable. The mixing coefficient is the parameter alpha, and it is indicated within the equations.

This parameter quantitatively controls the flow of derivatives through the resampling routine and the effectiveness of the resampling. With alpha=1, regular resampling without mixing occurs, but no derivatives can flow through this operation. With alpha=0, differentiability is achieved, but no resampling is done. There is a tradeoff between the efficiency of the soft resampling and its differentiability.

- beta: float = 0.98

In the method

resample(), a Gaussian perturbation of the particles obtained from the soft resampling is performed. The intensity of this perturbation is controlled by the parameter 1.0-beta, which is indicated byin the equations.

- gamma: float = 1.0

This parameter controls the fraction of num_particles that are extracted in the soft resampling. The remaining fraction of particles, 1.0-gamma, are newly generated from a Gaussian distribution having the same mean and variance as the posterior distribution before the resampling. This parameter, like alpha and beta, regulates the behavior of the

resample()method and is indicated byin the equations.

Achtung! Proposing new particles during a resampling won’t work when one ore more discrete parameters are present. The advice in these cases is to keep gamma=1.0.

- scibior_trick: bool = True

This flag controls the use of the method proposed by Ścibior and Wood to make the resampling differentiable. It can be used alone or together with soft resampling to improve the flow of the gradient through the particle ensemble resampling steps.

- trim: bool = True

After the Gaussian perturbation of the particles or their extraction from scratch from a Gaussian, they may fall outside the admissible bounds for the parameters. If trim=True, they are cast again inside the bounds using the function

trim_single_param().- prec: str = “float64”

This parameter indicates the floating-point precision of the particles and weights, it can be either float64 or float32.

- 3

Granade et al, New J. Phys. 14 103013 (2012).

- 4

Ma, P. Karkus, D. Hsu, and W. S. Lee, arXiv:1905.12885 (2019).

- 5

Ścibior, F. Wood, arXiv:2106.10314 (2021).

- apply_measurement(weights: Tensor, particles: Tensor, state_ensemble: Tensor, outcomes: Tensor, controls: Tensor, meas_step: Tensor) Tuple[Tensor, Tensor]

Bayesian update of the weights of the particle filter.

Consider

the outcome of the last observation on the probe and

the control. The weights are updated as

(8)

where

is the probability of observing the outcome

under the control

, and

the j-th particles with its associated state

before the encoding and the measurement.

Parameters

- weights: Tensor

A Tensor of weights with shape (bs, np) and type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- particles: Tensor

A Tensor of particles with shape (bs, np, d) and type prec. bs, np, d, and prec are attributes of the

ParticleFilterclass.- state_ensemble: Tensor

The state of the probe system before the last measurement for each particle. It is a Tensor of shape (bs, np, state_size) and of type state_type. Here, state_size and state_type are attributes of

ParticleFilter. In the Bayes rule formula, the state_ensemble is indicated with.

- outcomes: Tensor

The outcomes of the last measurement on the probe. It is a Tensor of shape (bs, phys_model.outcomes_size) and of type prec. In the Bayes rule formula, it is indicated with

.

- controls: Tensor

The controls of the last measurement on the probe. It is a Tensor of shape (bs, phys_model.controls_size) and of type prec. In the Bayes rule formula, it is indicated with

.

- meas_step: Tensor

The index of the current measurement on the probe system. The counting starts from zero. It is a Tensor of shape (bs, 1) and of type int32.

Returns

- weights: Tensor

Updated weights after the application of the Bayes rule. It is a Tensor of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- state_ensemble: Tensor

Probe state associated to each particle after the measurement backreaction associated to outcomes. It is a Tensor of shape (bs, np, state_size) and of type state_type. bs, np, state_size, and state_type are attributes of the

ParticleFilterclass.

Notes

If an outcome is observed that is not compatible with any of the particles, this method ignores the measurements for the corresponding batch element. Such observation is treated as an outlier.

- check_resampling(weights: Tensor, count_for_resampling: Tensor) Tensor

Checks the resampling condition on the effective number of particles for each simulation in the batch.

A particle filter ensemble is in need of resampling when the effective number of particles, defined in Eq.7, is less then a fraction resample_threshold of the total particle number.

Parameters

- weights: Tensor

Tensor of weights of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- count_for_resampling: Tensor

Tensor of bool of shape (bs, 1), that says whether a certain ensemble in the batch should be counted or not in the total number of active simulation. Some estimations in the batch may have already terminated and are not to be counted in assessing the need of launching the resampling routine. If a fraction resample_fraction of the active simulations needs resampling then the whole batch is resampled.

Returns

- trigger_resampling: Tensor

Tensor of shape (bs, ) and of type bool that indicates whether the corresponding batch element should undergo resampling or not.

- compute_covariance(weights: Tensor, particles: Tensor, batchsize: Optional[Tensor] = None, dims: Optional[int] = None) Tensor

Computes the covariance matrix of the Bayesian posterior distribution.

The empirical covariance matrix of the particle filter ensemble is defined as:

(9)

where

is the mean value returned by the method

compute_mean().Parameters

- weights: Tensor

A Tensor of weights of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- particles: Tensor

A Tensor of particles of shape (bs, np, d) and of type prec, bs, np, d, and prec are attributes of the

ParticleFilterclass.- dims: int, optional

This method can deal with a last dimension for the particles parameter different from the attribute d of the

ParticleFilterobject. If this optional parameter is passed to the method call, then the shape of particles is expected to be (bs, np, dims). Its typical use is when we compute the covariance of a subset only of the parameters.

Returns

- Tensor

A Tensor of shape (bs, d, d) of type prec with the covariance matrix of the Bayesian posterior for each ensemble in the batch. if dims was passed to the function call then the outcome Tensor has shape (bs, dims, dims).

- compute_max_weights(weights: Tensor, particles: Tensor) Tensor

Returns the particle with the maximum weight in the ensemble.

Achtung! This method does not return the true maximum of the posterior if the particles are not equidistant in the parameter space. In general, it should not be used if the resampling is active or if the initialization of the particles in the ensemble is stochastic. Its use case is for pure hypothesis testing, where it returns the most plausible hypothesis.

Parameters

- weights: Tensor

A Tensor of weights with shape (bs, np) and type prec.

- particles: Tensor

A Tensor of particles with shape (bs, np, d) and type prec. Here, d is the attribute of the

ParticleFilterobject.

Returns

- Tensor

A Tensor of shape (bs, d) and type prec with the particle having the highest weight for each ensemble in the batch.

- compute_mean(weights: Tensor, particles: Tensor, batchsize: Optional[Tensor] = None, dims: Optional[int] = None) Tensor

Computes the empirical mean of the unknown parameters based on the particle filter posterior distribution.

The mean

is computed as follows:

(10)

are the weights of the particles, and

are the particles.

Parameters

- weights: Tensor

A Tensor of weights with shape (bs, np) and type prec.

- particles: Tensor

A Tensor of particles with shape (bs, np, d) and type prec, where d is the attribute of the

ParticleFilterobject.- dims: int, optional

This method can handle a particles parameter with a last dimension different from the attribute d of the

ParticleFilterclass. If dims is passed to the method call, the shape of particles is expected to be (bs, np, dims). This parameter is typically used when computing the mean of a subset of the parameters.

Returns

- Tensor

The mean of the unknown parameters computed on the posterior distribution represented by weights and particles. It is a Tensor of shape (bs, d), or possibly (bs, dims) if dims is defined. Its type is prec.

- compute_state_mean(weights: Tensor, state_ensemble: Tensor, batchsize: Tensor) Tensor

Compute the mean state of a particle filter ensemble.

Given the weight

and state

of the j-th particle in the ensemble, the mean state is defined as:

(11)

Parameters

- weights: Tensor

Tensor of particle weights with shape (bs, np) and type prec.

- state: Tensor

Tensor of states for the probe, with shape (bs, np, state_size) and type state_type.

Returns

- Tensor

Mean state for each estimation in the batch computed with the posterior distribution. It is a Tensor of shape (bs, state_size) and type state_type. state_size and state_type are attributes of the

ParticleFilterclass.

- extract_particles(weights: Tensor, particles: Tensor, num_extractions: int, rangen: Generator) Tensor

Extracts num_extractions particles from the posterior distribution represented by the particle filter ensemble.

Parameters

- weights: Tensor

A Tensor of weights with shape (bs, np) and type prec.

- particles: Tensor

A Tensor of particles with shape (bs, np, d) and type prec. Here, d is the attribute of the

ParticleFilterobject.- num_extraction: int

The number of particles to be stochastically extracted.

- rangen: Generator

A random number generator from the module

tensorflow.random.

Returns

- Tensor

A Tensor of shape (bs, num_extractions, d), of type prec, containing the num_extraction particles sampled from the posterior distribution.

- full_resampling(weights: Tensor, particles: Tensor, count_for_resampling: Tensor, rangen: Generator) Tuple[Tensor, Tensor, Tensor]

Checks the resampling condition on the effective number of particles and applies the resampling if needed.

A particle filter ensemble is in need of resampling when the effective number of particles, defined in Eq.7, is less then a fraction resample_threshold of the total particle number. The whole batch of particle filters gets resampled if at least a fraction resample_fraction of the active simulations, defined by count_for_resampling, is in need of resampling.

Parameters

- weights: Tensor

Tensor of weights of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- particles: Tensor

Tensor of particles of shape (bs, np, d) and of type prec. bs, np, d, and prec are attributes of the

ParticleFilterclass. d is the attribute ofParticleFilter.- count_for_resampling: Tensor

Tensor of bool of shape (bs, 1), that says whether a certain ensemble in the batch should be counted or not in the total number of active simulation. Some estimations in the batch may have already terminated and are not to be counted in assessing the need of launching the resampling routine. If a fraction resample_fraction of the active simulations needs resampling then the whole batch is resampled.

- rangen: Generator

Random number generator from the module

tensorflow.random.

Returns

- weights: Tensor

Resampled weights. Tensor of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- particles: Tensor

Resampled particles. Tensor of shape (bs, np, d) and of type prec. bs, np, d, and prec are attributes of the

ParticleFilterclass.- resample_flag: Tensor

Tensor of shape (, ) of type bool that informs whether the resampling has been executed or not.

- partial_resampling(weights: Tensor, particles: Tensor, count_for_resampling: Tensor, rangen: Generator) Tuple[Tensor, Tensor, Tensor]

Checks the resampling condition on the effective number of particles and applies the resampling if needed.

A particle filter ensemble is in need of resampling when the effective number of particles, defined in Eq.7, is less then a fraction resample_threshold of the total particle number. The whole batch of particle filters gets resampled if at least a fraction resample_fraction of the active simulations, defined by count_for_resampling, is in need of resampling.

Parameters

- weights: Tensor

Tensor of weights of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- particles: Tensor

Tensor of particles of shape (bs, np, d) and of type prec. bs, np, d, and prec are attributes of the

ParticleFilterclass. d is the attribute ofParticleFilter.- count_for_resampling: Tensor

Tensor of bool of shape (bs, 1), that says whether a certain ensemble in the batch should be counted or not in the total number of active simulation. Some estimations in the batch may have already terminated and are not to be counted in assessing the need of launching the resampling routine. If a fraction resample_fraction of the active simulations needs resampling then the whole batch is resampled.

- rangen: Generator

Random number generator from the module

tensorflow.random.

Returns

- weights: Tensor

Resampled weights. Tensor of shape (bs, np) and of type prec. bs, np, and prec are attributes of the

ParticleFilterclass.- particles: Tensor

Resampled particles. Tensor of shape (bs, np, d) and of type prec. bs, np, d, and prec are attributes of the

ParticleFilterclass.

- recompute_state(index: Tensor, particles: Tensor, hist_control_rec: Tensor, hist_outcomes_rec: Tensor, hist_continue_rec: Tensor, hist_step_rec: Tensor, num_steps: int) Tensor

Routine that recomputes the state ensemble starting from the measurement outcomes and the controls.

When a resampling of the particle filter ensemble is carried out, a new set of particles is produced. In this situation state_ensemble generated by

initialize_state()and updated in the measurement loop inside the methodexecute()still refers to the old particles. The only way to align the state ensemble with the new particles is to re-initialise and re-evolve the state ensemble with the applied controls, the observed outcomes, and the new particles. That is the role of this method.Parameters

- index: Tensor

Tensor of shape (,) and type int32 that contains the number of iterations of the measurement loop in

execute(), as this method is called.- particles: Tensor

Tensor of particles of shape (bs, np, d) and of type prec. d is an attribute of

ParticleFilter.- hist_outcomes_rec: Tensor

Tensor of shape (num_step, bs, phys_model.outcomes_size) and of type prec. It contains the history of the outcomes of the measurements for all the estimations in the batch up to the point at which this method is called. It is generated automatically in the

execute()method.- hist_control_rec: Tensor

Tensor of shape (num_steps, bs, phys_model.control_size) and of type prec. It contains the history of the controls of the measurements for all the estimations in the batch up to the point at which this method is called. It is generated automatically in the

execute()method.- hist_step_rec: Tensor

Tensor of shape (num_steps, bs, 1) that contains, for each loop of the measurement cycle, the index of the last measurement performed on each estimation in the batch. Different estimations can terminate with a different number of total measurements performed. This Tensor is fundamentally a table that shows for each loop of the measurement cycle (row index) the number of measurements performed up to that point for each estimation (column index). Because for some loops the measurement might be applied only to a subset of estimations, some cells can show a number of measurements lower than the current number of completed loops in the measurement cycle. This Tensor is generated automatically in the

execute()method.- hist_continue_rec: Tensor

A Tensor of shape (num_steps, bs, 1) and type int32, hist_continue_rec is a flag Tensor indicating whether a simulation in the batch is ended or not. This flag is necessary because different simulations may stop at different numbers of measurements. The typical column of hist_continue_rec consists of a series of contiguous ones, say in number of M, followed by zeros until the end of the row. This tells us that the particular simulation to which the row refers, consisted of M measurements so far. In recomputing the state with different values for the particles, M measurement should be performed for that particular simulation. The parameter hist_continue_rec is generated automatically in the Simulation.execute method and could be easily derived from hist_step_rec, which contains more information.

- batchsize: Tensor

Dynamical batchsize of the recomputed states.

- num_steps: int

The maximum number of steps in the training loop. It is the attribute num_steps of the data class

SimulationParameters.

Returns

- state_ensemble: Tensor

The state of the quantum probe associated with each entry of particles, that has been recomputed using the applied controls and the observed outcomes. Each entry of state_ensemble is the state of the probe computed as if the true values of the parameters were the particles of the ensemble. When these are resampled, the states do not refer anymore to the correct values of the parameters, and the only way to realign them is to recompute them from scratch. state_ensemble is a Tensor of shape (bs, np, state_size) and type state_type, where state_size and state_type are attributes of

ParticleFilter.

Notes

The stopping condition in the measurement loop, which determines the number of measurements for an estimation, depends on the number of computed resources, which depends only on the applied controls and observed outcomes. For this reason, it is possible to reuse the objects hist_step_rec and hist_continue_rec, which were generated before the resampling. For the same reason, these objects are actually superfluous in the function call, as they could be recomputed from the hist_outcomes_rec and hist_continue_rec parameters.

- resample(weights: Tensor, particles: Tensor, rangen: Generator, batchsize: Optional[Tensor] = None) Tuple[Tensor, Tensor]

Resample routine of the particle filter.

The resampling procedure works in three steps. First, we mix the probability distribution on the particles represented by the weights

with a uniform distribution and construct the soft-weights

defined as:

(12)

The new particles

are resampled from the ensemble

and the new weight associated with each resampled particle is:

(13)

where

is the original index in the old ensemble of the j-th particle in the new ensemble. The function

is in general neither injective nor surjective. Only a fraction gamma of the total particles np is resampled in this way, and their weights are normalized such that they sum to gamma, i.e.

(14)

The second step is to perturb the newly extracted particles

as follows:

(15)

where

is the mean value of the parameters calculated from the ensemble before the resampling with the method

compute_mean(), andis a random variable extracted from a Gaussian distribution, i.e.

(16)

with

being the covariance matrix of the ensemble, computed with the method

compute_covariance(). The third and last step of the resampling routine is to propose new particles. These are again extracted from a Gaussian distribution, i.e.(17)

A number

of particles are sampled in this way with

being the total number of particles np. Their weights are uniform and normalized to

, so that together with the particles produced in the first two steps, their weights sum to one.

Parameters

- weights: Tensor

Tensor of weights of shape (bs, np) and of type prec.

- particles: Tensor

Tensor of particles of shape (bs, np, d) and of type prec. d is the attribute of

ParticleFilter.- rangen: Generator

Random number generator from the module

tensorflow.random.

Returns

- new_weights: Tensor

Resampled weights. Tensor of shape (bs, np) and of type prec.

- new_particles: Tensor

Resampled particles. Tensor of shape (bs, np, d) and of type prec.

Notes

It is possible that the soft resampling produces an invalid set of new weights (i.e., all zeros) for cases where there are very few particles and highly concentrated weights. In such limit cases, the soft resampling is aborted, and a normal resampling (without mixing with the uniform distribution) is executed to ensure that valid weights are produced.

- reset(rangen: Generator) Tuple[Tensor, Tensor]

Initializes the particles and the weights of the particle filter ensemble, both uniformly.

Parameters

- rangen: Generator

A random number generator from the module

tensorflow.random.

Returns

- weights: Tensor

Contains the weights of the particles initialized to 1/np, where np is an attribute of

ParticleFilter. It is a Tensor of shape (bs, np) and type prec, where the first dimension is the number of estimations performed in the batch simultaneously (the batchsize).- particles:

Contains the particles extracted uniformly within the admissible bounds of each parameter. It is a Tensor of shape (bs, np, d), of type prec, where d is an attribute of

ParticleFilter.

Notes

This method calls the

reset()methods of eachParameterobject in the attribute params.

simulation_parameters

Module containing the SimulationParameters

dataclass.

- class SimulationParameters(sim_name: str, num_steps: int, max_resources: float, resources_fraction: float = 1.0, prec: str = 'float64', stop_gradient_input: bool = True, loss_logl_outcomes: bool = True, loss_logl_controls: bool = False, log_loss: bool = False, cumulative_loss: bool = False, stop_gradient_pf: bool = False, baseline: bool = False, permutation_invariant: bool = False)

Bases:

objectFlags and parameters to tune the

Simulationclass.This dataclass contains the attributes

num_steps,max_resources, andresources_fractionthat determine the stopping condition of the measurement loop in theexecute()method, some flags that regulate the gradient propagation and the choice of the loss in the training, and the name of the simulation in thesim_nameattribute, along with the numerical precision of the simulation inprec.

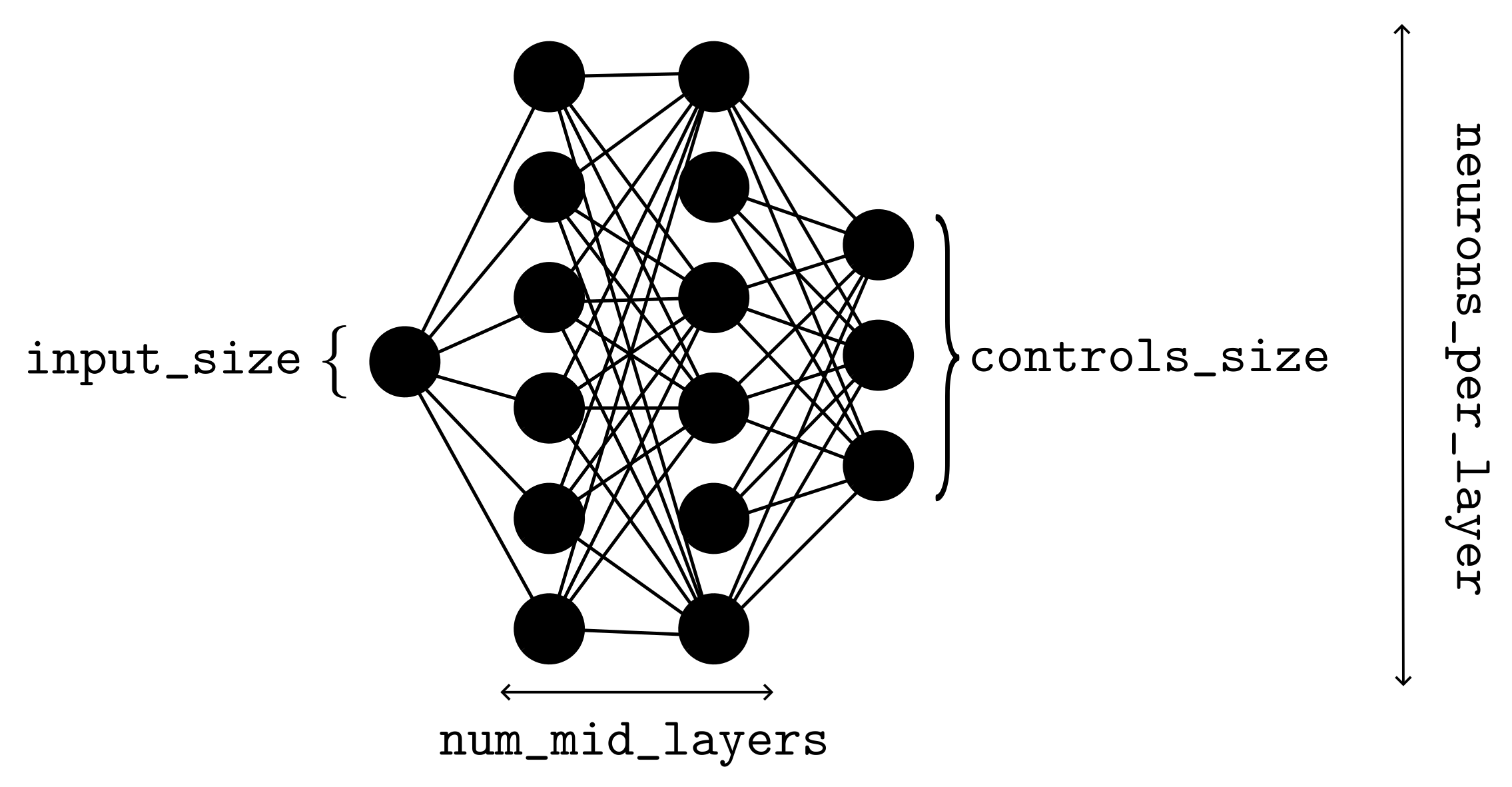

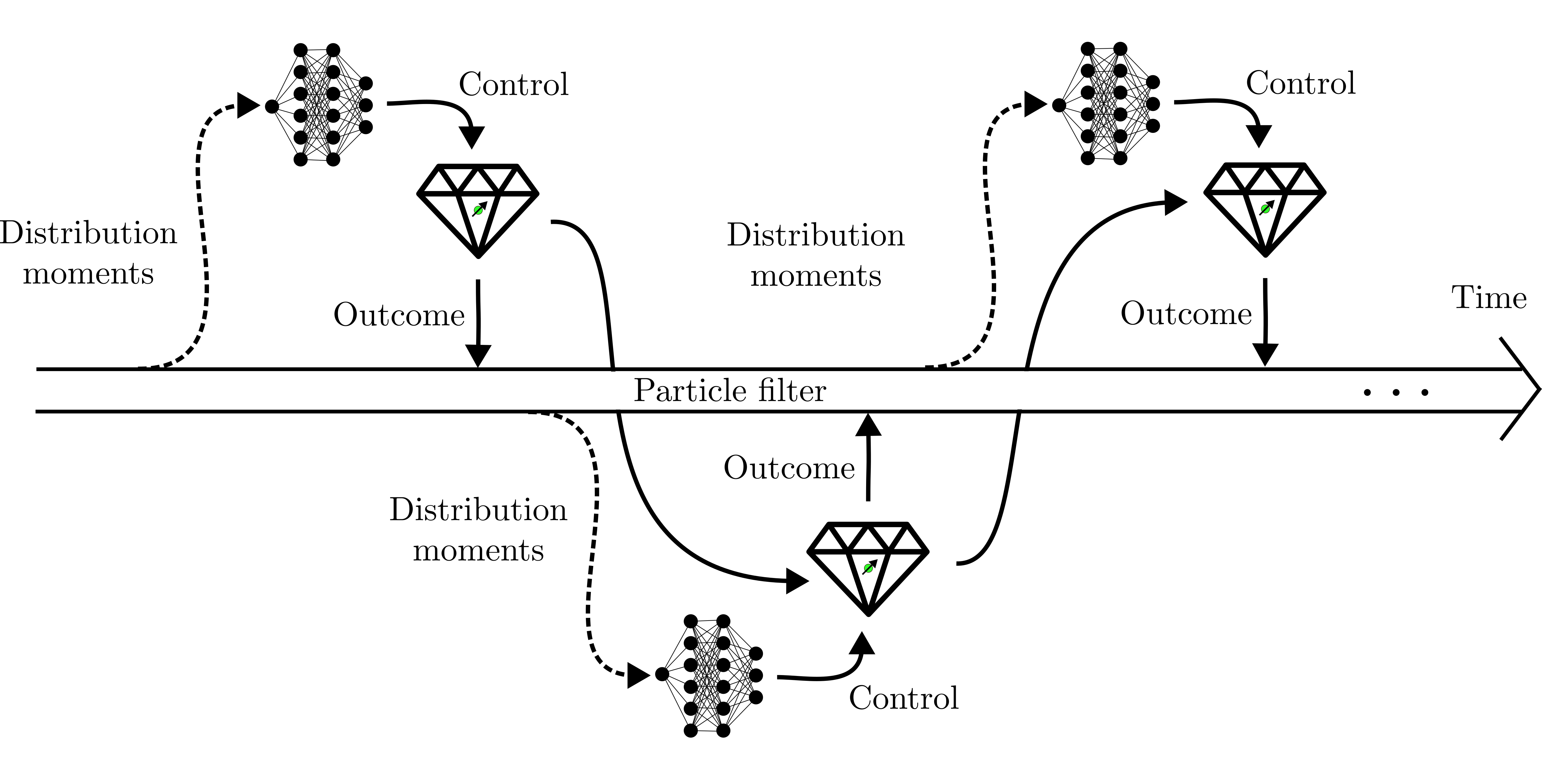

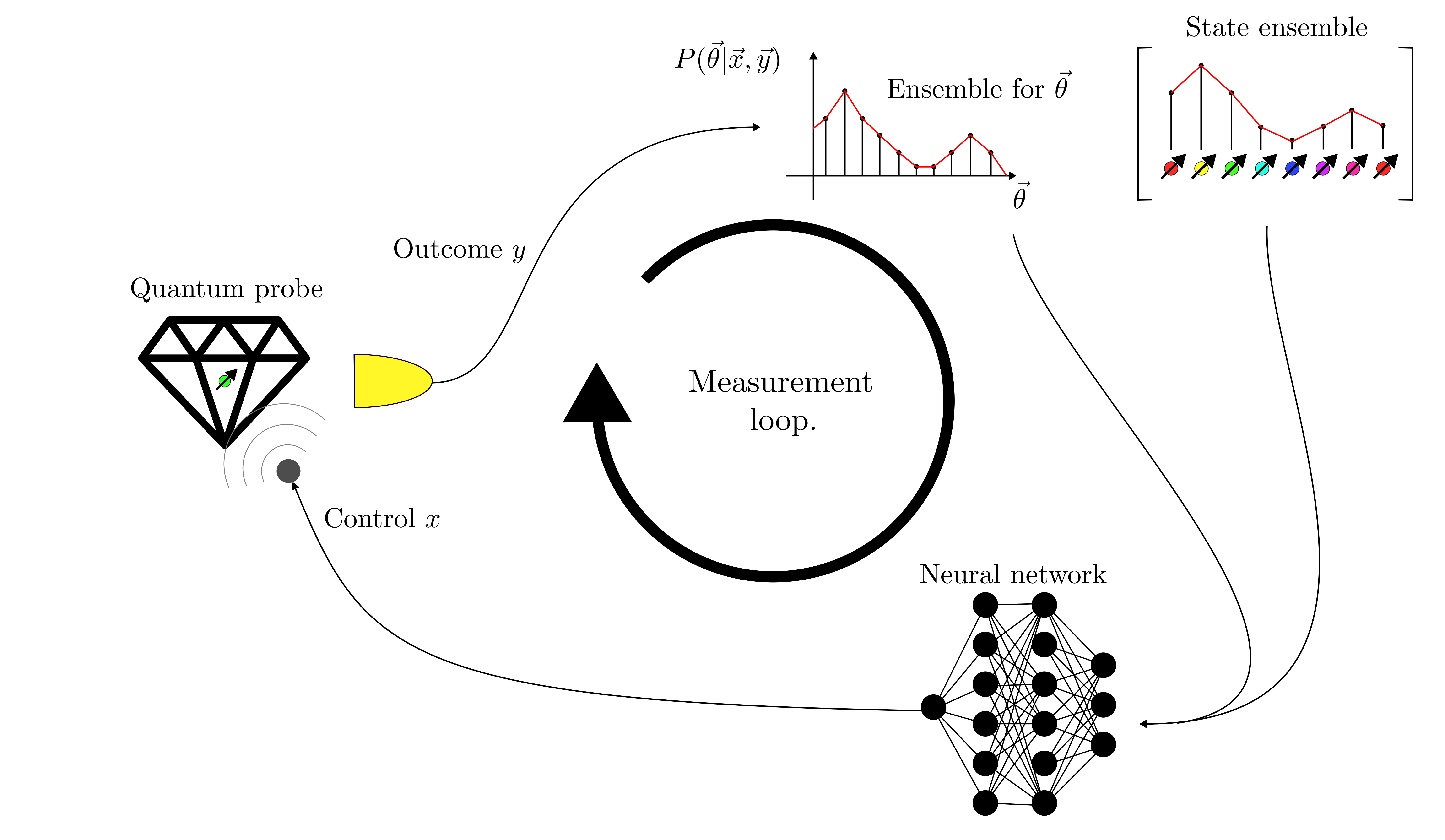

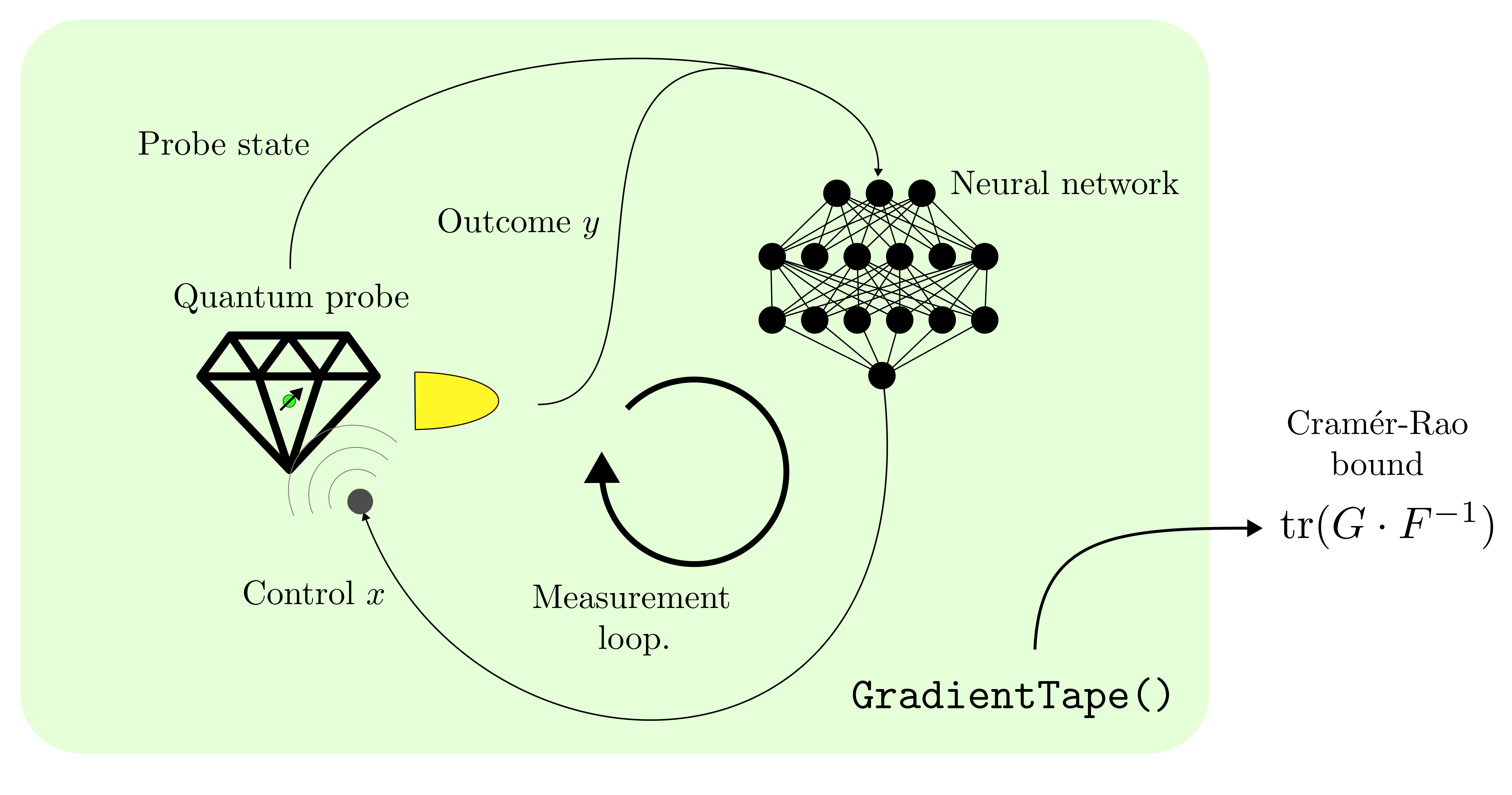

This picture represents schematically the default setting of the gradient propagation in the training. The solid arrows are the flows of information along which the gradient is propagated, while the dashed lines are the ones along which the gradient is not computed. By default the gradient is propagated along the particle filter, whose evolving state is represented by the thick blank line across the picture, along the evolving state of the probe, and through the neural network. The backpropagation of the gradient goes in the opposite direction of the information flow.

For a certain estimation in the batch we will refer to the loss produced by the method

loss_function()with the symbol, with

, containing the list of measurement outcomes

up to step

, and the true values

of the unknown parameters to be estimated. The vector

contains all the degrees of freedom of the control strategy. In case of a neural network these are the weights and the biases. The loss to be optimized is then the average of

on a batch of estimations, i.e.

(18)

where

is the batchsize. If the flag

cumulative_lossis deactivated the loss is computed at the end of the simulation, which is triggered when at least a fractionresources_fractionof estimations in the batch have terminated or when the maximum number of stepsnum_stepsin the measurement loop has been achieved. If an estimation in the batch has terminated because of the resources requirements, the last estimator before the termination is used to compute the mean loss.- baseline: bool = False

If both this flag and the attribute

loss_logl_outcomesare True then the loss of Eq.25 is changed to(19)

with

(20)

The additional term should reduce the variance of the gradient computed from the batch. The baseline

is recomputed for each step of the measurement loop.

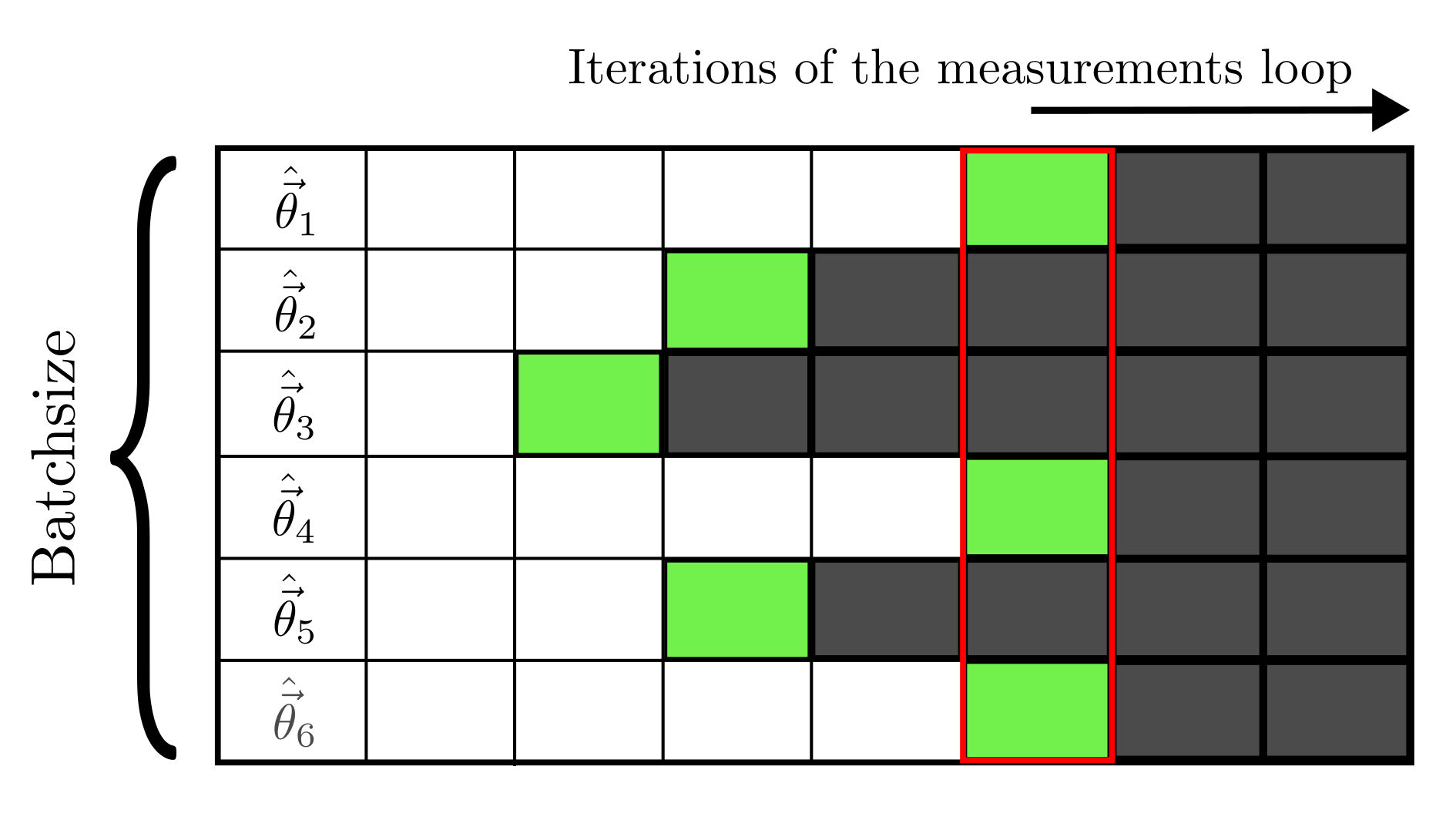

- cumulative_loss: bool = False

With this flag on the loss of Eq.18 is computed after each measurement and accumulated, so that the quantity that is differentiated for the training at the end of the loop in

execute()is(21)

The maximum number of measurement performed is

, but some estimations in the batch might terminate with less measurements. In any case the last estimator

from each simulation in the batch is used to compute the mean loss in all the subsequent steps.

In the picture a cells of the table contains an estimator for the unknown parameters. The rows are the different simulations in the batch and the columns the steps of the measurement cycle. The current measurements step is indicated with the red box. The cells highlighted in grey are those without an estimator, either because it is not yet been computed or because the estimation is already finished for that particular batch element. In green are highlighted the

estimators that are used in the computation of the mean loss at the current step. For the third estimation in the batch, that is already terminated, the most recent version of

is used for computing the mean loss in all the steps after its termination.

It follows that the last estimator of those simulations that have already exhausted the resources appear multiple times in the evaluation of the loss.

Mixing precisions that refer to different number of measurements might not be a fair way to compute the loss in the metrological task. In this case the user should define the loss function so that it contains a normalization factor

, that should be of the order of magnitude of the expected loss, i.e.

(22)

The cumulative loss is then

(23)

Achtung! This flag has no effect on a

BoundSimulationobject. For which the Fisher information on the probe trajectory is already a sort of cumulative loss.

- log_loss: bool = False

If this flag is True, then the used loss is the logarithm of

in Eq.18. In this case, if the flags

loss_logl_controlsandloss_logl_outcomesare also activated, the simulation modifies accordingly the log-likelihood terms to account for the extra logarithm in the definition of the loss. This flag can be used in conjunction withcumulative_lossto weight in the precisions at all steps in the training, while avoiding the use of a reference precision. The cumulative logarithmic loss would be

(24)

- loss_logl_controls: bool = False

If one ore more controls are discrete, that is, their flag continuous of the

Controlclass is False, then they are probably extracted stochastically from a probability distribution produced by the control strategy. If this is a neural network for example, its output could be the categorical distribution from which the controls are extracted, instead of the controls themselves. In such cases the callable attribute control_strategy of theSimulationclass takes as input the random number generator rangen alongside input_tensor and returns controls and log_prob_control, respectively the values of the controls and the logarithm of the probability of having extracted the said values.If this flag is True, then log_prob_control is mixed with the loss to compute the correct gradient. This means that an extra terms analogous to the one in Eq.25 is added to the loss, which refers to the controls instead of the outcomes.

This option should be activated only if the controls are generated stochastically.

- loss_logl_outcomes: bool = True

If True the logarithm of the likelihood of the observed measurement outcomes is mixed with the loss

in order to compute the correct gradient. The modified loss is

(25)

The additional term is needed in order to propagate the gradient through the physical model of the probe, described by the differentiable methods

perform_measurement()andmodel(), which return the logarithm of the probability of the outcomes. Without this extra term the gradient used in the training is biased. This flag must be deactivated only when the measurement outcomes are generated through a differentiable reparametrization. That is, instead of extractingdirectly from

it is obtained as

, with

being a stochastic variable extracted from a distribution independent on

and

.

- max_resources: float

Strict upper bound on the number of resources usable in an estimation, as counted by the

count_resources()method. This parameter enters in the stopping condition of the measurement loop.

- num_steps: int

Maximum number of steps in the measurement cycle of the

execute()method in theSimulationclass.

- permutation_invariant: bool = False

If the model is invariant under permutations in the unknown parameters (for all the values of the controls) this flag should be activated. Given the unknown parameters

, the ambiguity is lifted by fixing

.

- prec: str = 'float64'

Floating point precision of used in the simulation, can be either float32 or float64.

Achtung! The type of the probe state defined in the attribute state_specifics of the class

PhysicalModelcan be different from prec, but the precisions of theParticleFilter,PhysicalModel,SimulationParametersobjects, and of the neural network should all agree.

- resources_fraction: float = 1.0

Fraction of the estimations in a batch that must have exhausted the available resources for the measurement loop to stop and the batch of estimations to be declared complete. For example if resources_fraction=0.9 then only 90% of the estimations in the batch need to be terminated to stop the measurement loop of the